Introduction

USERS HAVING TROUBLE WITH EMULE, a Peer-to-Peer (P2P) file-sharing application popular in the 2000s, often sought help on its online message board. Frustrations with slow downloads, confusion about internet connections, and worries about uploading echo throughout the hundreds of posts. On July 23, 2006, one user named thelug wondered why “uploaders in the queue keep disappearing,” a problem, since eMule used a credit system to reward users for uploading files.[1] Other users responded to the thread, reporting the same mysterious problem with uploading. Later, thelug posted again with an account of a call to his or her internet service provider (ISP), Comcast, which assured thelug that it did not block eMule connections and was unsure of the cause of the problem.

Something strange was happening for Comcast customers. Two other users also noted they had issues with Comcast as the thread expanded. Over a week later, on August 11, moderator PacoBell entered the thread with a clue: Comcast did not block eMule, but rather throttled eMule traffic. Throttling allowed users to use P2P applications, but they were given lower priority. When thelug uploaded to eMule, the throttled connection was slow, so his or her downloading peers dropped thelug’s connection in favor of a faster one.

Details about throttling were sparse in 2006. Comcast had not formally announced any changes to its network, so users like thelug were left to discover them on their own. Comcast also turned out not to be the only ISP behaving strangely. P2P users had created wikis to track their problems with ISPs around the world. These lists of known issues amounted to a user-generated investigation into mysterious happenings on the internet; eMule developers, for example, started a list of bandwidth-throttling ISPs in 2006. PacoBell added Comcast to eMule’s wiki after posting in thelug’s thread.[2] The wiki included columns for both observed problems (throttling and slow uploads/downloads) and workarounds, but questions remained. Problem descriptions were vague, with observations usually consisting only of “slow uploads/downloads.” Comcast’s entry included the ostensibly more specific error message “Error 10053, dropped upload connections,” but only question marks appeared in the workaround column. eMule was not the only P2P network experiencing problems, either. Earlier, in 2005, the popular BitTorrent client Vuze (then Azureus) had started a wiki entitled “Bad ISPs.” By August 2006, the Vuze list included fifty-two ISPs from Canada, the United States, China, Europe, and Australia.[3]

Solutions were scarce because the reasons for the performance issues remained uncertain until May 2007, when Robb Topolski, also known as “FunChords,” posted a detailed study of his problems with Comcast on the popular internet news site DSLReports. Frustrated that he could not share his Tin Pan Alley and ragtime music, he took it upon himself to figure out the problem.[4] By comparing his connection with Comcast to another internet connection in Brazil, he deduced that Comcast had begun installing Sandvine traffic-management appliances in order to dynamically identify new P2P applications and throttle their bandwidth use. He wrote that the Sandvine appliances monitored traffic and interrupted P2P communications when users passed a certain threshold of bandwidth usage.[5] Public interest in Topolski’s claims prompted the Associated Press and the Electronic Frontier Foundation to investigate Comcast. Both found that Comcast had deliberately injected forged packets into communications between peers in BitTorrent networks to disrupt uploaders’ ability to establish connections.[6] The connection issues experienced by thelug and other Comcast customers were not accidental but a direct result of Comcast’s attempts to manage P2P networking.
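To make the mechanism Topolski described more concrete, the following is a toy model, in Python, of threshold-triggered interference. It is not Sandvine’s implementation, whose internals are not documented here; the `Flow` record, the `manage()` function, the protocol labels, and the one-megabyte threshold are all hypothetical. The model only marks which flows a daemon of this kind would interrupt; it does not forge any packets.

```python
from dataclasses import dataclass

# Hypothetical per-window upload threshold in bytes (not a documented Sandvine value).
UPLOAD_THRESHOLD = 1_000_000


@dataclass
class Flow:
    user: str
    protocol: str  # label assumed to come from an upstream traffic classifier
    bytes_up: int  # bytes uploaded during the current measurement window


def manage(flows: list[Flow], threshold: int = UPLOAD_THRESHOLD) -> list[tuple[str, str, str]]:
    """Toy policy: interrupt P2P flows of users whose P2P uploads exceed the threshold."""
    # First pass: account for each user's total P2P upload volume.
    p2p_totals: dict[str, int] = {}
    for f in flows:
        if f.protocol == "p2p":
            p2p_totals[f.user] = p2p_totals.get(f.user, 0) + f.bytes_up
    # Second pass: decide per flow; non-P2P traffic is always allowed.
    decisions = []
    for f in flows:
        over = p2p_totals.get(f.user, 0) > threshold
        action = "interrupt" if f.protocol == "p2p" and over else "allow"
        decisions.append((f.user, f.protocol, action))
    return decisions


flows = [
    Flow("user_a", "p2p", 800_000),
    Flow("user_a", "p2p", 400_000),
    Flow("user_a", "web", 50_000),
    Flow("user_b", "p2p", 200_000),
]
for decision in manage(flows):
    print(decision)
```

In this example, user_a’s two P2P flows together exceed the threshold, so both are marked for interruption, while the same user’s web traffic and user_b’s smaller P2P flow pass untouched. The decision depends on both classification (what kind of traffic) and accounting (how much of it).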

Comcast’s unannounced network-policy changes played a part in over ten years of legal activity related to internet regulation in the United States. The case became the leading national example of a new kind of discrimination by an ISP and a violation of the popular network neutrality principle that called for ISPs to take a hands-off approach to internet content.[7] By June 2008, six lawsuits had been filed against Comcast, including one initiated by Topolski. The lawsuits, eventually consolidated into one class-action suit, alleged that plaintiffs did not receive the high-speed, unrestricted internet access promised by Comcast.[8] After receiving formal complaints submitted by the public-interest groups Free Press and Public Knowledge, the Federal Communications Commission (FCC) investigated Comcast. In response, Comcast challenged the FCC’s jurisdiction to regulate the internet at all. Comcast won that challenge in court in 2010, but the FCC eventually secured the authority to regulate the internet, a major victory that led to the adoption of its Open Internet rules on February 26, 2015. These rules banned throttling and other net-neutrality violations until a new administration repealed them with its Restoring Internet Freedom Order on December 14, 2017. The regulatory uncertainty continues as new lawsuits have begun to contest the repeal and individual states have begun to pass their own network neutrality rules.[9]

As the right to control the internet is worked out (at least in the United States), the internet is moving on. Comcast’s treatment of eMule and BitTorrent became the industry standard. While the company did cease throttling P2P traffic, it did not stop trying to manage internet bandwidth in other ways, such as introducing a user-centric traffic management program.[10] From 2005 to 2018, the Vuze list of ISPs managing P2P grew from two ISPs to over one hundred in fifty-six countries. Something had changed, but the shifts were obscured by technical layers and buried deep within the infrastructure of these ISPs. This book is about those changes.

An Internet Possessed

The internet is possessed. Something inhuman seizes its cables, copper, and fiber, but it is not supernatural. It has more in common with Norbert Wiener and FreeBSD than with Beelzebub or Zuul. Engineers and hackers have long imagined the functions (and dysfunctions) of computers as “demonic” in a nod to physicist James Maxwell’s celebrated thought experiment. To question the second law of thermodynamics, Maxwell conceived of a demon tirelessly sorting gas molecules between two chambers.[11] Famed originator of cybernetics Norbert Wiener employed Maxwell’s demon to explain how information processing created meaning in the world. For Wiener, “demons” could be found everywhere working to prevent entropy through their active control of a system.[12] Maxwell’s demon also influenced early computer researchers at MIT, especially those working on the Compatible Time-Sharing System, one of the first time-sharing operating systems. For these early hackers, a “daemon” (note their spelling change) was a “program that is not invoked explicitly, but lies dormant waiting for some condition(s) to occur,” with the idea being that “the perpetrator of the condition need not be aware that a daemon is lurking.”[13] Robb Topolski and other eMule users found their own daemons when diagnosing their connection issues, initially unaware of the daemons’ influence until they looked.

Step into a server room and hear the dull roar of daemons today. Watch the flickering lights on servers representing the frenzy of their packet switching. Behind the banks of servers, pulses of electricity, bursts of light, and streams of packets course through the wires, fibers, and processors of the internet. Daemons animate the routers, switches, and gateways of the internet’s infrastructure, as well as our personal computers and other interfaces. These computers need daemons to connect to the global internet, and they are met online by a growing pandaemonium of intermediaries that specialize in better ways to handle packets.

While the internet is alive with daemons of all kinds, this book focuses on a specific type: the internet daemons responsible for data flows. These daemons make the internet a medium of communication. Their constant, inhuman activity ensures every bit of a message, every packet, reaches its destination.

Where internet researchers would ask who controls these daemons,[14] my book questions how these daemons control the internet. Programmers in the late 1960s introduced what I call daemons during the development of a kind of digital communication known as “packet switching,” which breaks a message down into smaller chunks (or packets) and transmits them separately. Packet switching has numerous origins, and this book focuses on the work of the U.S. government’s Advanced Research Projects Agency. At ARPA, researchers tasked daemons with managing flows of packets, an influence that I call “flow control.” Daemons read packets, identify their contents and type of network, and then vary the conditions of transmission based on the network’s needs, their programming, and the goals of those who program them.
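The basic idea of packet switching can be sketched in a few lines of Python, assuming a plain-text message and a fixed packet size (both hypothetical choices). The message is broken into numbered chunks, the chunks may arrive in any order, and the receiver uses the sequence numbers to put them back together. Real packets also carry headers with addresses and other control information that this sketch leaves out.

```python
import random


def packetize(message: str, size: int) -> list[tuple[int, str]]:
    """Break a message into numbered packets of at most `size` characters."""
    return [(seq, message[i:i + size]) for seq, i in enumerate(range(0, len(message), size))]


def reassemble(packets: list[tuple[int, str]]) -> str:
    """Restore the message by sorting on sequence numbers, whatever the arrival order."""
    return "".join(chunk for _, chunk in sorted(packets))


packets = packetize("HELLO ARPANET", 4)
print(packets)  # [(0, 'HELL'), (1, 'O AR'), (2, 'PANE'), (3, 'T')]
random.shuffle(packets)  # packets travel separately and may arrive out of order
print(reassemble(packets))  # HELLO ARPANET
```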

Daemons flourished as ARPA’s experimental packet-switching digital communication system, ARPANET, expanded to be part of today’s internet. Their inhuman intelligence spread through the global infrastructure. Internet daemons and their flow control allow the internet to be a network of networks, a multimedia medium. Streaming, real-time, on-demand, P2P, broadcasting, telephony—all of these networks coexist online. Such diversity is possible thanks to internet daemons’ ability to vary rates of transmission and create different networks as distinct assemblages of time and space. The internet can be a broadcast network, a telecommunication network, and an information service all at the same time because of daemons.

Internet daemons have grown more intelligent since the internet’s inception, and the free-for-all of internet usage increasingly falls under their purposive “daemonic optimization,” as I call it. Whereas early daemons used their flow control to prove the viability of packet switching, now they produce and assign different rates of transmission, subtly altering the rhythms of networks. Daemons can promote and delay different packets simultaneously, speeding up some networks while slowing down others. But it’s not just about being fast or slow; it’s about how networks perform in relation to one another. A network might seem delayed rather than reliable because flow control allocates bandwidth unevenly. Thinking about optimization requires taking seriously science fiction writer William Gibson’s claim that “the future has arrived—it’s just not evenly distributed yet.”[15] Daemonic optimization occurs across the multiple networks of the internet. It allows for uneven communication by creating a system that places some nodes in the future while relegating others to the past, and it now marshals the once-improvisational network of networks under a common conductor. Through their flow control, internet daemons influence the success and failure of networks and change habits of online communication.
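One common way for a daemon to promote some packets and delay others is strict priority queueing. The sketch below, in Python, is a toy scheduler rather than any ISP’s configuration; the traffic classes (0 for gaming, 1 for web, 2 for P2P) are hypothetical labels chosen for illustration.

```python
import heapq
from itertools import count


class PriorityScheduler:
    """Toy strict-priority scheduler: lower class numbers are always sent first."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._arrivals = count()  # tie-breaker keeps first-in, first-out order within a class

    def enqueue(self, packet: str, traffic_class: int) -> None:
        heapq.heappush(self._heap, (traffic_class, next(self._arrivals), packet))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


# Hypothetical classes: 0 = gaming, 1 = web, 2 = P2P.
scheduler = PriorityScheduler()
for packet, traffic_class in [("p2p-1", 2), ("web-1", 1), ("game-1", 0), ("p2p-2", 2), ("web-2", 1)]:
    scheduler.enqueue(packet, traffic_class)

order = [scheduler.dequeue() for _ in range(len(scheduler))]
print(order)  # ['game-1', 'web-1', 'web-2', 'p2p-1', 'p2p-2']
```

Every packet eventually leaves the queue, but the P2P packets leave only after all waiting higher-priority packets, which is how a network can seem slow without ever being blocked.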

As the internet grows more complex and crowded, as its infrastructure becomes overworked or oversubscribed, daemonic optimization has become a technological fix for network owners and ISPs. Since daemons decide how to assign and use finite network resources, their choices influence which networks succeed and which fail, subtly allocating resources while appearing to maintain the internet’s openness and diversity. Networks depend on instant and reliable rates of transmission to function. Modern computing increasingly relies on the “cloud,” where people store their data in distant servers instead of on their home computers.[16] Cloud computing, streaming, downloading, and P2P applications tenuously coexist on limited internet infrastructure. Congestion, delays, lag, and service outages disrupt networking. No one watches a movie that is always buffering. Gamers lose due to lag. Websites succeed only if they load instantly.

Daemonic optimizations matter because they affect how we communicate and participate in contemporary culture, and their impact is not limited to marginal media players like eMule. The Canadian Broadcasting Corporation (CBC), for instance, felt the consequences of being deprioritized during its experiments with the P2P BitTorrent protocol. After distributing its show Canada’s Next Great Prime Minister using the protocol, the CBC discovered that its audience had largely given up in frustration after the supposedly short download took hours due to Canadian ISPs throttling P2P traffic.[17] The CBC eventually stopped experimenting with BitTorrent. Across the world, delayed downloads are an unfortunate but now common effect of internet daemons’ influence. In the United States, Comcast not only throttled BitTorrent but also entered into partnership with Microsoft to privilege its Xbox Live Gold service.[18] In Europe, 49 percent of ISPs employed some sort of traffic management on their networks.[19] All over the world, daemons are changing how the internet communicates.

This book analyzes these daemons, their flow control, and their optimizations within this global system of communication. Over its seven chapters, I analyze daemons’ flow control from its beginnings in ARPANET to our contemporary moment, when pirates and policy makers struggle over its influence. In what immediately follows, I introduce the core aspects of my daemonic media studies, specifically the origin of the daemon and my approach to studying its operations, and then move to an overview of the book.

An Introduction to Daemonic Media Studies

What is a daemon? Linux users—present company included—might assert that the term applies only to background programs in an operating system. While there is truth to that claim, I argue that the term is too productive, too evocative, and too much a part of computer history to live only behind the command prompt. (Perhaps the same could be said for operating systems too.) I am not the first to think of the internet as possessed by daemons. Architecture theorist Branden Hookway uses the metaphor of Pandaemonium, John Milton’s capital city of hell in Paradise Lost, to imagine the hidden controls in modern design.[20] Sandra Braman, information policy expert, applies Hookway’s ideas directly to the internet.[21] Daemons have inspired work outside of academia too. Best-selling science-fiction author Daniel Suarez titled his first book Daemon, after the computer program that haunts his cyberthriller.[22] All these works in their own way use the concept of the daemon to understand the software built into today’s information infrastructures.

Internet daemons, in my definition, are the software programs that control the data flows in the internet’s infrastructures. If scholars in communication studies and science and technology studies share a common interest in how media and information technologies form “the backbone of social, economic and cultural life in many societies,”[23] then daemons are vital to understanding the internet’s backbone. Daemons function as the background for the material, symbolic, cultural, or communicative processes happening online.

A daemonic media studies builds on Wendy Chun’s seminal work by looking at those programs between the hardware and the user interface. Chun consciously plays with the daemon’s spectral and digital connotations in order to question notions of open code and transparent interfaces in software studies. Source code, the written program of software, is a fetish, a magical source of causality too often seen as a blueprint for the computer. Code creates another source, the user. Chun writes: “Real-time processes, in other words, make the user the ‘source’ of the action, but only by orphaning those processes without which there could be no user. By making the interface ‘transparent’ or ‘rational,’ one creates daemons.”[24] Daemons are those processes that have been banished, like the residents of Milton’s Pandaemonium, from a user-centered model of computing. The daemon haunts the interface and the source code but is captured by neither. This book responds to Chun’s call to understand daemons “through [their] own logics of ‘calculation’ or ‘command.’”[25]

Daemons run on home computers and routers, servers and core infrastructure, and particularly the “middleboxes” between them that are usually installed in the infrastructure of internet service providers. They are everywhere. So much so that Braman has called the internet “pandemonic”[26] because:

It is ubiquitously filled with information that makes things happen in ways that are often invisible, incomprehensible, and/or beyond human control—the “demonic” in the classic sense of nonhuman agency, and the “pan” because this agency is everywhere.[27]

The internet might also be called Pandaemonium: daemons occupy a seat of power online, just as Satan sat upon the capital’s throne. And as Satan ruled his lesser demons, some internet daemons rule, while others follow.

Such a lively sense of the internet aligns with Jane Bennett’s vibrant materialism, which draws on Gilles Deleuze and Félix Guattari to think of infrastructures as assemblages and emphasizes the interconnectedness of technical systems. This book shares her interest in thinking through infrastructure by means of materialism and assemblage theory. Bennett described the 2003 North American electrical blackout in her own study of infrastructure. For reasons still not entirely known, the grid shut down. To understand this event, Bennett thinks of the power grid as:

a volatile mix of coal, sweat, electromagnetic fields, computer programs, electron streams, profit motives, heat, lifestyles, nuclear fuel, plastic, fantasies of mastery, static, legislation, water, economic theory, wire, and wood—to name just some of the actants.[28]

To Bennett, this volatile mixture has a “distributive agency” that is “not governed by any central head.” My task is to understand the “agency of the assemblage,” not of just one part.[29] Daemons offer a way to embrace the internet as a volatile, living mixture and to think about infrastructure without overstating the “fixed stability of materiality.”[30] Daemons belong to the distributive agency that enables internet communication, the millions of different programs running from client to server that enable a packet to be transmitted.

Another vision of Pandaemonium aids our analysis of this volatile mixture and introduces my approach more fully. Oliver Selfridge imagined a digital world filled with what he referred to as “demons.” He was an early researcher in artificial intelligence and part of the cybernetics community at MIT. Like others there, he had an interest in daemons.[31] Perhaps his infatuation with daemons started when he worked as a research assistant for Wiener at MIT.[32] Perhaps James Maxwell had an influence. Whatever the cause, Selfridge described an early machine-learning program for pattern recognition as a “demoniac assembly” in a paper presented at the influential 1958 symposium “Mechanisation of Thought Processes” held at the National Physical Laboratory.[33] During an event now viewed as foundational to artificial intelligence and neurocomputing, Selfridge explained how a program could recognize Morse code or letters. Selfridge’s work matters to the history of artificial intelligence in its own right, but I borrow his approach here primarily to outline the daemonic media studies used throughout this book. Just as his speculative design inspired future research in artificial intelligence, Selfridge’s program titled “Pandemonium” captures the “artificial intelligence” of today’s internet daemons.

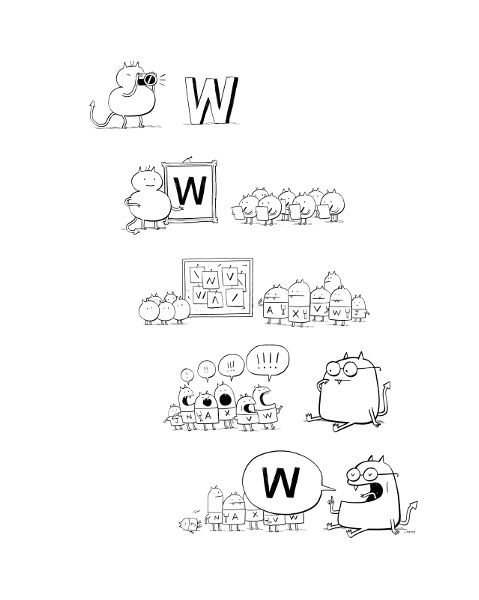

Selfridge’s Pandemonium, illustrated in Figure 1, described how a computer program could recognize letters.[34] He broke each task down by function and referred to them as demons. These demons cooperated by shrieking at each other, forming a “screaming chorus,”[35] a noise that inspired his name for the program.

To observers, Selfridge’s Pandemonium is a black box: letters are inputted and digital characters are outputted. Inside, a frenzied demoniac assembly turns the signal into data. The process of recognizing the letter “W” begins with “data demons,” who, according to Selfridge, “serve merely to store and pass on the data.”[36] These demons convert light signals into binary data and pass it onward. Next, “computational” demons “perform certain more or less complicated computations on the data and pass the results of these up to the next level.”[37] A computational demon looks at the data to identify patterns and then passes those patterns to “cognitive” demons. Selfridge imagined numerous cognitive demons, one for each letter of the alphabet. How a cognitive demon identifies its letter varies, and the process evolves through machine learning. Selfridge noted: “It is possible also to phrase it so that the [letter demon] is computing the distance in some phase of the image from some ideal [letter]; it seems to me unnecessarily platonic to postulate the existence of ‘ideal’ representatives of patterns, and, indeed, there are often good reasons for not doing so.”[38] Without being too platonic, then, a cognitive demon shrieks when it finds a pattern that matches its letter. The better the match, the louder the shriek, and “from all the shrieks the highest level demon of all, the Decision Demon, merely selects the loudest.”[39] The decision demon then outputs the correct letter, “W,” to end the process.
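Selfridge’s division of labor can be sketched as a toy program in Python. This is not Selfridge’s 1958 program: the stroke encoding of letters, the four features, and the hand-picked weights are all assumptions made for illustration. Following his account, a data demon stores the input, computational demons compute features from it, each cognitive demon shrieks with a loudness given here by a weighted sum of those features, and the decision demon selects the loudest shriek. In Selfridge’s design the cognitive demons would improve through learning; here their weights are simply fixed by hand.

```python
# Hypothetical stroke encoding of a letter: "|" vertical, "-" horizontal, "/" and "\\" diagonals.


def data_demon(strokes: list[str]) -> list[str]:
    """Store the input and pass it on unchanged."""
    return list(strokes)


# Computational demons: each computes one feature from the stored data.
COMPUTATIONAL_DEMONS = {
    "vertical": lambda s: s.count("|"),
    "horizontal": lambda s: s.count("-"),
    "diagonal": lambda s: s.count("/") + s.count("\\"),
    "many_diagonals": lambda s: 1 if s.count("/") + s.count("\\") >= 4 else 0,
}

# Cognitive demons: one per letter, each weighting the features (toy weights, set by hand).
COGNITIVE_DEMONS = {
    "W": {"vertical": -1, "horizontal": -1, "diagonal": 0.5, "many_diagonals": 3},
    "V": {"vertical": -1, "horizontal": -1, "diagonal": 1, "many_diagonals": -3},
    "A": {"vertical": -1, "horizontal": 2, "diagonal": 0.5, "many_diagonals": -3},
    "N": {"vertical": 1.5, "horizontal": -1, "diagonal": 0.5, "many_diagonals": -3},
}


def shriek(weights: dict[str, float], features: dict[str, int]) -> float:
    """A cognitive demon's loudness: a weighted sum of the computed features."""
    return sum(weights[name] * value for name, value in features.items())


def decision_demon(shrieks: dict[str, float]) -> str:
    """Merely select the loudest shriek."""
    return max(shrieks, key=shrieks.get)


def pandemonium(strokes: list[str]) -> tuple[str, dict[str, float]]:
    data = data_demon(strokes)
    features = {name: demon(data) for name, demon in COMPUTATIONAL_DEMONS.items()}
    shrieks = {letter: shriek(w, features) for letter, w in COGNITIVE_DEMONS.items()}
    return decision_demon(shrieks), shrieks


letter, shrieks = pandemonium(["\\", "/", "\\", "/"])
print(letter)  # W
```

Every cognitive demon shrieks at every input; what distinguishes “W” is only that its demon is loudest when four diagonal strokes arrive.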

How Selfridge describes his Pandemonium offers a template for daemonic media studies. The approach begins with an attention to daemons and their specific functions. Together, internet daemons enact flow control. Daemonic media studies require attending both to the work of each daemon and to their overall effect. For all the talk of a demoniac assembly, Selfridge provided a very well-ordered diagram in his proposal. As I will discuss in chapter 3, daemonic media studies question the arrangement of daemons, the locations both conceptual and physical that they occupy in an infrastructure. Selfridge’s demons have a specific goal in mind: being the most efficient letter-recognizing machine imagined. Each of his demons works in their small way to achieve this optimal outcome. Internet daemons also labor to optimize internet communications. Daemonic media studies, finally, question the distributive agency of daemons to enact optimizations.

Figure 1. Oliver Selfridge’s Pandemonium recognizing the letter “W.” Courtesy of John Martz.

Daemoniac Assemblies

Selfridge instructs my daemonic media studies first by calling attention to the programs themselves. He describes the specific composition or anatomy of his demons, looking at how their individual designs relate to their specific functions. Bennett makes a comparable observation about the electrical grid: “different materialities . . . will express different powers.”[40] Each category of Selfridge’s demons is programmed and designed differently and, therefore, boasts distinct capabilities. An “image” demon’s wide eyes aid in translating a letter into information used by other demons; it encodes the letter but does not interpret it. Cognitive demons interpret signals as letters. Selfridge also believed that demons could evolve and develop better ways of completing their tasks over time. Cognitive demons, through competition, would evolve to outperform each other.

Selfridge’s Pandemonium was a lively place with demons quickly spawning to solve the problems they encountered in letter recognition. Selfridge could be said to daemonize the work of alphabetic reading by breaking the job into discrete tasks and assigning each task to a specific demon. For problems with multiple possible answers, like feature recognition, Selfridge usually proposed creating more demons. As historian of artificial intelligence Margaret Boden explains, “a relatively useless demon might be removed” and “a new demon might be produced by conjugating two [other demons],” or “a single demon might be randomly ‘mutated,’ and the best survivor retained.”[41]

Selfridge, then, orients my perspective on the way the internet has been daemonized. How have tasks been broken down into discrete daemons? How have daemons been proposed as a solution to technical issues? How does one daemon beget another when its initial task exceeds its capabilities? The internet, as I will discuss in chapters 1 and 2, started with daemons, and these daemons have proliferated, as we will see in chapters 3 and 4.

Daemonizing relates to critical media studies’ concept of “reification,” which refers to “a process whereby social phenomena take on the appearance of things.”[42] This Marxist term was originally used to describe how, through the process of commodification, the complexity of labor relations becomes obfuscated by the commodity. Beyond appearing like things, reification online creates things, an internet of them. Reification is also at work in my choice to focus on internet daemons rather than those who program them. Approaches from the study of computing bifurcate into studies looking at human coders, on the one hand, and studies focusing on the materiality of code, software, and algorithms, on the other. (The latter could also be seen as part of the “nonhuman turn.”)[43]

Daemons can be described through their materiality, or more specifically through the code and its algorithms. An algorithm is “any well-defined computational procedure that takes some value, or set of values, as input and produces some value, or set of values, as output.”[44] Algorithms usually solve well-known problems like how to order a queue, which route a traveling salesman should take, or how to sort different sound lengths. For example, Selfridge thought that cognitive demons calculating certainty faced a hill-climbing problem. Like “a blind man trying to climb a hill,” how does a demon know when it has reached the highest point, the most certain answer?[45] An algorithm could solve this problem.
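Selfridge’s blind climber can be sketched as a simple hill-climbing loop in Python. The scoring function below, a single hill peaking at x = 2, is a hypothetical stand-in for a demon’s certainty, and the step size and step budget are arbitrary choices. The climber only ever compares where it stands with a random nearby point, so it never actually knows it has reached the top; it simply stops after a fixed number of tries.

```python
import random


def hill_climb(score, start: float, step: float = 0.1, max_steps: int = 10_000, seed: int = 0):
    """Blind hill climbing: try a random nearby point and move only if it scores higher."""
    rng = random.Random(seed)
    current, current_score = start, score(start)
    for _ in range(max_steps):
        candidate = current + rng.uniform(-step, step)
        candidate_score = score(candidate)
        if candidate_score > current_score:
            current, current_score = candidate, candidate_score
    # The climber never "knows" it is at the summit; it simply stops after max_steps.
    return current, current_score


# Hypothetical certainty landscape: a single smooth hill whose peak is at x = 2.
peak, height = hill_climb(lambda x: -(x - 2) ** 2, start=0.0)
print(f"peak near x = {peak:.3f}")
```

On a landscape with several hills, the same loop can settle on a lower, local peak, which is part of what makes Selfridge’s question difficult.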

Internet daemons implement algorithms, many of which address known problems in computer science. For example, what is the best route to move bits across the internet? This is an old problem and goes by various names. Computer scientists have described it as a problem of flow in networks, while it is considered a transportation logistics problem in operations research.[46] Lester Randolph Ford Jr. and Delbert Ray Fulkerson, both working at the RAND Corporation at the time, defined the problem of routing as follows:

Consider a rail network connecting two cities by way of a number of intermediate cities, where each link of the network has a number assigned to it representing its capacity. Assuming a steady state condition, find a maximal flow from one given city to the other.[47]

They proposed the Ford–Fulkerson algorithm as one solution to this problem. Modeling the railway network as a graph, the algorithm repeatedly finds a route with spare capacity from one city to the other and sends more traffic along it until no such route remains, at which point the flow is maximal. A related procedure for calculating the shortest paths between cities, sometimes also credited to Ford and Fulkerson and now usually called the Bellman–Ford algorithm, was implemented by early internet daemons, as will be discussed, in what became known as “distance vector routing.” Other daemons implement algorithms to manage queues or find the best way to classify traffic. Programmers code their own implementations, which run as part of the software program or daemon.
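The Ford–Fulkerson method can be sketched in Python for a hypothetical four-city rail network whose capacities are invented for illustration. This version searches for routes breadth-first, a later refinement now known as the Edmonds–Karp algorithm. Each pass finds a route with spare capacity from the source city to the destination, sends as much flow along it as its tightest link allows, and records reverse capacity so that later passes can reroute earlier flow; when no such route remains, the flow is maximal.

```python
from collections import deque


def max_flow(capacity: dict[str, dict[str, int]], source: str, sink: str) -> int:
    """Ford–Fulkerson method with breadth-first augmenting paths (Edmonds–Karp variant).

    capacity maps each city to {neighboring city: link capacity} for directed links.
    """
    # Residual capacities, including reverse links that start at zero.
    residual = {u: dict(nbrs) for u, nbrs in capacity.items()}
    for u, nbrs in capacity.items():
        for v in nbrs:
            residual.setdefault(v, {}).setdefault(u, 0)
    flow = 0
    while True:
        # Breadth-first search for a route with spare capacity from source to sink.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow  # no augmenting route left: the flow is maximal
        # Find the tightest link (bottleneck) along the route.
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            u = parent[v]
            bottleneck = min(bottleneck, residual[u][v])
            v = u
        # Push the bottleneck amount along the route and open reverse capacity.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


# Hypothetical rail network: capacities are invented for illustration.
rail = {
    "A": {"B": 10, "C": 10},
    "B": {"C": 2, "D": 4},
    "C": {"D": 9},
    "D": {},
}
print(max_flow(rail, "A", "D"))  # 13
```

In this example the two links into city D, with capacities 4 and 9, form the bottleneck, so no arrangement of traffic can move more than 13 units from A to D.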

In contrast to the tremendous work already done in the sociology of algorithms, daemonic media studies focus on the materiality of daemons. Daemons possess the internet, inhabit its messy pipes, and make the best communication system they can imagine. Humans are there every step of the way—intervening most often to fix or break the system—but daemons have to be given their due too.

Diagrams: The Ley Lines of the Internet

Daemonic media studies analyze the complex configurations that organize and influence the distributed work of daemons. Selfridge’s demons cooperated in a certain layout. Indeed, he describes his Pandemonium as an “architecture”: it fixes and outlines both the interactions between demons and their functions in the overall program. The decision demon relies on the cognitive demon, who, in turn, relies on the computational demon. Multiple demons work on the same problem. Cognitive demons can work on different words or the same words at the same time. Selfridge’s approach foreshadows parallel processing in computer science, but it also illustrates the complex designs used to implement distributive agency.

I address this configuration of daemons through the concept of the “diagram.” The diagram, a concept popularized by Michel Foucault, Gilles Deleuze, and Alexander Galloway, describes “a map of power—diagrammatics is the cartography of strategies of power.”[48] Selfridge’s Pandemonium has a diagram, cartoonishly approximated in Figure 1, and similar diagrams exist for the internet. For Deleuze, the diagram constructs the real,[49] and these internet diagrams likewise help compose the built infrastructure, guiding the design of cables and switches and edges and cores.

Diagrams arrange daemons and an infrastructure’s flows of information, influencing flow control in two ways. First, diagrams arrange how daemons manipulate the flows of packets, stipulating where they can intervene. Second, diagrams influence how daemons share information among themselves. The overall composition is uneven, which is another point shared by Bennett, who suggests that “power is not distributed equally across [an assemblage’s] surface.”[50] Instead, diagrams create a hierarchy between daemons. If Pandaemonium served as the capital of hell, then some daemon sits on the throne while others sit at its feet. In Selfridge’s Pandemonium, the decision demon has the final word. The same applies to internet daemons, with some being more influential than others, occupying the space of flows, so to speak.

Diagrams not only arrange daemons but also create abstract locations in which to conjure new ones. In a daemonic media studies, the diagram conceptually prefigures daemonizing by creating spaces and possibilities for daemons to occupy. The evolving diagram of the internet, described in chapter 3, enables new daemons to occupy the infrastructure. ARPANET, for example, proposed using computers in the infrastructure. Its diagrams created the possibility for computers, known as Interface Message Processors (IMPs), to be built and run in its infrastructure. Other diagrams led to more and more possibilities for daemons. Importantly, however, the diagram does not determine subsequent daemons in such a way that daemons become unimportant. As will be discussed, daemons interpret their own functions, locations, and algorithms.

Daemonic Optimization

Finally, Selfridge’s Pandemonium illustrates distributive agency under control. As communication scholar Ian Roderick writes, distributive agency in Selfridge’s Pandemonium worked through “the delegation of tasks amongst disparate actors working individually in a piecemeal fashion to produce more complex patterns of behavior.”[51] Such distributed agency, which Galloway called “protocological,” now seems commonplace, but at a time of large monolithic computers and batch processing, Selfridge was an early inspiration for ideas about computing in the form of distributed, multiagent systems. Even beyond Selfridge’s own personal importance in the history of artificial intelligence, his Pandemonium is significant for encapsulating the design decisions of early packet switching. The communication system functioned by allocating work to individual programs whose collective action kept the system running.

Internet daemons collaborate to enact what I have been calling “flow control.” The virtual work of daemons is integral to the actual conditions of online communication, which their distributive agency enables. By identifying packets and contextualizing these bits into networks, daemons can vary the conditions of transmission with greater granularity. Some daemons specialize in packet inspection; others manage the selection of routes or allocate bandwidth. A few daemons attempt to manage the overall state of the system. Together, packet by packet, daemons create the conditions of possibility for communication.
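Route selection by distance vector can be sketched in Python. Each node keeps a table of its best-known cost to every destination and repeatedly updates it from the tables its neighbors advertise, a distributed version of the Bellman–Ford shortest-path computation. The four-node network and its link costs are hypothetical, and the sketch simulates every node in one loop rather than exchanging messages, ignoring link failures and the other problems real routing protocols must handle.

```python
INF = float("inf")


def distance_vector(links: dict[str, dict[str, float]]):
    """Toy distance-vector routing, simulated in one process.

    links maps each node to {neighbor: link cost}; costs are assumed positive and symmetric.
    Returns (dist, next_hop): each node's best-known cost to, and first hop toward, every node.
    """
    nodes = list(links)
    # Each node starts out knowing only that it can reach itself at zero cost.
    dist = {u: {v: (0 if u == v else INF) for v in nodes} for u in nodes}
    next_hop = {u: {u: u} for u in nodes}
    changed = True
    while changed:  # keep exchanging tables until no node learns anything new
        changed = False
        for u in nodes:
            for neighbor, cost in links[u].items():
                # The neighbor "advertises" its table; u keeps any route that is cheaper via it.
                for dest, d in dist[neighbor].items():
                    if cost + d < dist[u][dest]:
                        dist[u][dest] = cost + d
                        next_hop[u][dest] = neighbor
                        changed = True
    return dist, next_hop


# Hypothetical four-node network with symmetric link costs.
links = {
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 2, "D": 7},
    "C": {"A": 4, "B": 2, "D": 3},
    "D": {"B": 7, "C": 3},
}
dist, next_hop = distance_vector(links)
print(dist["A"]["D"], next_hop["A"]["D"])  # 6 B
```

Node A reaches D at a cost of 6 by handing packets to B, even though A has a direct link to C. No node sees the whole map; each knows only what its neighbors advertise.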

My use of “control” deliberately situates this book in ongoing discussions about communication and control. My theorization of control begins with Deleuze, though I also draw on a broader literature that ranges from James Beniger to Wiener. Deleuze notices subtle mechanisms of power overtaking Foucauldian disciplinary societies and ushering in what he calls “societies of control,”[52] and he describes emerging mechanisms[53] that modulate or change dynamically to adapt to varied inputs or tactics. He gives the example of a city pass card that varies entry depending on the time of day: “What counts is not the barrier but the computer that tracks each person’s position—licit or illicit—and effects a universal modulation.”[54] The concept of control, then, orients critical inquiry toward the more immanent properties of a system that define the limits of freedom, and it invites questions about the modulations of control that establish these limits. Subsequently, Galloway focuses on the internet protocol suite as the key mechanism of control in decentralized networks (as well as an example of a “protocological” society).[55] More recently, Scott Lash reaches similar conclusions when he advocates a shift in cultural studies from focusing on hegemony and its tendency toward epistemology to a posthegemonic age emphasizing ontology. The study of ontology in a “society of pervasive media and ubiquitous coding” should focus on “algorithmic or generative rules” that are “virtuals that generate a whole variety of actuals.”[56] The modulations of control might be seen as the generative rules that allow for communication.

Flow control serves a higher power: it works to realize an optimal state or optimality for the network of networks. Daemons, in other words, optimize a communication infrastructure shared by many networks with their own demands. In engineering, optimization refers to “the process of making [something] as functional, effective, or useful as possible,” as well as to “the mechanisms utilized towards these objectives of improved performance.”[57] Optimization “problems” refer to the challenge of formulating the problem and composing an algorithm to solve that problem in the most effective manner possible.[58] Etymologically, “optimization” derives from the same root as “optimism.” To optimize is to be optimistic, to believe in an ideal solution. These problems and hopes exist throughout engineering, ranging from finding the optimum layout of transistors on a computer chip to solving complex organizational issues, such as deciding the best way to route telephone calls to make the most of a limited number of lines.[59] Frederick Taylor’s studies into scientific management sought, for example, the optimal shovel load to maximize the labor extracted from the worker.[60] Donna Haraway, in her “Cyborg Manifesto,” describes a move in communication sciences toward “the translation of the world into a problem of coding, a search for a common language in which all resistance to instrumental control disappears and all heterogeneity can be submitted to disassembly, reassembly, investment and exchange.”[61] Haraway’s historical note signals the broader intellectual currents that led to internet daemons. The evolution of the internet involves the coding of information and the formulation of problems related to information flows. Internet daemons reflect and enact an optimism that there might be an optimal way to organize an increasingly ubiquitous medium like the internet.

In this book, I intentionally blur the lines between optimization and the sociopolitical. The daemons encountered in this book were developed and designed to solve the challenge of optimizing communication in a network of networks. As ARPANET developed, it gave rise to optimization problems for the packet-switching model of communication. These problems developed out of utopian ideas from the likes of J. C. R. Licklider, who imagined a new era of “man–computer symbiosis.” Licklider’s optimism manifested as optimization problems. Kleinrock described packet switching as an optimal solution to the problem of poorly utilized network resources. If “a privately owned automobile is usually a waste of money” because “perhaps [90] percent of the time it is idly parked and not in use,” then a private network is also inefficient.[62] Packet switching was an optimal solution because it created a common data carrier, which maximized resource utilization. This study of internet daemons is one investigation into an optimization problem, unpacking the ways that computer programs have tried to solve deeply social and political questions about how to share a communication infrastructure between various networks competing for limited resources.

As I explain throughout the book, advances in optimization do not necessarily include improved ways of deciding the optimal. Better control does not lead to better governance. Rather, optimization recasts complex, often political, problems of managing the internet as technical ones.[63] Take, for example, how Leonard Kleinrock, a central figure in the development of ARPANET, described the flow control problem: “the problem of allocating network resources to the demands placed upon those resources by the user population.” In Kleinrock’s view, this was a strictly technical problem, distinct “from the ‘softer’ social, political, legal and ecological problems.”[64] This early appearance of the desire to separate the technical from the political and social recurs in contemporary network neutrality debates. Even the now-defunct FCC report on “Protecting and Promoting the Open Internet” noted, “in order to optimize the end-user experience, broadband providers must be permitted to engage in reasonable network management practices.”[65]

Overall, daemonic media studies contributes to the study of media infrastructures, a field that calls for “interdisciplinary engagements” that explore “issues of scale, difference and unevenness, relationality, labor, maintenance and repair, literacy, and affect.”[66] More specifically, examining the internet as infrastructure aligns with what information and communications scholar Christian Sandvig calls “new materialism,” an approach that revels in the forgotten importance of “roads, power systems, and communication networks; wires, signals, and dirt.”[67] The daemon provides one way to navigate the software side of infrastructure within this new materialism.

The Structure of the Book: A Study of Internet Daemons and Flow Control

Over its seven chapters, this book elaborates these different components of daemonic media studies. A natural starting place would be: how did demons become associated with computers in the first place? The first chapter explores the intellectual impact of a physicist’s thought experiment known as “Maxwell’s demon” on early computers and digital communication. The legacy of Maxwell’s demon is complex and multifaceted, and interested readers can find a good introduction to it in the work of Philip Mirowski and Katherine Hayles.[68] Within the sciences, James Maxwell’s idea inspired great debate that threatened the foundation of thermodynamic theory. Rather than exorcising the demon, these debates culminated in a new theory of information, within which the demon symbolized the idea of a general information processor. Maxwell’s demon became part of a larger trend known as the cyborg sciences, which refract many disciplines through computers and computational modeling.[69] Game theory, operations research, information theory, and cybernetics all might be seen as cyborg sciences. These approaches transformed economics, the study of gender, urban planning, communications, and possibly political science. A complete history of the cyborg sciences can be found elsewhere.[70] A connecting thread among these works is the idea that Maxwell’s demon contributed to the conceptual shifts that animated the development of systems of control, digital networks, and computers.

The first chapter of the present book tracks this shift from demons to daemons by exploring how Maxwell’s demon inspired digital communication and control: early computer networks relied on innovative digital computer infrastructures to enable these new forms of communication, and in these infrastructures, the demon made a leap from being an imaginary figure to being a real program running in an operating system. Maxwell’s demon, finally, offers a way to understand the constant work that programs do to keep infrastructures online. Researchers at MIT were, in fact, the first to make this connection, calling their programs daemons as a nod to Maxwell.

The second chapter traces the materialization of daemons at the Information Processing Techniques Office (IPTO), a part of the U.S. government’s ARPA. While research into packet-switching communication was global, ARPANET was a key point of convergence. Early researchers associated with IPTO sought new digital-first communication channels to better share limited computer resources. This research led to the development of packet switching, the model for internet communication, and to the belief that embedding computers in the infrastructure was the best way to build a packet-switched communication system. These computers would eventually host specific programs managing digital communication—the first internet daemons. The chapter pays special attention to Donald Davies, one of the inventors of packet switching. He described packet switching as nonsynchronous communication, which means that the underlying infrastructure allows for many networks simultaneously. This capacity would allow the internet to function as a multimedia medium, a network of networks. To achieve nonsynchronous communication, researchers involved with IPTO built ARPANET’s communication infrastructure out of computers. These Interface Message Processors, the IMPs, were the first internet daemons. IMPs had the difficult task of creating the optimal conditions of transmission in this early network of networks.

The third chapter follows the proliferation of daemons by tracing the evolution of packet switching and computer networking. I use the concept of a diagram to conceptualize the nascent internet’s shifting abstract arrangements of space and power. Changing diagrams gave daemons new roles to fill, gradually opening positions for daemons to act at the edges, middle, and core of the emerging internet. The growing complexity of packet-switched communication enabled the internet to act as an infrastructure that supports more than one type of computer network. The internet is significant for being able to provision multiple networks, including home-brewed electronic bulletin boards, early computer discussion groups, and a pirate underground. All these networks converged on the internet’s infrastructure, making the life of daemons difficult as they had to decide how best to manage all these networks.

If the internet is full of daemons, what do they do? The fourth chapter journeys deeper into their world. I use the metaphor of the congress of demons, Pandaemonium, drawn from computer science, to describe an infrastructure filled with daemons. The chapter tours the internet as Pandaemonium in two parts. The first unpacks the technical operation of today’s internet daemons. Daemons inspect, route, and queue packets, as well as coordinate with each other. The second part returns to the problem of daemonic optimization to more closely examine two specific kinds of optimization at work in the contemporary internet: nonsynchronous and polychronous. These optimizations resonate with concerns around the internet’s political economy and governance (often related to the idea of network neutrality), but daemons have an autonomy from regulations and even their owners. This second part explores this world of daemons through a more thorough discussion of the case of Comcast. This tour of the internet’s Pandaemonium introduces us to the daemons running P2P networks, sharing cable lines, and optimizing the internet to avoid congestion. It reveals the current operations of polychronous optimization and explores some of its future possibilities.

All this attention to internet daemons might make us miss the feelings they inspire in users. What is the experience of being delayed? What does it mean to suffer from buffering? In the fifth chapter, I theorize the affective influence of daemons and how ISPs use advertising to articulate these affects into structures of feeling. Flow control exerts an affective influence by disrupting the rhythm of a network, frustrating users and interrupting their attention. Polychronous optimizations create broad structures of feeling with uneven distributions of anxiety and exuberance. The resulting modulations (prioritized and demoted, instant and delayed, and so on) have a diverse and often deliberate affective influence that manifests as valuable feelings sold by ISPs. Taken together, the five commercials analyzed in this chapter map out the emotional relations between users and the internet, from the initial feelings caused by flow control, to their more deliberate use, to a reconsideration of the desires that keep people under the spell of internet daemons.

What can be done about this daemonic influence? The sixth chapter explores the tactics associated with The Pirate Bay (TPB) and the Swedish propiracy movement. Their commitment to an internet without a center prompted them to find ways to elude flow control. In this chapter I analyze two of their tactics: first, an approach of acceleration focused on decentralization and popularization and, second, an escalation of tactics through the use of virtual private networks (VPNs). Their activities represent another side in the net-neutrality debate, one put forward not by policy makers but by “hacktivists” and pirates who seek to protect and foster their own competing vision of the internet.

How can daemons be made more evident? Given their influence, how can their work be rendered public and perhaps governed? The seventh chapter explores how publics form and learn about daemons. Much of the controversy surrounding traffic management originates with publics. As seen above, Comcast’s interference with BitTorrent traffic came to light only after hackers analyzed their packets and discovered the invisible work of daemons. In this chapter, I draw on a case from Canada to explore one of the first examples of network neutrality enforcement. This chapter tells the story of Canadian gamers affected by flow control and their two-year journey toward resolution. In the process, they demonstrated the viability of John Dewey’s theory of “publics” as a basis for further research investigating both daemons and other digital media.

I conclude with a look toward the future of daemons. What could it mean to embrace the daemon as an analytical tool to study digital media? My conclusion offers a summary of some of the key points found in this book. I also end on a more speculative note as I consider the role of daemons in larger operating systems. How does their flow control cooperate with other kinds of control? How does this ecology of control enable complex systems online today? Daemons, I argue, offer a first step toward understanding these networked operating systems.

The book as a whole offers a different perspective on net neutrality. In the wider public, the issue of flow control has largely been addressed as a matter of network neutrality, a normative stance preventing carriers from discriminating on the basis of content.[71] Although net neutrality has emerged as the popular focus of the debate, it is only one answer to the problem posed by flow control. This book analyzes the root of the problem: the influence of internet daemons. That influence must be made more transparent so as to be better regulated, and in the pages that follow, I will explain how daemons, while they may never be neutral, can be made accountable.

My investigation into daemons further relates to ongoing concerns about algorithms as a distributed and dynamic form of social control. Algorithms, as a technical catchall, have become a focal point for accountability and discrimination in a digital society.[72] The study of daemons thus connects with a growing literature discussing the implications of algorithms for culture,[73] the public sphere,[74] journalism,[75] labor,[76] and theories of media power.[77] For example, Tarleton Gillespie, continuing his interest in digital control, investigates what he calls “public relevance” algorithms, which are involved in “selecting what information is considered most relevant to us, a crucial feature of our participation in public life.”[78] Algorithms also control public attention. Taina Bucher and Astrid Mager consider this designation of relevance in depth in their respective discussions of Facebook and Google.[79] Algorithms make certain people or posts visible or invisible to the user, which means that algorithmic media like Facebook influence matters of representation or inclusion. Martin Feuz, Matthew Fuller, and Felix Stalder investigated how Google’s algorithms tailor results depending on who is doing the searching.[80] Gillespie also stressed that algorithms calculate publics and opinions through defining trends or suggesting friends. Social activity, such as what is popular and whom to know, involves the subtle influence of algorithms and so bears on the link between material and symbolic processes that interests scholars of media and information technologies.

Internet daemons represent a specific instance of algorithmic power amid this general concern, and their power raises enduring questions about media, time, and communication that are complementary to worries about algorithmic and data discrimination. If media create shared spaces and times, then the internet daemon provides a new concept to describe multimedia communication and the power found in controlling the flows of information within digital infrastructure.

My hope in writing this book is that its study of the internet becomes a model for daemonic media studies, one that attends to the software side of media infrastructures.[81] My approach borrows the concept of the daemon from computer science, where it offers a novel figure for understanding the agency of software. Daemons keep the system running until they break down. They are a core technique of control at work in media infrastructure. At times mischievous, daemons also represent being out of control. Both their capacities and their limits make them useful for analyzing contemporary digital control. They are also a way to understand the organization of an infrastructure. Daemons are arranged not only in an infrastructure’s abstract diagram but also in its server rooms, control centers, and other points in physical space. These arrangements organize a control plane composed of autonomous daemons working in cooperation, following and frustrating each other. My approach attends to both these daemons and their organization as a kind of operating system that optimizes infrastructural activity. Daemons desire some state of the optimal that they are always working to achieve. At a time when achieving the optimal justifies everything from what shows up in Facebook’s news feed to which variety of kale to add to your smoothie, my book explores a particular kind of optimization through internet daemons. I hope my framework proves useful beyond this book in exploring these other optimizations at work today.