2

Possessing Infrastructure

Nonsynchronous Communication, IMPs, and Optimization

DAEMONS HELPED INSPIRE what we now call packet switching. Donald Davies, a researcher at the prestigious National Physical Laboratory (NPL) in the United Kingdom and one of the inventors of packet switching, discovered daemons, the same ones mentioned in the previous chapter, when he visited Project MAC (Man And Computer) at the Massachusetts Institute of Technology (MIT). On a research trip in May 1965, he observed the Compatible Time-Sharing System (CTSS) and noted that a “central part of the system” was a “scheduling algorithm” that allowed users to share time on an IBM 7094 computer. While Davies did not mention it in his report, this algorithm was likely part of the Supervisor software program’s daemon that allocated computer resources. Davies saw great promise in the daemon, though he also commented that “there does not seem to have been much development of the scheduling algorithm.”[1] It is unclear whether this daemon inspired Davies to think of extending the principles of time-sharing into communication infrastructure or whether it served only as a model for describing his new design for a digital communication infrastructure. Either way, in 1966, Davies wrote a “Proposal for a Digital Communication Network” that became a foundation of packet switching. Davies envisioned a new, intelligent infrastructure for computer communication. Just as a scheduling algorithm accommodated multiple users, Davies’s proposed infrastructure used programs to accommodate multiple networks on the new digital communication system.

How did daemons make their way from Davies’s proposal to the internet? Davies, for his part, faced difficulties winning the British government’s support. He did, however, influence a team of researchers working in the heart of the American military-industrial-academic complex. In 1967, at a symposium organized by the Association for Computing Machinery (ACM) in Gatlinburg, Tennessee, Davies’s work found a receptive audience in researchers from the Information Processing Techniques Office (IPTO), which was part of the Advanced Research Projects Agency (ARPA). The IPTO researchers were presenting their own work on a new communication system called ARPANET. Soon after the meeting, the researchers drew on ideas from Davies and numerous others working in computer communication to build an actual experimental communication system.

Central to the history of the internet, IPTO was a key site in the development of packet switching, though the ideas generated there were more the product of a general intellect than of a lone inventor. The collaborators are too numerous to name here, and any attempt to list them would inevitably leave some out. ARPANET’s history has been well covered,[2] but its specific relationship to control, networking, and infrastructure requires greater attention to fully account for the influence of daemons.

Through ARPANET, daemons possessed the internet’s core infrastructure. The computers embedded in the network’s infrastructure were called Interface Message Processors (IMPs). Daemons resided on IMPs and handled the logistics of sending and receiving information, and Davies helped researchers at the IPTO formalize these functions. As mentioned above, he proposed a digital infrastructure designed for nonsynchronous communication. Where early computer networks (whether batch, real-time, or time-sharing) synchronized humans and machines, his nonsynchronous communication system could modulate transmission to facilitate many kinds of networking. This shift (foundational to the design of packet switching) tasked daemons with managing these different networks. For many reasons, not the least of which was the massive funding dedicated to the task by the U.S. Department of Defense, it was ARPANET’s version of packet switching that established the dominant approach to internetworking.

Where the previous chapter introduced the concepts of networks and control, this chapter uncovers the origins of daemons and their flow control. Without daemons, the internet could not be a network of networks, because their flow control allows many networks to coexist simultaneously. Their origins also foreshadow their enduring influence. Daemons have historically been responsible for managing and optimizing the network of networks. Since their beginnings on IMPs, they have grown more clever, more influential, and more capable of managing and optimizing traffic. Their influence over the conditions of transmission continues to enable and control the networks of the internet.

From Local Nets to ARPANET: The Origins of Packet Switching

By the early 1960s, the ambitions for time-sharing computing had grown beyond sharing limited computer resources. Time-sharing had begun to be seen as a new kind of communication to be shared as widely as possible. Speaking at an early conference imagining the computers of the future, John McCarthy, a key figure involved in time-sharing at MIT, thought that time-sharing computing “may someday be organized as a public utility.”[3] Already at MIT, computing had developed its own culture. Tung-Hui Hu vividly describes the feelings of intimacy and privacy that time-sharing engendered.[4] Programmers on different terminals could chat with one another, share private moments, or steal time on the shared device. Sometimes remote users dialed in to access the mainframe computer from home using a then-cutting-edge device known as a modem. If individual users could connect remotely to their local time-sharing systems, could time-sharing systems be interconnected remotely? More broadly, could time-sharing be a model for a new kind of communication?

J. C. R. Licklider figures significantly in the development of ARPANET (and, by extension, of packet switching) because he directed tremendous resources from the U.S. government. His problem of man–computer symbiosis had attracted the attention of ARPA, at that point a new organization (which would later be renamed the Defense Advanced Research Projects Agency, or DARPA). Two years prior to the publication of Licklider’s paper on man–computer symbiosis, the Department of Defense founded ARPA to coordinate research and development prompted by fears over the Soviet Union’s launch of the Sputnik 1 satellite in 1957.[5] ARPA sought out Licklider to direct its Command and Control Research project,[6] and he joined as the project’s director in 1962. Licklider redefined ARPA’s interests in command and control as seeking “improved man-computer interaction, in time-sharing and in computer networks.”[7] This change in vision was reflected in a change in nomenclature: Licklider renamed the project the Information Processing Techniques Office (IPTO).

Many of ARPANET’s original engineers and developers cite Licklider as a visionary who set a path toward a global computer network during his first tenure as director, from 1962 to 1964.[8] Licklider’s optimism about computers stood in stark contrast to the times. Colleagues like Norbert Wiener became more pessimistic about computing over time,[9] while students burned computer punch cards in protest of the Vietnam War.[10] However, Licklider’s optimism attracted some of the best minds in computing. IPTO’s researchers came from the Stanford Research Institute, the University of California, Berkeley, the University of California, Los Angeles, the Carnegie Institute of Technology, Information International Inc., Thompson-Ramo-Wooldridge, and MIT.[11] The people at these centers had “diverse backgrounds, philosophies, and technical approaches from the fields of computer science, communication theory, operations research and others.”[12] These researchers explored numerous applications of man–computer symbiosis, including time-sharing, computer graphics, new interfaces, and computer networking.

At IPTO, packet switching (before it was called that) was codified in four key documents: a memo from 1963, a report from 1966, a paper from 1967, and a final Request for Quotations (RFQ) in 1968. To chart the evolution of packet switching at IPTO, the following section reviews these documents and discusses the important work done by Davies. Taken together, Davies’s efforts and the IPTO documents reveal the importance of internet daemons to ARPANET, the predecessor of the internet.

Intergalactic, Planetary: From Symbiosis to Networking

The link between symbiosis and a research project about computer networking was explicitly established in a memo by Licklider. Given the humorous title “Memorandum for Members and Affiliates of the Intergalactic Computer Network,” the memo circulated to members of IPTO in April 1963.[13] In the memo, Licklider seems to be thinking out loud about the benefits of interconnected computer systems. (Literally out loud; in its style, the memo seems to have been hurriedly dictated before a plane trip.) The memo signals Licklider’s shift in institutional research away from command and control and toward researchers whose work was in “some way connected with advancement of the art or technology of information processing,”[14] hence the subsequent name change to the IPTO. The memo implicitly draws on his “Man–Computer Symbiosis” paper. As discussed earlier, symbiosis was a communication problem. In the 1963 memo, Licklider extends this communication problem from a symbiosis between a human and a computer to a group activity. Indeed, the “Intergalactic Computer Network” in the memo can be read as the community of researchers interested in computer networking, as well as the technical design of a system to connect programs and data stored on remote computer systems or what he might later call “thinking centers.”

While Licklider had a vision for intergalactic networks of humans and machines engaged in what he might later call “on-line interactive debugging,” the memo focused principally on the design challenge of enabling work across interconnected computer systems. How could data shared between computers be kept up to date? How could data encoded in one system be read by another? Licklider sought a balance between local autonomy and collective intelligence, using computers to accommodate regional differences. As he explains,

Is it not desirable, or even necessary for all the centers to agree upon some language or, at least, upon some conventions for asking such questions as “What language do you speak?” At this extreme, the problem is essentially the one discussed by science fiction writers: “how do you get communications started among totally uncorrelated ‘sapient’ beings?”[15]

In retrospect, Licklider was discussing the question of internetworking protocols, in addition to reiterating the similarities among humans, machines, and other alien intelligences. This process of choosing a language eventually evolved into a formal research program for networking standards and protocols to allow communication between different software systems. Many of these network communication problems emerged out of time-sharing, and Licklider wondered, “is the network control language the same thing as the time-sharing control language?” This question framed the development of ARPANET as an outgrowth of time-sharing.

Computer communication occupied subsequent directors of ARPANET, who took research on packet switching from a speculative memo to a request for computer equipment. Licklider was a “visionary . . . not a networking technologist, so the challenge was to finally implement such ideas,” according to Leonard Kleinrock, another key figure in the development of the internet.[16] The motivations of Licklider’s successors were much less otherworldly than his, though no less ambitious. In 1965, IPTO commissioned a report on interconnecting time-sharing computer systems.

1966: Lawrence Roberts and Early Computer Communication

The report was written by Thomas Marill from the Computer Corporation of America and Lawrence Roberts of the Lincoln Laboratory. Submitted in 1966, the report was entitled “A Cooperative Network of Time-Sharing Computers.” It began by stressing the need for the computer networking research community to address fragmentation issues brought about by an increasing number of computer systems and programming languages. The report’s authors then turned to the software and hardware issues involved in connecting computer systems. The report “envision[ed] the possibility of the various time-shared computers communicating directly with one another, as well as with their users, so as to cooperate on the solution of problems.”[17] Its findings drew on experimental research linking a TX-2 computer at Lincoln Laboratory in Lexington, Massachusetts, with a Q-32 computer at the System Development Corporation in Santa Monica, California.

The hardware issue was simple: there wasn’t any. Or, to put it another way, computer communication infrastructure had not yet been formalized. In their paper, Marill and Roberts actively debated infrastructural matters, especially the lines to connect computers. The experimental link between the TX-2 computer and the Q-32 computer sent messages on leased telephone lines. Messages and commands shared the same line. Future infrastructure did not have to be that way. Marill and Roberts wondered whether it would be more efficient to send commands and data on different lines. They also debated adding computers to the infrastructure itself—a move that foreshadowed the design of ARPANET. They wrote: “It may develop that small time-shared computers will be found to be efficiently utilized when employed only as communication equipment for relaying the users’ requests to some larger remote machine on which the substantive work is done.”[18]

As much as the report questioned the materials of a computer network, it ignored or abstracted the telephone system. The report set a precedent of abstraction—later known as layering—in which certain infrastructural functions can be taken for granted (not unlike the women involved in the research at the time). Telephone switching received no mention, even though it had begun to involve computers as well. Bell had installed the Electronic Switching System No. 1, a computer for telecommunications switching, in 1965.[19] In part, these switches ran a secure telephone system for the U.S. military designed by AT&T and known as AUTOVON.[20] The military referred to AUTOVON as a polygrid: a network of densely linked sites that operationalized a Cold War strategy of distribution to ensure survivability.[21] AUTOVON distributed traffic among these hardened sites to ensure the survival of communication after a nuclear attack. (Paul Baran, the other inventor of packet switching, developed his own model of distributed communication in reference to AUTOVON.)

The report showed more of an interest in software than in hardware. Much of the report discussed the operation of the message protocol that allowed the Q-32 and the TX-2 to communicate directly. The report defined its message protocol as “a uniform agreed-upon manner of exchanging messages between two computers in the network.”[22] The protocol established a connection between computers in order for messages to be exchanged. The protocol did not specify the contents of messages but instead described the command data that preceded and followed every message, which helped each computer transmit data and interpret the message. The report referred to this initial data as the “header,” a term that was maintained in the transition to packet switching. Headers were sent before the message to indicate whether the forthcoming message contained data for the user or the monitor (the TX-2 had an experimental graphic interface). An ETX (end of text) command marked the end of a message, after which the receiver could send an ACK (acknowledgment) to confirm receipt and request the next message.
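The exchange can be sketched as a toy program. This is not the TX-2 or Q-32 code: the header layout, the field values “user” and “monitor,” and the use of the standard ASCII ETX and ACK control characters as literal bytes are assumptions made for illustration.

```python
# Toy model of a header / body / ETX / ACK exchange between two computers.
# The header layout is an illustrative assumption, not the 1966 format.
ETX = "\x03"  # ASCII "end of text" control character
ACK = "\x06"  # ASCII "acknowledge" control character


def frame_message(destination: str, body: str) -> str:
    """Prefix a header saying who should receive the body, then mark the end with ETX."""
    if destination not in ("user", "monitor"):
        raise ValueError("destination must be 'user' or 'monitor'")
    return f"HDR:{destination}:" + body + ETX


def receive(frame: str) -> tuple[str, str, str]:
    """Return (destination, body, reply); reply with ACK only once ETX has arrived."""
    if not frame.endswith(ETX):
        return ("", "", "")  # message incomplete, so no acknowledgment yet
    _, destination, body = frame[:-1].split(":", 2)
    return (destination, body, ACK)


destination, body, reply = receive(frame_message("monitor", "DRAW LINE 0 0 10 10"))
print(destination, body, reply == ACK)
```

The header tells the receiving machine where the body belongs before the body arrives; the ETX tells it when the message is complete, and only then does it acknowledge.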

1967: Proposing an ARPANET

The next iteration of ARPANET appeared in a paper in 1967 that introduced daemons into the computer infrastructure. The paper was the product of much collaboration coordinated by Roberts, who joined IPTO at the end of 1966 to direct the office’s research into networking. Roberts started what became the Networking Working Group in 1967. The group included researchers from the RAND Corporation (short for Research ANd Development), from the University of California institutions in Los Angeles and Santa Barbara, and from the University of Utah. Group discussions led to a paper entitled “Multiple Computer Networks and Inter-computer Communication,” presented at the ACM Operating Systems Symposium in Gatlinburg in October 1967. Delivered at the Mountain View Hotel, nestled in the Smoky Mountains, the paper set out ideas developed at a meeting of IPTO’s principal investigators earlier that year and in subsequent breakout groups of researchers interested in networking.[23]

In spite of the elevation of the conference location, the paper was markedly grounded. It provided a rough outline of a network design oriented to the computer research community (in contrast to the intergalactic ideals of Licklider), and it proposed a system that allowed “many computers to communicate with each other.”[24] The system’s benefits included facilitating remote access to specialized hardware and software, sharing programs and data, and limited interactive messaging. The paper anticipated interconnecting thirty-five computers across sixteen locations, creating an infrastructure from the social network of IPTO. These computers connected on leased telephone lines—the lone discussion of common carriage in the paper.

The paper made a decisive recommendation to use computers as the building blocks of the communication infrastructure. In doing so, IPTO ushered daemons into the network. The paper’s authors called such a computer an Interface Message Processor (IMP), a delightfully supernatural-sounding name[25] suggested by Wesley Clark at the ARPA principal investigator meeting in May 1967.[26] IMPs were responsible for “dial up, error checking, retransmission, routing and verification.”[27] IMPs embedded computers in infrastructure. Kleinrock, IPTO researcher and expert in queuing theory, later wrote that the benefit of IMPs was that “[t]he ability to introduce new programs, new functions, new topologies, new nodes, etc., are all enhanced by the programmable features of a clever communications processor/multiplexor at the software node.”[28] Cleverness, in short, meant that IMP computers were programmable, capable of obeying set instructions.[29]

The paper categorized the hypothetical ARPANET as a “store-and-forward” network, a term that situated ARPA in the history of telecommunications alongside the telegraph. Store-and-forward systems use a series of interconnected nodes to send and receive discrete messages. Each node stores the message and forwards it to a node closer to the final destination; other computer data networks at the time worked similarly. The ARPANET was proposed as a series of interconnected IMPs that could send, receive, and route data. This meant the network was not necessarily real-time, as the hops between IMPs delayed a message’s arrival at its destination. Real-time applications might have required the IMPs to maintain the line, but the paper did not elaborate on this application. IMPs forwarded what the paper called message blocks. Each message block’s header included its origin and destination so that daemons could route the message. IMPs communicated intermittently (not unlike batch computing). The paper did “not specify the internal form” of a message block.[30] Instead, the message block could contain any sort of data.
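A minimal sketch of store-and-forward relaying follows. It assumes a hypothetical chain of four IMPs named A through D and a shortest-path rule for choosing the next node; the 1967 paper specified neither. Each node holds the whole block in its buffer before passing it on, which is where the delay between hops comes from.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class MessageBlock:
    origin: str
    destination: str
    data: bytes  # opaque: the 1967 paper did "not specify the internal form" of a block


# Hypothetical four-IMP chain; the names and links are illustrative only.
LINKS = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C"]}


def next_hop(current: str, destination: str) -> str:
    """Return the neighbor of `current` that lies on a shortest path to `destination`."""
    if current == destination:
        return current
    parent = {current: None}
    frontier = deque([current])
    while frontier:  # breadth-first search outward from the current node
        node = frontier.popleft()
        for neighbor in LINKS[node]:
            if neighbor not in parent:
                parent[neighbor] = node
                frontier.append(neighbor)
    if destination not in parent:
        raise ValueError(f"{destination} is unreachable from {current}")
    step = destination
    while parent[step] != current:  # walk back until the hop adjacent to `current`
        step = parent[step]
    return step


def store_and_forward(block: MessageBlock) -> list[str]:
    """Relay a block hop by hop; each IMP holds the whole block before passing it on."""
    buffers: dict[str, list[MessageBlock]] = {name: [] for name in LINKS}
    node = block.origin
    buffers[node].append(block)  # store at the originating IMP
    path = [node]
    while node != block.destination:
        held = buffers[node].pop(0)  # forward: release the block from this IMP's memory
        node = next_hop(node, held.destination)  # toward a node closer to the destination
        buffers[node].append(held)  # and store it again at the next IMP
        path.append(node)
    return path


path = store_and_forward(MessageBlock(origin="A", destination="D", data=b"hello"))
print(path, "hops:", len(path) - 1)
```

Each hop in the printed path is a point where the block waits in memory, which is why such a network was not necessarily real-time.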

While the paper established the need for computers in the infrastructure, their job needed more clarification. The IMPs’ function came into focus during the 1967 ACM symposium in Gatlinburg, when Roberts met Roger Scantlebury of NPL in Teddington, England, who was there to present NPL’s research on computer networks, led by Davies.[31]

Donald Davies and Nonsynchronous Communication

The fourth key document, the 1968 RFQ that represented the first step toward actually building the ARPANET, cannot be understood without accounting for the influence of Davies and his work on what is now called “packet switching.” His interest in computers began at NPL, where he worked with Alan Turing. NPL promoted him to technical manager of the Advanced Computer Techniques Project in 1965.[32] That same year, he visited the United States, in part to investigate time-sharing projects at Project MAC and other computer centers at Dartmouth College, the RAND Corporation, and General Electric.[33] He was sufficiently enthused by time-sharing to begin thinking seriously about undertaking it at NPL.[34] The following year, he gave a talk on March 18 based on a report to NPL entitled “Proposal for a Digital Communication Network,” mentioned in this chapter’s introduction. This report outlined the ideas that later became the paper presented in Gatlinburg in 1967.

The “Proposal for a Digital Communication Network” began with the ambitious goal of creating a “new kind of national communication network which would complement the existing telephone and telegraph networks.”[35] The core of the proposal was a call for “a major organizational change.”[36] Davies proposed a digital communication infrastructure that supported multiple kinds of computer networks through innovation in its flow control. Davies recognized that a great number of applications of computers already existed and that the number would only increase in the future. While telephone networks could be provisioned with modems to allow digital communication, these analog systems created impediments to the reliability of digital communication. He proposed creating a communication infrastructure that could support these diverse networks. As he and his colleagues later wrote, “the difficulty in such an approach is to develop a system design general enough to deal with the wide range of users’ requirements, including those that may arise in the future.”[37]

Davies’s proposed system supported diverse and multiple applications through an approach that he called “nonsynchronous communication.” The approach’s key innovation was to avoid a general synchronization of the infrastructure. While Licklider and others had also faced the problem of connecting multiple durations, they had settled on specific forms of synchronization as a solution (e.g., time-sharing or batch computing). Davies avoided synchronization altogether. His hypothetical digital infrastructure differed from the telephone and telegraph networks, which were built for “synchronous transmission” and “design[ed] for human use and speed.”[38] A computer network, Davies observed, did not have only human users; it had “two very different kinds of terminals attached to this network; human users’ consoles or enquiry stations working at very slow speed and real time computers working at high speeds.”[39] Unlike the Semi-Automatic Ground Environment (SAGE) or Project MAC, the proposed system facilitated “non-synchronous transmission, which is a consequence of connecting terminals that work at different data rates.”[40] Davies recognized that the infrastructure itself should not decide how to synchronize its users but should instead create the capacity for multiple kinds of synchronization at once.

Davies drew on the example of time-sharing systems, particularly Project MAC, to delineate the core functions of nonsynchronous communication. He described in detail the allocation of time on a multiaccess computer. The project’s CTSS operating system divvied up limited processor time through what Project MAC likely called a “scheduling daemon.” The daemon divided jobs into segments and allocated processor time to these smaller segments.[41] Just as CTSS broke its users’ jobs into segments, Davies proposed breaking messages into smaller units that he called packets. Packets made hosting multiple networks on the same infrastructure easier. The infrastructure handled smaller requests within its capacity and responded quickly enough to mimic other kinds of networks, whether real-time, multiaccess, online, or batch-processing.
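The segmentation Davies described can be sketched in a few lines. The packet fields and the eight-byte packet size below are illustrative assumptions rather than figures from his proposal; the point is that a short message need not wait behind a long one when the two share a line packet by packet.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    message_id: int
    seq: int      # position of this packet within its message
    total: int    # how many packets the message was cut into
    payload: bytes


PACKET_SIZE = 8  # bytes; an arbitrary illustrative value, not Davies's figure


def packetize(message_id: int, message: bytes, size: int = PACKET_SIZE) -> list[Packet]:
    """Cut a message into fixed-size packets, labeling each so it can be reassembled."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)] or [b""]
    return [Packet(message_id, i, len(chunks), chunk) for i, chunk in enumerate(chunks)]


def interleave(*messages: list[Packet]) -> list[Packet]:
    """Share one line among several messages by taking one packet from each in turn."""
    queues = [list(m) for m in messages]
    line = []
    while any(queues):
        for queue in queues:
            if queue:
                line.append(queue.pop(0))
    return line


def reassemble(packets: list[Packet]) -> bytes:
    """Put packets back in order, whatever order they arrived in."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or len(ordered) != ordered[0].total:
        raise ValueError("message incomplete")
    return b"".join(p.payload for p in ordered)


long_job = packetize(1, b"L" * 40)   # five packets
short_query = packetize(2, b"hi")    # one packet
line = interleave(long_job, short_query)
arrived = [p for p in line if p.message_id == 1]
random.shuffle(arrived)              # packets may arrive out of order
print(reassemble(arrived) == b"L" * 40)
```

The short query goes out second on the shared line rather than sixth, which is the sense in which small units let one infrastructure respond quickly to very different users.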

Davies also described packet communication as a store-and-forward system. Similar to ARPANET’s design, his nonsynchronous communication system was a series of interconnected node computers. Davies encouraged setting up the system to be over-connected so that packets could travel on different routes in case one node became oversaturated. These nodes exchanged packets in a manner similar to that in which Roberts envisioned message blocks in ARPANET, but with a key difference: the packets’ smaller size greatly altered the system’s operation. These short units required much more activity and greater control in the network, as the node computers had to handle packets constantly.

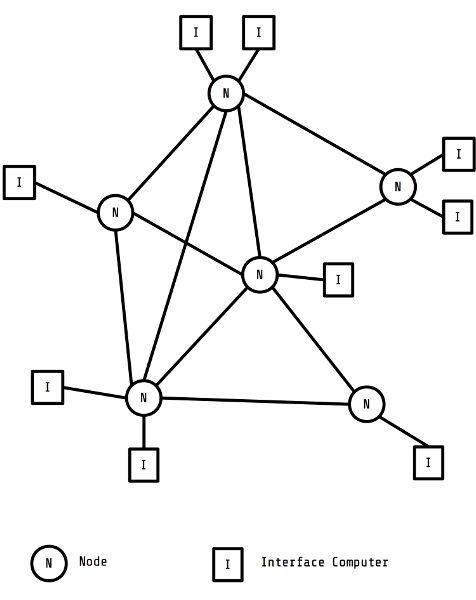

Figure 2. Donald Davies’s diagram of a digital communication system (reproduction).

At the Gatlinburg ACM conference in 1967, Scantlebury presented an updated version of packet communication entitled “A Digital Communication Network for Computers Giving Rapid Response at Remote Terminals.” The report characterized the new digital communication system as a “common carrier” for public, commercial, and scientific computing applications. In keeping with the general purpose of a common carrier network, the report also described three types of networking: “man-computer, computer-computer and computer-machine.”[42] Humans communicated with each other or with computers.[43] Computers also communicated among themselves. Nonsynchronous communication modulated the conditions of transmission to accommodate these different human and machine applications. The proposal by Davies and his colleagues thus expanded the concept of “common carriage” to mean a communication system capable of accommodating humans and machines alike.

The paper further refined the role of computers in the infrastructure. The report’s authors described two types of computers in a diagram of the proposed system, as seen in Figure 2. One computer called an “interface” acted as the single conduit for a local area network to access the system. Interfaces connected to other computers called “nodes” that coordinated the transmission of packets to their destinations. Nodes used an “adaptive routing technique” to transmit packets between each other. The report cited this technique as a form of hot-potato routing developed by Paul Baran (the other inventor of packet switching). Adaptive routing involved daemons maintaining a map (or table) of the network and passing off a packet to the closest node as quickly as possible (much like the children’s game “hot potato”). Eventually, by passing the packet from node to node, the daemons routed it to the right location.
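The hot-potato logic can be sketched as a node that consults only its own table. The sketch assumes that a node learns how far away other nodes are by noting how many hops incoming packets have already traveled; the class and method names are hypothetical, and the code is a simplification rather than Baran’s or Davies’s design.

```python
import math
from typing import AbstractSet, Optional


class Node:
    """A hypothetical packet-switching node that routes using only local knowledge."""

    def __init__(self, name: str, neighbors: list[str]):
        self.name = name
        self.neighbors = list(neighbors)
        # table[destination][neighbor] = fewest hops observed to `destination` via `neighbor`
        self.table: dict[str, dict[str, float]] = {}

    def learn(self, origin: str, via: str, hops: int) -> None:
        """A packet from `origin` that arrived through `via` after `hops` hops suggests
        that `origin` can be reached back through `via` in about that many hops."""
        row = self.table.setdefault(origin, {})
        row[via] = min(row.get(via, math.inf), hops)

    def choose_link(self, destination: str,
                    busy: AbstractSet[str] = frozenset()) -> Optional[str]:
        """Hot potato: rank neighbors by estimated hops, then take the best link that is
        free right now rather than wait for a better one. None means every link is busy."""
        row = self.table.get(destination, {})
        ranked = sorted(self.neighbors, key=lambda n: row.get(n, math.inf))
        for neighbor in ranked:
            if neighbor not in busy:
                return neighbor
        return None


node = Node("X", ["A", "B", "C"])
node.learn("Z", via="B", hops=2)
node.learn("Z", via="A", hops=4)
print(node.choose_link("Z"), node.choose_link("Z", busy={"B"}))
```

When the preferred link is occupied, the node passes the packet along a worse link at once instead of holding it, trusting later nodes to correct the course.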

Davies left the work of optimization to the node computers, where “each node takes cognizance of its immediate locality.” This “simple routing and control policy” enabled the “high data handling rate” in their proposal.[44] Davies was neither the first nor the last to envision network optimization through decentralized nodes. At the same conference, Roberts introduced IMPs as a similar computer embedded in the infrastructure. Both papers avoided central control (no doubt cause for conversation after the panel). Instead, the proper functioning of the infrastructure was left up to the IMPs (or nodes) to determine using their localized knowledge, creating a distributed pattern. Independent IMPs acting collectively led to an efficient network. Davies knew this to be true only through simulation: he modeled and ran a computer experiment to calculate the optimal design of his node computers and their processing power.

Davies’s distributed approach stood as a novel optimization at the time. Other calculations of the optimal state might have been the product of linear programming or game theory.[45] Recall Licklider’s frustration with batch computing discussed in chapter 1. Waiting for an optimal decision from a batch computer meant that “the battle would be over before the second step in its planning was begun.”[46] Researchers at the RAND Corporation turned to computer simulation as a means to calculate the behavior of complex systems as they began to notice the limits of calculating optimal states in game theory.[47] Where these examples used computers to simulate reality, IMPs became a computer simulation made real, knowable only in its distributed operation. A turn to distributed intelligence was also novel at the time. Even popular culture had its versions of a central computer knowing the right answer. In 1966, Robert Heinlein’s The Moon Is a Harsh Mistress featured a central computer known as HOLMES IV that plots a lunar uprising along with the hero of the book. Billion Dollar Brain, a film from 1967, featured a brilliant computer helping to outthink a communist plot. Even the computer villain HAL 9000 from Stanley Kubrick’s 1968 movie 2001 was a central processor, capable of being pulled apart.

Packet communication, by contrast, distributed intelligence among daemons who constantly ferried packets between the infrastructure’s nodes. These node computers gave new meaning to the nascent idea of an IMP. By all accounts, Davies’s work informed ARPANET’s design through various late-night conversations. Scantlebury introduced Roberts to complementary research for ARPANET, such as Baran’s work at RAND, and to NPL’s approach to networking. All these ideas informed the final document that led to the construction of the first IMP.

1968: Daemonizing the Infrastructure

Roberts submitted the specifications for ARPANET’s guidelines in June 1968, and they were sent out as an RFQ the next month.[48] The RFQ solicited bids from major technology firms. Proposals came from familiar companies like Bolt, Beranek, and Newman Inc. (BBN), along with a joint bid from Digital Equipment Corporation and the Computer Corporation of America (the same firm that helped author the 1966 report) and a bid from Raytheon.[49] Notably, the RFQ focused on the design and operation of the IMP. Bidders had to propose a design for the IMP and then program and install it at four nodes in less than a year. In the following section, I will trace the connections between the RFQ and its lingering optimality problems.

The IMP, as mentioned in the 1967 report, acted as the intermediary between a host computer (or a local network) and the wider ARPANET. The IMP functioned as a distributed networking infrastructure needed to send and receive messages between the ARPANET sites. The RFQ described the IMP’s tasks as follows: “(1) Breaking messages into packets, (2) Management of message buffers, (3) Routing of messages, (4) Generation, analysis, and alteration of formatted messages, (5) Coordination of activities with other IMPs, (6) Coordination of activities with its HOST, (7) Measurement of network parameters and functions, and (8) Detection and disposition of faults.”[50] Two of its functions deserve a closer look: buffering and routing. Together, they define the important modulations of flow control.

“Buffering” referred to how IMPs stored and forwarded packets: an IMP had to hold packets in its local memory for brief periods before sending them to the next IMP. Limited resources, especially local memory, meant that inefficient buffering could lead to congestion and error. When IMPs became overloaded or encountered delays, they had to pause packet transmission. Buffering allowed the IMP to control data flows, holding a packet in the buffer to be sent later when the line was congested. IMPs also stored a copy of a packet in case it needed to be resent due to error. And IMPs signaled each other if, for example, one needed another to stop transmitting because it was congesting the network (also known as “quenching”). The RFQ did not specify the optimal way for an IMP to manage its queue, but it did situate the problem in queuing theory.
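The buffering duties in the RFQ can be modeled as a small class. The capacity, the quench threshold, and the policy of refusing packets when memory is full are assumptions chosen for illustration; the RFQ itself left these choices open.

```python
from collections import deque
from typing import Optional


class ImpBuffer:
    """Toy IMP memory: holds packets until the line is free, keeps copies of sent packets
    until they are acknowledged, and signals a quench when memory runs low."""

    def __init__(self, capacity: int = 4, quench_at: int = 3):
        self.capacity = capacity    # packets the memory can hold (assumed)
        self.quench_at = quench_at  # occupancy at which upstream is asked to stop (assumed)
        self.waiting: deque[str] = deque()  # stored, not yet forwarded
        self.unacked: set[str] = set()      # forwarded, copy kept for retransmission

    def occupancy(self) -> int:
        return len(self.waiting) + len(self.unacked)

    def accept(self, packet_id: str) -> bool:
        """Store an incoming packet, or refuse it (False) if memory is full."""
        if self.occupancy() >= self.capacity:
            return False
        self.waiting.append(packet_id)
        return True

    def should_quench(self) -> bool:
        """True when neighbors should be told to stop transmitting for a while."""
        return self.occupancy() >= self.quench_at

    def send_next(self) -> Optional[str]:
        """Forward the oldest stored packet but keep a copy in case it must be resent."""
        if not self.waiting:
            return None
        packet_id = self.waiting.popleft()
        self.unacked.add(packet_id)
        return packet_id

    def acknowledge(self, packet_id: str) -> None:
        """The next IMP confirmed receipt, so the copy can be discarded."""
        self.unacked.discard(packet_id)

    def resend(self, packet_id: str) -> Optional[str]:
        """Retransmit a packet after an error, if a copy is still held."""
        return packet_id if packet_id in self.unacked else None


imp = ImpBuffer(capacity=3, quench_at=2)
imp.accept("p1")
imp.accept("p2")
print(imp.should_quench(), imp.send_next(), imp.accept("p3"), imp.accept("p4"))
```

A sent packet still occupies memory until it is acknowledged, which is why retransmission and congestion compete for the same scarce resource.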

Theories to manage queues developed out of telephone engineering, mathematics, and operations research as attempts to find optimal strategies for managing waiting lines in communication systems (though they had numerous applications outside the field). At the time of the RFQ, scholars had just begun to develop the theory beyond the single channel of Claude Shannon’s information theory and toward modeling queues in multinode, multichannel networks.[51] Kleinrock wrote his dissertation on a mathematical theory of effective queuing to prevent congestion and ensure efficient resource allocation.[52] In it, he suggested that store-and-forward networks had stochastic flows, that is, messages sent at random intervals, which had to be modeled through probability. Based on this model, he contended that an optimal network assigned priority to the shorter messages in queues.[53] His ideas anticipated the kinds of queuing algorithms later developed and implemented on ARPANET in the 1970s and on the contemporary internet.
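Kleinrock’s argument was probabilistic, but the intuition behind favoring short messages can be shown with a deterministic toy case. The sketch below assumes three messages already waiting on a single line, with made-up lengths, rather than Kleinrock’s stochastic arrivals, and compares the average wait under first-come-first-served order with the average wait under shortest-first priority.

```python
def average_wait(lengths):
    """Mean time each message waits before its transmission begins,
    assuming all messages are already queued on a single line."""
    total_wait, elapsed = 0, 0
    for length in lengths:
        total_wait += elapsed   # this message waited for everything ahead of it
        elapsed += length       # the line is busy while this message is sent
    return total_wait / len(lengths)


queue = [5, 1, 3]  # hypothetical message lengths, in arbitrary time units
fifo = average_wait(queue)                    # first come, first served
shortest_first = average_wait(sorted(queue))  # shorter messages get priority
print(f"FIFO: {fifo:.2f}  shortest-first: {shortest_first:.2f}")
```

With these lengths the average wait falls from 11/3 to 5/3 time units. The gain is not free: the longest message, which waited for nothing under first-come-first-served order, now waits four units. Priority is a redistribution of delay, not an elimination of it.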

Queuing also related to resource-allocation issues in time-sharing systems. In his report on Project MAC, Davies noted that the system had four categories of users. Each group had a certain number of lines connected to the machine that regulated system access. Davies explained:

Two lines are reserved for the top management, six for the systems programmers, two for the people using the special display and [twenty] for the rest. When one group of users’ lines is full they can get a stand-by line in another group, if one is free, but may be automatically logged out if that line is wanted by a user in its group.[54]

In other words, Project MAC had begun to design some rudimentary queuing logics into the infrastructure. The scheduling daemon cycled between users connected to the machine, which led to questions about an optimal scheduling system. Should it cycle through in round-robin fashion, as noted by Davies, or use a more complex system?
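The simplest answer to Davies’s question, a plain round-robin cycle, can be sketched in a few lines of Python. This is an assumption for illustration and not necessarily what CTSS’s scheduler actually did; the group names are borrowed loosely from Davies’s categories, and the amounts of computing time each group needs are invented.

```python
from collections import deque


def round_robin(demands, quantum=1):
    """Return the order in which users receive time slices.

    demands maps each user to the units of computing time still needed.
    Each turn a user gets at most `quantum` units, then rejoins the back of the line.
    """
    line = deque(demands.items())
    order = []
    while line:
        user, remaining = line.popleft()
        order.append(user)
        remaining -= quantum
        if remaining > 0:
            line.append((user, remaining))
    return order


print(round_robin({"management": 3, "systems": 1, "display": 2}))
# ['management', 'systems', 'display', 'management', 'display', 'management']
```

Round robin treats every user identically; the reserved and stand-by lines Davies described already depart from that equality by privileging some groups of users over others.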

While these debates continued long after the contract was awarded, the queue illustrates one modulation of flow control in that it specifies which packets have priority over other packets. The RFQ’s specifications delegated flow control to the IMPs to solve these queuing issues. Kleinrock later described flow control as a process whereby programs could “throttle the flow of traffic entering (and leaving) the net in a way which protects the network and the data sources from each other while at the same time maintaining a smooth flow of data in an efficient fashion.”[55] These flow control programs needed to assign priority to packets arriving simultaneously. Which would be transmitted first? And which packet had to wait in the buffer?

In addition to questions of priority, IMPs had to choose the best route to send a packet to its destination. Routing dilemmas and strategies partially emerged out of the literature of telephone engineering, often found in the pages of the Bell System Technical Journal, as part of the move to automated long-distance telephone switching. Operations researchers also had an interest in figuring out the optimal route to travel when faced with multiple paths (often called the traveling salesman problem).[56] Telephone engineers sought to design both the optimal topology of a network and the best algorithms for routing long-distance calls. A central problem they faced was avoiding blocked trunks (or, in IPTO language, congested nodes), and they derived solutions from early applications of probability theory and graph theory. This literature partially framed the challenge faced by the IMP developers. Robert Kahn, one of the key architects of the IMP, first at BBN and later at IPTO, had worked at Bell Labs under the supervision of Roger I. Wilkinson, who wrote an influential article on routing.[57] Wilkinson proposed, for example, that automatic switching should be able to calculate multiple possible routes for a call to minimize delay and reduce congestion in the telephone system.

ARPANET routing posed additional challenges for IMPs. Davies and others had suggested that the network’s topology should be over-connected, and the RFQ stated: “Each IMP shall be connected to several other IMPs by full duplex [fifty] kbps common carrier communication links creating a strongly interconnected net.”[58] This meant that IMPs had options and had to keep track of them to pick the best route. IMPs crashed, became congested, and locked up (for many reasons not originally anticipated by IPTO).[59] As a result, IMPs had to coordinate with other IMPs (responsibility 5 in the RFQ), constantly measure performance (responsibility 7), and detect failures (responsibility 8) to maintain an operational model of the network topology. IMPs kept all this information in what became known as a routing table. Routing daemons read these tables to make decisions about where to send a packet. A routing daemon, for example, might follow Baran’s hot-potato routing strategy by referring to the routing table, finding the IMP closest to the destination, and passing the packet off to the selected IMP as quickly as possible.
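The hot-potato decision just described can be sketched in Python. This is a hedged illustration, not ARPANET’s routing code: the function name, the shape of the table (each destination mapped to each neighbor’s estimated delay), and the node labels and delays are all hypothetical. Following the spirit of hot-potato routing, the daemon skips a busy or failed link rather than waiting for it.

```python
def hot_potato_next_hop(routing_table, destination, unavailable=frozenset()):
    """Choose the neighboring IMP to hand a packet to.

    routing_table maps each destination to {neighbor: estimated delay}.
    Neighbors are tried from lowest to highest estimate; any whose link is
    busy or down is skipped instead of waited for.
    """
    estimates = routing_table[destination]
    for neighbor in sorted(estimates, key=estimates.get):
        if neighbor not in unavailable:
            return neighbor
    return None  # no usable link: the packet stays in the buffer


table = {"D": {"B": 3, "C": 1, "E": 2}}  # hypothetical delay estimates, in hops
print(hot_potato_next_hop(table, "D"))                   # C
print(hot_potato_next_hop(table, "D", {"C"}))            # E
print(hot_potato_next_hop(table, "D", {"B", "C", "E"}))  # None
```

Everything depends on the estimates in the table being current, which is why measurement and failure detection appear among the IMP’s responsibilities alongside routing itself.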

Routing acts as another modulation of flow control, wherein daemons prioritize connections and influence the path of a packet. Daemons then directly overlap with the spatial configurations of the internet’s physical infrastructure. While wires and pipes provide possible connections—a plane of immanence—for networks, daemons enact these connections with significant consequences for the temporal conditions of transmission. Does a daemon pick a route that causes greater delay? How does a daemon know the best path? Through routing, daemons wielded another important influence over the conditions of transmission.

Routing and queuing became two important capacities of ARPANET’s daemons. A packet communication network allows daemons to be extremely particular about their influence, as will be seen in chapter 4. While they could simultaneously accommodate distinct temporalities, their flow control could also manipulate the response time of certain networks, adding delay or giving priority. New ways to understand and interpret packets augmented daemons’ ability to vary transmission conditions. How daemons should use their newfound powers constituted an ongoing debate about the internet’s design.

The RFQ gave three ranked criteria for network performance: message delay, reliability, and capacity. Any proposal would be judged on whether it could ensure that “the average message delay should be less than [half a second] for a fully loaded network,” that the network had a low probability of losing or misrouting a message, and, least importantly, that it had a defined maximum bandwidth per IMP.[60] Delay, the RFQ suggested, could be caused by how the IMP processed and queued packets as well as by the medium of communication. The emphasis on delay, a factor known at the time to cause user frustration (as will be discussed in chapter 5), can be read as a guideline that the network should prioritize interaction and communication. Perhaps in contrast to the need for reliability in a telephone network, ARPANET’s daemons were to be more concerned with lessening delay and, by extension, with the communication between host computers. The RFQ did not discuss matters of prioritization or how to manage multiple networks at once, questions that lingered.

Even allowing for the RFQ’s overall preference for technical language, its description of the network model is strikingly devoid of the vision that animated ARPANET’s origins. Gone are the ideals of man–computer symbiosis or an intergalactic network. The RFQ did not even mention the concept of multimedia networking suggested by Davies. The lack of details posed a lingering question for daemons to solve: what should a network of networks be?

The Optimality Problem

The RFQ provided little direction for the definition of an optimal communication system. The report exemplifies a common issue found in this history: engineers built new systems of control and management without designing ways for these systems to be managed or governed. Howard Frank, Kahn, and Kleinrock, who were all researchers deeply involved in the design of the ARPANET and the IMP, later reflected that “there is no generally accepted definition of an ‘optimal’ network or even of a ‘good’ network,” a statement that could be viewed as an admission of a constitutive issue in the ARPANET.[61] The word “optimal” is telling. As mentioned in the introduction, optimization originates in mathematics and operations research. An optimal solution is the best one among available options. Nonsynchronous communication created the conditions for the internet to be many kinds of networks without necessarily stipulating what an optimal network is. As a result, daemons are always trying to optimize but do not always agree on how.

Even without a clear sense of the optimal, ARPANET included two distinct processes of optimization. On one side, BBN left the management of flow control to the IMPs themselves. BBN’s response to the RFQ eschewed centralized control in favor of letting autonomous IMPs handle the work. As they explained:

Our experience convinced us that it was wrong to plan for an initial network that permitted a sizable degree of external and remote control of IMPs. Consequently, as one important feature of our design, we have planned a network composed of highly autonomous IMPs. Once the network is demonstrated to be successful, then remote control can be added, slowly and carefully.[62]

In practical terms, optimization occurred through actively autonomous IMPs collectively enabling flow control. There was no central control, only the work of daemons coordinating with each other. This process of optimization continues to this day, with the internet running through the distributed work of millions of pieces of hardware.

As the infrastructure grew, ARPANET augmented IMPs’ optimization with a second process: computer simulation. The Network Measurement Center (NMC) at UCLA and the Network Control Center (NCC) at BBN both monitored the performance of IMPs, analyzing the system for congestion and lock-up and then diagnosing the cause. Both centers’ observations led to iterative changes in the design of the IMPs and the overall operation of the system. Simultaneously, programs at BBN and at another contractor, the Network Analysis Corporation (NAC), ran simulations of ARPANET to understand and improve its performance and forecast its growth.[63] Between the simulations and the work of ARPANET, the specific nature of network optimization became apparent. Semiannual reports from NAC provide a concise statement of the logic of optimization used in computer simulation. IPTO first contracted NAC’s services in 1969 to help plan its expansion beyond the first four nodes, and NAC submitted six semiannual reports to IPTO from 1970 to 1972.[64]

Like so many IPTO contractors, NAC’s president, Howard Frank, was a familiar member of the computer science research community, trained in and drawing on many techniques of the cyborg sciences. He earned his PhD from Northwestern University, where his doctoral work applied network theory to computer communication systems. His research resembled Kleinrock’s so closely that a colleague mistook the library’s copy of Kleinrock’s published book for Frank’s unfinished dissertation. (Upon hearing the news, Frank rushed to the library to find the book and was relieved to see that their approaches differed enough that his dissertation still made a contribution.) After graduation, Frank worked with Kleinrock at the White House in an experimental group known as the Systems Analysis Center. Their use of network theory to analyze offshore natural gas pipeline systems saved an estimated $100 million.[65] After Richard Nixon’s election, Frank focused on applying the same network analysis elsewhere, for example to modeling ARPANET.

Unlike the IMPs, NAC had a better sense of the optimal. Building on research in pipeline design, graph theory, and the subfield of network theory,[66] the first of the six reports described a methodology and a recommendation for an optimal topology for ARPANET. This was primarily an infrastructure question: calculating the optimal number and location of nodes, the capacity of the telephone lines that linked them together, and the overall capacity of the system. The report concisely described this problem at the start:

Each network to be designed must satisfy a number of constraints. It must be reliable, it must be able to accommodate variations in traffic flow without significant degradation in performance, and it must be capable of efficient expansion when new nodes and links are added at a later date. Each design must have an average response time for short messages no greater than 0.2 seconds. The goal of the optimization is to satisfy all of the constraints with the least possible cost per bit of transmitted information.[67]

Topological optimization iteratively calculated the most economical feasible network design. This process held constant much of the work of IMPs and users, such as fixing the routing calculation, averaging traffic for regular and high-volume nodes,[68] and using the established buffer size of IMPs. Keeping these constant, the optimization program drew a network between nodes, calculated its cost, and then redrew the network. It then compared the two designs and kept the cheaper feasible network as the input for the next iteration. The program produced a number of different topologies for the ARPANET that Frank analyzed at the end of the report. These designs informed the growth of ARPANET into a twelve-, sixteen-, or eighteen-node infrastructure at a time when it had just four nodes.
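The draw, cost, redraw, and compare loop can be sketched in Python under heavy simplifications, all of them assumptions rather than details of NAC’s program: cost is simply the summed length of lines, the feasibility test (every node connected, each with at least two links) stands in for NAC’s reliability and response-time constraints, and the random move of dropping one link and sometimes adding another is invented for illustration. The node coordinates are hypothetical.

```python
import itertools
import math
import random


def total_cost(links, coords):
    """Cost of a design: here simply the summed length of its lines."""
    return sum(math.dist(coords[u], coords[v]) for u, v in links)


def is_feasible(links, n):
    """Crude reliability constraint: connected, and every node has two or more links."""
    neighbors = {i: set() for i in range(n)}
    for u, v in links:
        neighbors[u].add(v)
        neighbors[v].add(u)
    if any(len(neighbors[i]) < 2 for i in range(n)):
        return False
    seen, stack = {0}, [0]
    while stack:
        for w in neighbors[stack.pop()]:
            if w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen) == n


def optimize_topology(coords, rounds=2000, seed=0):
    """Redraw the network repeatedly, keeping a new design only if it is feasible and cheaper."""
    rng = random.Random(seed)
    n = len(coords)
    every_link = list(itertools.combinations(range(n), 2))
    current = set(every_link)  # start from a fully connected, feasible design
    for _ in range(rounds):
        candidate = set(current)
        candidate.discard(rng.choice(sorted(current)))  # drop one link...
        absent = [link for link in every_link if link not in candidate]
        if absent and rng.random() < 0.5:
            candidate.add(rng.choice(absent))           # ...and sometimes add another
        if is_feasible(candidate, n) and total_cost(candidate, coords) < total_cost(current, coords):
            current = candidate
    return current


coords = [(0, 0), (1, 0), (2, 0), (0, 1), (1, 1), (2, 1)]  # hypothetical node sites
best = optimize_topology(coords)
print(len(best), round(total_cost(best, coords), 2))
```

Because the loop compares one design against the next rather than examining every possibility, it returns a cheaper feasible network, not a proven optimum.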

The report also recognized the limits of its approach to optimization. Computer power at the time could not calculate an optimal topology beyond twenty nodes using the method of integer programming. As the report explained:

Even if a proposed network can be accurately analyzed, the most economical networks which satisfy all of the constraints are not easily found. This is because of the enormous number of combinations of links that can be used to connect a relatively small number of nodes. It is not possible to examine even a small fraction of the possible network topologies that might lead to economical designs. In fact, the direct enumeration of all such configurations for a twenty node network is beyond the capabilities of the most powerful present day computer.[69]

Though advances in computing improved the capacity for such calculations, computational power set hard limits on ARPANET’s foresight.
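The scale of the problem the report describes can be made concrete with a short calculation. It assumes that every subset of the possible links between nodes counts as a candidate design; most such designs would fail the constraints, but a direct enumeration would still have to consider them.

$$
\binom{n}{2} = \frac{n(n-1)}{2}\ \text{possible links}, \qquad 2^{\binom{n}{2}}\ \text{possible designs}
$$

$$
n = 4:\ \ 2^{6} = 64, \qquad n = 20:\ \ \binom{20}{2} = 190,\ \ 2^{190} \approx 1.57 \times 10^{57}
$$

Each additional node adds $n-1$ possible links and so multiplies the number of candidate designs by $2^{n-1}$, which is why the limit arrives so abruptly.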

Although NAC’s report discussed IMPs’ flow control and network optimization, it did not solve them through computational modeling. Finding the optimal was left to daemons to figure out by themselves. Daemons provided a very different kind of optimization from the services provided by NAC, expressing optimization through their active flow control. It is a small but crucial step from the algorithmic calculations of topological optimization to the active and dynamic calculations of IMPs. ARPANET turned the simulation into an actual experiment. This latter daemonic optimization became a key feature of the ARPANET and later the internet.

Daemonic optimization did not have a clear solution, unlike the economics of NAC. Davies, for his part, continued to advance distributed solutions to network optimization and even suggested much more radical solutions than what became accepted wisdom. In the early 1970s, he proposed that a packet-switching system should always operate with a fixed number of packets. He called this solution an “isarithmic” network.[70] A colleague of Davies at NPL, Wyn L. Price, later explained that the obscure term “isarithmic” combined the Greek terms for “equal” and “number.”[71] An isarithmic network kept a constant number of packets in circulation by capping the total number of packets at any one time. Daemons continuously transmitted the same number of packets, with or without data, to create a constant flow. To send data, daemons swapped empty packets for ones containing data. This radical proposal allowed for congestion control “without employing a control center.” Central control, he wrote, “could be effective but has bad features: the monitoring and control data increases the traffic load and the controller is a vulnerable part of the system.”[72] Running a packet-switching system at full throttle, in retrospect, seems wild compared to much of the literature discussing the economics of bandwidth at the time.[73] Rather than save bandwidth, Davies proposed using it all.
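The invariant at the heart of Davies’s proposal, that the number of packets in circulation never changes, can be sketched as a toy model in Python. The class name, the single shared pool of packets, and the rule that data waits at the edge of the network when no empty packet is free are assumptions made for illustration, not details of Davies’s design.

```python
from collections import deque


class IsarithmicNetwork:
    """Toy model: a fixed number of packets circulates at all times."""

    def __init__(self, total_packets):
        self.empty = total_packets   # circulating packets that carry no data
        self.loaded = deque()        # packets currently carrying data, oldest first
        self.waiting = deque()       # data held at the edge for lack of an empty packet

    def offer(self, data):
        """Swap an empty packet for a loaded one, or hold the data back."""
        if self.empty > 0:
            self.empty -= 1
            self.loaded.append(data)
            return True
        self.waiting.append(data)
        return False

    def deliver(self):
        """Unload the oldest packet (raises IndexError if none is loaded);
        the freed packet is refilled at once if data is waiting, else keeps circulating empty."""
        data = self.loaded.popleft()
        if self.waiting:
            self.loaded.append(self.waiting.popleft())
        else:
            self.empty += 1
        return data

    def in_flight(self):
        """Total packets in the network, loaded or not: the isarithmic invariant."""
        return self.empty + len(self.loaded)


net = IsarithmicNetwork(3)
print([net.offer(m) for m in "abcd"])  # [True, True, True, False]
print(net.deliver(), net.in_flight())  # a 3
```

Congestion control here requires no controller: new data simply cannot enter until an empty packet passes by, which is the sense in which Davies’s scheme avoided “employing a control center.”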

The logic of an isarithmic network is twofold: first, it simplifies calculating the optimal using computer simulation and, second, it solves an information problem for distributed node computers. The latter aspect warrants further discussion, given its relationship to daemonic optimization. Davies suggested that empty packets could share information between node computers. Similar to the ways IMPs later shared their tables for adaptive routing, Davies imagined that empty packets enabled IMPs to better coordinate their flow control. Implicitly, his isarithmic proposal acknowledged the challenges of distributed intelligence. Node computers had to be intelligent for their flow control to be effective. This requirement would become a distinct challenge, as we will see when I discuss packet inspection in chapter 4. A considerable amount has been invested in making daemons more aware.

Davies first made his proposal at the second and seemingly last Symposium on Problems in the Optimization of Data Communications Systems, held in 1971. He later turned the paper into an article published in the 1972 special issue of IEEE Transactions on Communications. These venues proved to be active forums for debate and discussion, and these debates eventually led to Davies’s isarithmic proposal being discarded. The idea, according to Vinton Cerf, “didn’t work out.”[74]

An isarithmic network was just one of many solutions to congestion and the general matter of daemonic intelligence. What was optimal daemonic behavior? How intelligent should daemons be compared to their users? Many of these debates are well known, having been covered as central debates in the history of the internet and as ongoing policy issues. Debates, as discussed in chapter 4, centered on how much intelligence to install in the core of the infrastructure versus leaving it at the ends. Should daemons guarantee delivery or not? Should daemons think of networks more holistically as circuits or focus just on routing packets? (Technically, this is known as the debate between “virtual circuits” and “datagrams.”) Many of these arguments culminated in a contest over international standards, when the Transmission Control Protocol and Internet Protocol (TCP/IP) became the de facto standard instead of the International Telecommunication Union’s X.25.[75]

Researchers on ARPANET collaborated on its design through Requests for Comments (RFCs). Archives of the RFCs contain numerous technical and policy discussions about the design of ARPANET.[76] Topics include visions of network citizenship, whether AT&T would run the system, and if so, whether it would run it as a common carrier, and what communication policy expert Sandra Braman summarizes as debates over “the distinction between the human and the ‘daemon,’ or machinic, user of the network.”[77] How would the network balance the demands of the two? Drawing on an extensive review of the RFCs from 1969 to 1979, Braman explains:

Computer scientists and electrical engineers involved in the design discussion were well aware that the infrastructure they were building was social as well as technical in nature. Although some of that awareness was begrudging inclusion of human needs in design decisions that would otherwise favor the “daemon,” or nonhuman, network users (such as software), at other times there was genuine sensitivity to social concerns such as access, privacy, and fairness in the transmission of messages from network users both large and small.[78]

Braman, in this case, meant daemons in a broad sense beyond the specific internet daemons of this book. Daemons and humans had different needs, and their networks had different tempos. How then could ARPANET strike a balance between the various types of networks running on its infrastructure?

Optimization only became more complex as these debates went on. The flexible ARPA protocols allowed users to use the network for many types of transmission. By 1971, a version of email had been developed and installed on hosts around ARPANET, allowing humans to communicate with one another. The File Transfer Protocol (FTP), on the other hand, focused on allowing users and daemons to exchange computer files.[79] ARPANET even experimented with voice communications, contracting NAC to conduct a study of its economics in 1970.[80] Each application adapted the ARPA protocols to send different kinds of information, and at different rates. How should daemons accommodate these different demands? Could they? These questions, especially those of fairness in the transmission of messages, only compounded as networks proliferated.

The historical nuances of many of these debates are beyond the scope of this project. My story now accelerates a bit in order to get to today’s internet daemons. The proliferation of internet daemons began after BBN won the bid to create the IMP and delivered the first modified Honeywell DDP-516 minicomputers to Kleinrock at UCLA in September 1969.[81] IMPs gradually divided into a host of different network devices, including modems, hubs, switches, routers, and gateways. The concept of packet switching crystallized over the 1970s. During Lawrence Roberts’s time as director of the IPTO from 1969 to 1973, both his work and input from researchers like Cerf, Kahn, Kleinrock, and Louis Pouzin elaborated the version of packet switching that defines the modern internet. The next chapter explores this transformation from ARPANET to the internet by focusing on the changing diagrams that informed the design and operation of internet daemons.

Conclusion

The internet is now an infrastructure possessed. Embedded in every router, switch, and hub—almost every part of the internet—is a daemon listening, observing, and communicating. The IMP opened the network for the daemons waiting at its logic gates. Network administrators realized they could program software daemons to carry out menial and repetitive tasks that were often dull but essential to network operation. Daemons soon infested computer networks and enthralled engineers and administrators who made use of their uncanny services. Among the many tasks carried out by daemons, one stands out: flow control.

Flow control is the purposive modulation of transmission that influences the temporalities of networks simultaneously sharing a common infrastructure. Daemons’ flow control modulates transmission conditions for certain packets and certain networks. It modulates the resonance between nodes in a network and the overall performance of any network. Greater bandwidth increases synchronization and reduced bandwidth decreases it. A daemon might prioritize internet telephony and demote file sharing simultaneously. Networks might run in concert or fall out of rhythm. A daemonic influence changes the relative performance of a network, making some forms of communication seem obsolete or residual compared to emerging or advanced networks. Daemons’ ability to modulate the conditions of transmission helped the internet remediate prior and contemporaneous networks.

The overall effect of internet daemons creates a metastable system in the network of networks. Networks coexist and daemons manage how they share a common infrastructure. This situation invites comparison to what Sarah Sharma calls a “power-chronography.” In contrast to theories of time-space compression, she writes:

The social fabric is composed of a chronography of power, where individuals’ and social groups’ senses of time and possibility are shaped by a differential economy, limited or expanded by the ways and means that they find themselves in and out of time.[82]

Where Sharma focuses on the temporal relations of global capital, the work of daemons establishes temporal relations between networks. This is another way to restate the issue of network neutrality: it involves the optimal way for networks to share infrastructural resources. The uneven distribution of flow causes networks to fall in and out of sync. As discussed in chapter 4, these relations are enacted through optimizations, through how daemons modulate their flow control to create metastability between networks.

These optimizations create power imbalances and inequalities. Different networks’ viability is relational: a successful network is defined by an unsuccessful one. “There can be no sensation of speed without a difference in speed, without something moving at a different speed,” according to Adrian Mackenzie.[83] Paul Edwards comes closest to explaining the comparative value of temporalities when he discusses the SAGE network built by the U.S. Air Force. SAGE linked radar towers with control bunkers in an effort to operationalize the command and control model of military thought. SAGE was a way to control U.S. air space, but its significance lay in being more responsive than the Soviet system.[84] A better model allowed the United States to react more quickly and more precisely than the Soviets’ command and control regime. That is why, as Charles Acland notes on the influence of Harold Innis: “It is essential to remember that spatial and temporal biases of media technologies were relational. They were not inherent a-historical attributes, but were drawn out as technologies move into dominance, always in reciprocity with other existing and circulating technologies and tendencies.”[85] Flow control, then, has an influence over the relations of networks, over how well they comparatively perform. At the time of ARPANET, optimal flow control was a bounded problem contained in the experimental system. As packet switching developed elsewhere and these systems started interconnecting, solutions to flow control expanded, and often-different senses of the optimal came into contact.