3

IMPs, OLIVERs, and Gateways

Internetworking before the Internet

BEFORE THE FIRST INTERFACE MESSAGE PROCESSOR (IMP) had been delivered, former directors of the Information Processing Techniques Office (IPTO) J. C. R. Licklider and Robert Taylor were imagining a world filled with daemons and digital communications (to everyone’s benefit, of course). Their optimism abounded in a lighthearted article entitled “The Computer as a Communication Device” for the business magazine Science & Technology for the Technical Men in Management. Published in 1968, the article filled in a vision for the Advanced Research Projects Agency’s ARPANET that was absent from the IMP Request for Quotations (RFQ) discussed in the previous chapter. The pair imagined a future in which accessible, computer-aided communications would lead to online communities of people across the globe sharing research, collaborating, and accessing new services. People would connect through common interest, not common location. Online, people would access new programs to help them perform everyday tasks. Computers would do more than manage flows. Optimism replaced the optimal.

Licklider and Taylor’s article provides a bridge from the design of the ARPANET discussed in the previous chapter to the contemporary internet and its problems of optimization addressed in the next. This transition involves changing technical diagrams for packet switching and new desires around computer networking. Licklider and Taylor foreshadow these trends, and their article helps me frame these changes conceptually. First, they wanted new computer programs to improve computer-aided communication. Their imaginative paper helps to explain the very real and technical processes that modified what I refer to as the internet’s “diagram,” as discussed in the Introduction. The changing internet diagram created new components and places for daemons to possess the internet. Second, Licklider and Taylor took inspiration from the prospect of new applications of computer-aided communication. The ARPANET quickly became one of many computer infrastructures. More than merely leading to technical innovations, these infrastructures created new kinds of networks that brought together humans and computers in shared times and spaces. These developments in the internet’s diagram eventually brought different networks together, mediated through one global infrastructure called “the internet.”

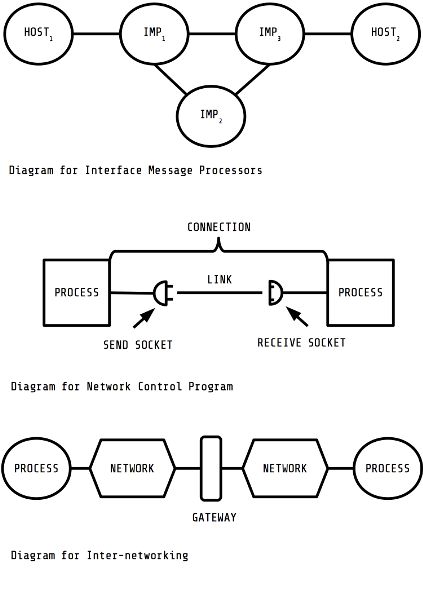

After defining the concept of the diagram in more detail, this chapter traces the changes to the diagram of packet switching as a way to briefly cover the move from ARPANET to the internet. My goal is not to offer a history of computer communication or packet switching; a full history has been told in more depth elsewhere.[1] Rather, my aim is to describe the changing locations and tasks of internet daemons to be discussed further in the next chapter. In doing so, I focus on four iterative diagrams of packet switching. Each involves a change in the diagram and a new kind of daemon. The story begins with the IMP and a largely homogeneous infrastructure before moving to the introduction of the Network Control Program (NCP) at the University of California, Los Angeles. Developed on the hosts to manage their connection to the ARPANET, NCP can be seen as one of the many programs envisioned by Licklider and Taylor. NCP became an essential part of the internet’s diagram by defining its edges. The third diagram moves from a lone packet-switching network to a series of interconnected infrastructures. This move preceded the gradual incorporation of ARPANET as part of a global internet composed of interconnected infrastructures. This new diagram depended on gateways to connect one infrastructure to another. Figure 3 summarizes these first three diagrams.

The growing number of daemons coincides with many innovative designs for computer networking. The second part of the chapter offers an exemplary discussion of some of the key networks that predate today’s internet. These networks, often running on custom hardware, enabled new synchronizations between humans and machines. Outside the military-industrial complex, hobbyist, pirate, and academic networks popularized digital computing, creating what can be seen as new visions of human–computer interaction. Many of these networks converged into the internet. Their unique transmission requirements and demands on daemons’ flow control have created what I describe, following Fred Turner, as a heterarchy of network demands that daemonic optimizations must resolve.

IMPs and OLIVERs: The Changing Diagrams of the Internet

Licklider and Taylor thought that people would need some help being online. Their imagined assistants might be seen as an elaboration of Licklider’s earlier work on man–computer symbiosis (a name that reminds us of the marginalization of women in computing). Just as the present book does, they found inspiration in the work of Oliver Selfridge:

A very important part of each man’s interaction with his on-line community will be mediated by his OLIVER. . . . An OLIVER is, or will be when there is one, an “on-line interactive vicarious expediter and responder,” a complex of computer programs and data that resides within the network and acts on behalf of its principal, taking care of many minor matters that do not require his personal attention and buffering him from the demanding world. “You are describing a secretary,” you will say. But no! Secretaries will have OLIVERs.[2]

The name honored Selfridge, whom they called the “originator of the concept.”[3] Imagining a future digital communication system using Selfridge’s OLIVERs, their ensuing discussion might be seen as a speculative kind of daemonic media studies and a first glimpse of the internet to be built.

At first pass, OLIVERs seem to resemble the IMPs discussed in the previous chapter. A “complex of computer programs and data that resides within the network” sounds remarkably like the computers that became part of the ARPANET infrastructure. Yet, Licklider and Taylor distinguished OLIVERs as interactive programs distinct from computers implementing what they called the “switching function” in store-and-forward communication. Though they do not mention IMPs by name, they include a discussion of message processors in communications as a way to describe the feasibility of computer-aided communication and to introduce their OLIVERs. These other computers gestured toward programs that resided nearer to end users, on their desktop computers or connected to them through a digital communication system. IMPs were the less interesting intermediaries connecting humans and OLIVERs.

Conceptually, IMPs and OLIVERs specify changes in the infrastructural diagram of the internet. By using the term “diagram,” I draw on the work of Michel Foucault, Gilles Deleuze, and Félix Guattari, who use the word in varying ways to describe an abstract arrangement of space and power. One example of a diagram is Foucault’s panopticon, which functions as an optical technology used to build prisons and other infrastructures of surveillance. Where Foucault emphasizes the optical nature of the diagram, Deleuze and Guattari prefer the term “abstract machine” to refer to the logics of control that axiomatically construct infrastructures and other systems.[4] As they write, “the diagrammatic or abstract machine does not function to represent, even something real, but rather constructs a real that is yet to come, a new type of reality.”[5]

Alexander Galloway makes an explicit link between the diagram and his concept of protocol. Using Paul Baran’s diagram comparing centralized, decentralized, and distributed network designs, Galloway argues that the distributed network design is “part of a larger shift in social life,” one that involves “a movement away from central bureaucracies and vertical hierarchies toward a broad network of autonomous social actors.”[6] This shift, theorized by Galloway as protocol, functions by distributing in all nodes “certain pre-agreed ‘scientific’ rules of the system.”[7] Galloway gives the example of the Internet Protocol Suite, which I discuss in detail later, as one protocol. The early work of IMPs might be understood protocologically. Each IMP ran the same code, so their individual performances enacted a shared optimality.

Such homogeneity was short-lived on ARPANET. By 1975, ARPANET had different kinds of IMPs. At the same time, ARPANET became one of a few packet-switching systems. Internet daemons then became embedded in more complex infrastructures. As diagrams, like Galloway’s concept of protocol, function as “a proscription for structure,”[8] attention to the changing diagrams of packet switching tracks the abstractions that created locations and functions for daemons to occupy in the ensuing physical infrastructures.

Diagrams figure significantly in the internet’s history. Early design documents, some included in this chapter, sketch out the abstract design for the infrastructure to come. Donald Davies’s diagram of his digital communication system, included in chapter 2, is one example of a diagram that helped construct the ARPANET. Lawrence Roberts included his own diagram to describe the unbuilt ARPANET at the fateful Association for Computing Machinery conference in Gatlinburg, Tennessee, in 1967. It depicted ARPANET as time-shared computers connected by IMPs to a common carrier. In this chapter, IMPs and OLIVERs refer to the changing components of these diagrams. Licklider and Taylor could be said to be adding to the ARPANET’s diagram with the OLIVER. They not only imagined new programs for computer communication; they imagined a new diagram, one that included OLIVERs, host computers, and people connected by IMPs.

Diagrams interact with the work of daemons. Variations in the internet’s diagram created spaces and possibilities for daemons to flourish. This spatial diagram, however, does not entirely determine the kinds of daemons that occupy its nodes and edges. The next chapter will focus on the daemons that appeared within the internet’s diagram running on interfaces, switches, and gateways.

The Common IMP

The first diagram comes from the reply by Bolt, Beranek, and Newman Inc. (BBN) to the ARPANET RFQ. BBN’s Report No. 1763, submitted in January 1969, includes a few diagrams of the proposed ARPANET. The diagram depicted at the top of Figure 3 is reproduced from a section discussing transmission conditions. This diagram best captures the key components of the early ARPANET and its operational sense of abstraction. ARPANET transmits data between hosts through a series of interconnected IMPs. Hosts and IMPs are roughly the same size on the diagram. Researchers at IPTO initially had as much interest in the technical work of packet switching as in its applications, if not more, as illustrated by the diagram. What is missing from the diagram is as interesting as what is represented. The diagram abstracts the common carrier lines and the telephone systems that provisioned the interconnections between IMPs. The whole underlying common-carrier infrastructure is missing. These components simply become lines of abstraction, assumed to be present but irrelevant to understanding ARPANET.

The emphasis on hosts and IMPs represented ARPANET’s conceptual design at the time. It abstracted activity into two “subnets”: a user subnet filled with hosts and a communication subnet filled with IMPs. These subnets operated separately from one another: the user subnet ignored the work of the IMPs and focused on connecting sockets, while the communication subnet ignored the business of the host computers. Each subnet also contained its own programs. The communication subnet contained daemons running on IMPs. At the user subnet, programs running on hosts handled interfacing with IMPs. Finally, the two subnets used different data formats as well. The user subnet formatted data as messages, while the communication subnet formatted packets. Nonetheless, the subnets depended on each other. Hosts relied on the IMPs to communicate with each other, and the IMPs depended on hosts for something to do.
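The division of labor between messages and packets can be sketched in code. The following Python sketch is a hypothetical model, not BBN’s IMP software: the communication subnet splits a host’s message into numbered packets, and the destination reassembles them regardless of arrival order before handing the message to the host. The packet size and all class and function names are assumptions chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical maximum payload per packet, in bits (an illustrative assumption).
PACKET_BITS = 1008


@dataclass
class Packet:
    message_id: int  # which host message this packet belongs to
    seq: int         # position of this packet within the message
    total: int       # number of packets the message was split into
    payload: str     # a string of "0"/"1" characters standing in for bits


def split_message(message_id: int, bits: str, size: int = PACKET_BITS) -> list[Packet]:
    """Communication subnet: break a host's message into numbered packets."""
    chunks = [bits[i:i + size] for i in range(0, len(bits), size)] or [""]
    return [Packet(message_id, n, len(chunks), chunk) for n, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> str:
    """Destination side: restore the message before handing it to the host."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or len(ordered) != ordered[0].total:
        raise ValueError("message incomplete: packets are missing")
    return "".join(p.payload for p in ordered)


# Usage: a 2,500-bit message becomes three packets that may arrive out of order.
message = "10" * 1250
packets = split_message(7, message)
print(len(packets))                          # 3
print(reassemble(packets[::-1]) == message)  # True
```

The host never sees packets in this model, which mirrors the way the user subnet could ignore the communication subnet’s work.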

Initially a specific piece of hardware, IMPs gradually became an abstract component that served an intermediary function in a packet-switched infrastructure. After delivering the first IMP to Leonard Kleinrock in 1969, the BBN team developed at least four different types of IMP, in part because:

[It had] aspirations for expanding upon and exploiting the Arpanet technology beyond the Arpanet, both elsewhere in the government and commercially. Relatively early on, a slightly modified version of the 516 IMP technology was deployed in the US intelligence community.[9]

BBN developed the IMP 316, the IMP 516, the Terminal IMP, and the Pluribus IMP. Without enumerating the differences, it is important to recognize that the abstract IMP component of the diagram allowed multiple iterations. In other words, the IMP soon stood for many functions, all having to do with in-between-ness.

The IMP then became an intermediary node in the internet’s design, specifying an element between hosts or the “ends” of the communication system. Today, a few different kinds of hardware occupy that intermediary position. Hubs and switches are devices that aggregate and forward packets between parts of the infrastructure. They might be seen as a kind of telephone switching board with multiple inputs and outputs. Hubs and switches differ in their methods of packet forwarding. A hub forwards packets to all its connected lines, whereas a switch forwards each packet only to the line leading toward its destination. Switches function similarly to another kind of hardware device known as a “router.” Routers are designed to learn the topology of a part of the internet and to use that knowledge to send packets toward their destination. Hubs, switches, and routers are a few of the necessary pieces of equipment for packet switching. In addition, a number of other devices known as “middleboxes” exist in this intermediary space. “Middlebox” is a catchall term meant to describe all the intermediary devices that have a purpose other than packet forwarding. As the next chapter discusses, these middleboxes inspect packets and coordinate resources.[10]
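The difference between hubs and switches can be made concrete. In this hedged Python sketch, with hypothetical class names, addresses, and port numbers, a hub repeats every packet on all its other lines, while a switch learns which line each address sits behind from the traffic it observes. Like a real learning switch, the sketch still floods a packet whose destination it has not yet learned.

```python
class Hub:
    """Repeats every packet on every line except the one it arrived on."""

    def __init__(self, ports):
        self.ports = list(ports)

    def forward(self, in_port, src, dst):
        return [p for p in self.ports if p != in_port]


class Switch:
    """Learns which line leads to each address and forwards only there."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.table = {}  # address -> port, learned from observed senders

    def forward(self, in_port, src, dst):
        self.table[src] = in_port  # remember which line the sender is on
        if dst in self.table:
            return [self.table[dst]]  # known destination: a single line
        # Unknown destination: fall back to flooding, as a hub would.
        return [p for p in self.ports if p != in_port]


hub, switch = Hub([1, 2, 3, 4]), Switch([1, 2, 3, 4])
print(hub.forward(1, "A", "B"))     # [2, 3, 4]
print(switch.forward(1, "A", "B"))  # [2, 3, 4]  (B not yet learned)
print(switch.forward(3, "B", "A"))  # [1]
print(switch.forward(1, "A", "B"))  # [3]
```

After one exchange, the switch sends traffic between A and B down a single line, while the hub keeps repeating everything everywhere.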

A firewall is a good example of a middlebox. It is now an essential part of the internet, but one not necessarily included on the original ARPANET diagram. Firewalls developed as a response to the security risks posed by computer networking. Bill Cheswick and Steven Bellovin, early experts on internet security at Bell Labs, described the problem as “transitive trust.” As they explained, “your computers may be secure, but you may have users who connect from other machines that are less secure.”[11] Security experts needed a way to protect their networks from outside threats. The problem became clear after the Morris worm incident on November 2, 1988. Robert Tappan Morris, a computer science student at Cornell University, wrote a program to measure the internet. The worm’s buggy code flooded the internet, overwhelming its infrastructure. Even though Morris was convicted of a crime under the Computer Fraud and Abuse Act, the worm helped to promote technical solutions to transitive trust. Specifically, the worm popularized the usage of firewalls, computers that act as intermediaries between different infrastructures. In a car, a firewall stops engine fires from entering the passenger cabin while still allowing the driver to steer. On networks, firewalls block threats while permitting legitimate traffic to continue. The first firewalls relied on destination addresses and port numbers in the lower layers to identify threats.[12] Digital Equipment Corporation sold the first commercial firewall on June 13, 1991.[13] Firewalls subsequently became a mainstay of any networking infrastructure.
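A filter that relies on addresses and port numbers can be sketched as a first-match rule table. This Python sketch uses the standard `ipaddress` module; the rule table, the documentation-range addresses, and the `filter_packet` name are hypothetical, and the sketch does not model DEC’s product or any particular early firewall.

```python
from ipaddress import ip_address, ip_network

# Hypothetical rules: (destination network, destination port or None for any, action).
# Rules are checked in order and the first match decides.
RULES = [
    (ip_network("192.0.2.10/32"), 25, "allow"),  # let mail (SMTP) reach one server
    (ip_network("192.0.2.0/24"), 23, "deny"),    # refuse remote logins (Telnet)
    (ip_network("192.0.2.0/24"), None, "deny"),  # refuse anything else inbound
]


def filter_packet(dst: str, dst_port: int, rules=RULES) -> str:
    """Return "allow" or "deny" using only the destination address and port."""
    addr = ip_address(dst)
    for network, port, action in rules:
        if addr in network and (port is None or port == dst_port):
            return action
    return "allow"  # traffic not bound for the protected network passes


print(filter_packet("192.0.2.10", 25))    # allow
print(filter_packet("192.0.2.10", 23))    # deny
print(filter_packet("192.0.2.99", 80))    # deny
print(filter_packet("198.51.100.7", 80))  # allow
```

Because it never looks past the lower-layer headers, a filter like this cannot tell a legitimate mail message from a malicious one sent to the same port.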

As much as the top diagram in Figure 3 illustrates a packet-switching infrastructure, it misses the activity happening on the host computers. IPTO initially proposed the ARPANET as a way to connect specialized computer infrastructures. Yet, the diagram does not reflect the diversity of the computer systems on the ARPANET, which included computers manufactured by Digital Equipment Corporation and IBM, in addition to different operating systems. This is not to say that ARPANET developers ignored the host computers, but as Janet Abbate argues, users had been ARPANET’s “most neglected element.”[14] Instead, a second diagram adds a new site of activity on the ARPANET.

Network Control Programs and Transmission Control Programs

Although IPTO encouraged other institutions to use its ARPANET, each site had to develop its own local programs to interface with the IMP, which led to a lot of hurried work.[15] The second diagram in Figure 3 is one output of that work. It is adapted from a paper by C. Stephen Carr, Stephen Crocker, and Vinton Cerf entitled “Host-Host Communication Protocol in the ARPA Network” published in 1970.[16] The paper proposed a new kind of protocol for ARPANET to enable hosts to better communicate with each other in the user subnet. Instead of communicating with different computers, the Host-to-Host Protocol specified how a host could implement a program that would act as a local interpreter. Carr, Crocker, and Cerf called this new component the “Network Control Program” (NCP).[17]

The NCP represents the first step in the process of separating networks from host computers. It divided the user subnet between host computers and processes running on them. Since many hosts ran time-sharing systems, the NCP needed to target messages for different users and programs running simultaneously. The NCP differentiated messages by including a “socket number” (later called a “port number”), along with other control data, in each message header. This number was made up of a host number, a user number, and “another eight-bit number” used to identify separate user processes.[18] When a local IMP reassembled the message from its packets and sent it to the host, the NCP read this header to route the message to the right local socket. Through sockets, a user could establish “his own virtual net consisting of processes he had created”:

This virtual net may span an arbitrary number of HOSTs. It will thus be easy for a user to connect his processes in arbitrary ways, while still permitting him to connect his processes with those in other virtual nets.[19]

This quote foreshadows the proliferation of networks on the internet. Through sockets, daemons distinguished different networks on the shared infrastructure.
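The socket number described above can be sketched as a packed integer. In this hypothetical Python sketch, only the final “another eight-bit number” comes from the text; the widths of the host and user fields, and the `deliver` helper standing in for an NCP’s local routing, are assumptions for illustration.

```python
from __future__ import annotations

# Field widths: the 8-bit final field follows the text; HOST_BITS and
# USER_BITS are illustrative assumptions.
HOST_BITS, USER_BITS, AEN_BITS = 8, 24, 8


def make_socket(host: int, user: int, aen: int) -> int:
    """Pack host, user, and 'another eight-bit number' into one socket number."""
    for value, bits in ((host, HOST_BITS), (user, USER_BITS), (aen, AEN_BITS)):
        if not 0 <= value < 2 ** bits:
            raise ValueError(f"{value} does not fit in {bits} bits")
    return (host << (USER_BITS + AEN_BITS)) | (user << AEN_BITS) | aen


def split_socket(sock: int) -> tuple[int, int, int]:
    """Recover the three fields from a packed socket number."""
    aen = sock & (2 ** AEN_BITS - 1)
    user = (sock >> AEN_BITS) & (2 ** USER_BITS - 1)
    host = sock >> (USER_BITS + AEN_BITS)
    return host, user, aen


def deliver(sock: int, local_host: int, processes: dict) -> str | None:
    """Hypothetical NCP step: hand a message to the local process bound to the socket."""
    host, user, aen = split_socket(sock)
    if host != local_host:
        return None  # not addressed to this host
    return processes.get((user, aen))


# One user running two processes on host 3, told apart by the final field.
processes = {(1001, 1): "editor", (1001, 2): "mail"}
print(deliver(make_socket(3, 1001, 2), 3, processes))  # mail
```

The final field is what lets one user on a time-shared host run several processes, each reachable separately, which is the seed of the “virtual net.”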

The second diagram reflects the growing importance of the end computers in the packet-switching systems. Much like Licklider and Taylor’s lack of interest in switching computers, the diagram generalizes both the infrastructural connection and the host computer. Neither appears on the diagram. Instead, the diagram depicts processes representing the applications and networks running on the ARPANET. Two lines connect processes in the diagram. The top line refers to the communication subnet, while the link line below it illustrates NCPs’ work at the user subnet to connect processes to each other. Neither line depicts the computers, IMPs, and telephone lines that enable this communication. These parts of the ARPANET had faded into infrastructure. The omission of the IMP from the diagram had lasting implications for packet switching. The IMP was subsumed into the infrastructure as an assumed intermediary, while the NCP took on more prominence.

This emphasis on the ends endured in the first internet protocols. By the early 1970s, researchers at IPTO turned from interconnecting time-sharing computers to interconnecting packet-switching infrastructures.[20] The NCP and its new role in creating virtual nets directly informed the development of the internet protocols. In 1974, Cerf and Robert Kahn published an article entitled “A Protocol for Packet Network Intercommunication.” The protocol sought to develop standards and software to ease communication across separate packet-switching infrastructures.

The Transmission Control Program was a key part of their protocol: it replaced the NCP and had greater responsibility over transmission conditions. It had to. Cerf and Kahn argued that this program was in the best position to understand and make decisions about transmission. Almost ten years later, the Transmission Control Program evolved into the Transmission Control Protocol (TCP), part of the Internet Protocol Suite (TCP/IP). On January 1, 1983, also known as “Flag Day,” TCP/IP replaced the NCP as the ARPANET standard.

In many ways, TCP was a revolution, flipping the network design on its head. TCP did not just conceptually replace NCP; it displaced the IMP as the head of ARPANET. Interconnected infrastructures could know their own domain, but not necessarily the whole of the internet. Optimal communication required IMPs and other middleboxes to leave the important decisions to the ends. This arrangement was one of a few ways Cerf and Kahn avoided centralization. They rejected, for example, a suggestion by one of the IMP developers at BBN to build a centralized registry of port numbers. Instead, Cerf and Kahn argued, their solution preserved the premise that “interprocess communication should not require centralized control.”[21] These principles were further formalized into what has become known as the End-to-End principle (E2E), discussed in the next chapter.[22] The internet became the outcome of interconnecting packet-switching infrastructures. This metainfrastructure, a network of packet-switching networks, involved another iteration of the internet’s diagram and one last component.

Gateways and an Inter-network

In the same paper that introduced the Transmission Control Program, Cerf and Kahn introduced gateways. Figure 3 includes a third diagram adapted from their 1974 paper. This diagram again depicts the act of transmission, but here a message travels across multiple packet-switching infrastructures. As in the previous diagram, processes, referring to the specific applications running on a computer, are the least abstracted element. They also continue to occupy the important ends of the network. Processes connect to each other across two packet-switching infrastructures depicted as hexagons. As in prior diagrams, the infrastructure has been abstracted and processes assume their messages will be transmitted.

The diagram generalizes packet-switching infrastructure as another component just when ARPANET was becoming just one of many infrastructures of its kind. Many of these infrastructures came online after ARPANET. Donald Davies started working on one at the National Physical Laboratory in 1967. Known as the NPL Data Communication Network, it came online in 1970.[23] Other notable packet-switching infrastructures include the CYCLADES project, built in France starting in 1972 and led by a major figure in packet switching, Louis Pouzin.[24] The CYCLADES project, named after a group of islands in the Aegean Sea, aimed to connect the “isolated islands” of computer networks.[25] Bell Canada, one of the country’s largest telecommunications companies, launched the first Canadian packet-switching service in 1976.[26] BBN launched a subsidiary in 1972 to build a commercial packet-switching service known as TELENET (whose relationship to ARPANET was not unlike that of SABRE’s to SAGE). By 1973, Roberts had joined as president, and construction began on the commercial infrastructure in 1974.[27] Any of these projects could be represented by the same hexagon.

A new device known as a “gateway” acted as a border between these packet-switching infrastructures, routing packets between them. To enable them to do so, Cerf and Kahn refined an internet address system to allow gateways to locate specific TCP programs across packet-switching infrastructures. Whereas ARPANET needed only to locate a process on a host, this internet had to locate processes on hosts in separate infrastructures. Cerf and Kahn proposed addresses consisting of eight bits to identify the packet-switching infrastructure, followed by sixteen bits to identify a distinct TCP in that infrastructure. Gateways read this address to decide how to forward the message:

As each packet passes through a GATEWAY, the GATEWAY observes the destination network ID to determine how to route the packet. If the destination network is connected to the GATEWAY, the lower 16 bits of the TCP address are used to produce a local TCP address in the destination network. If the destination network is not connected to the GATEWAY, the upper 8 bits are used to select a subsequent GATEWAY.[28]

According to this description, gateways operated on two planes: internally forwarding messages to local TCPs and externally acting as a border between other infrastructures.
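The forwarding rule in Cerf and Kahn’s description can be sketched directly. In this hedged Python sketch, a 24-bit TCP address holds an 8-bit network ID in its upper bits and a 16-bit local TCP identifier in its lower bits, as the passage describes; the `attached` set and `next_gateway` table are hypothetical stand-ins for whatever a real gateway knew about its neighbors.

```python
from __future__ import annotations


def route(tcp_address: int, attached: set[int], next_gateway: dict[int, str]) -> tuple:
    """Decide what a gateway does with a packet bound for tcp_address."""
    if not 0 <= tcp_address < 2 ** 24:
        raise ValueError("expected a 24-bit address")
    network_id = tcp_address >> 16    # upper 8 bits: destination network
    local_tcp = tcp_address & 0xFFFF  # lower 16 bits: a TCP within that network
    if network_id in attached:
        # Destination network is connected: produce a local TCP address there.
        return ("deliver", network_id, local_tcp)
    # Otherwise the network ID alone selects a subsequent gateway
    # (an unknown network raises KeyError in this sketch).
    return ("forward", next_gateway[network_id])


# A gateway joining networks 1 and 2, with network 9 reachable via another gateway.
attached, next_hop = {1, 2}, {9: "gateway-B"}
print(route((2 << 16) | 300, attached, next_hop))  # ('deliver', 2, 300)
print(route((9 << 16) | 42, attached, next_hop))   # ('forward', 'gateway-B')
```

The two branches correspond to the two planes described above: local delivery inside a connected infrastructure and handoff across a border to another gateway.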

The “Fuzzball” was one of the first instances of a gateway and an example of how daemons in the internet developed to occupy these new positions in the diagram. David Mills, the first chair of the Internet Architecture Task Force, developed what became known as the “Fuzzball” to manage communications on the Distributed Communication Network based at the University of Maryland. Mills explained in a reflection on the Fuzzball’s development that it had been in a “state of continuous, reckless evolution” to keep up with changing computer architectures, protocols, and innovations in computer science.[29] The Fuzzball was much like an IMP. It was a set of programs that ran on specialized computer hardware designed to facilitate packet-switching communication. The Fuzzball also implemented multiple versions of packet-switching protocols, including TCP/IP and other digital communication protocols. Since they implemented multiple protocols, Fuzzballs could function as gateways, translating between two versions of packet switching.[30]

The gateway, then, completes a brief description of the components of the internet’s diagram. Starting at the edges of the infrastructure, the diagram includes processes or local programs. These processes connect through intermediary infrastructures filled with switches, hubs, routers, and other middleboxes. In turn, these intermediary infrastructures connect through gateways, a process that is now called “peering.” Together, these three general categories function as an abstract machine creating new internet daemons. Yet, the diagram of the internet has undergone a few noteworthy modifications since the 1974 proposal by Cerf and Kahn.

The Internet

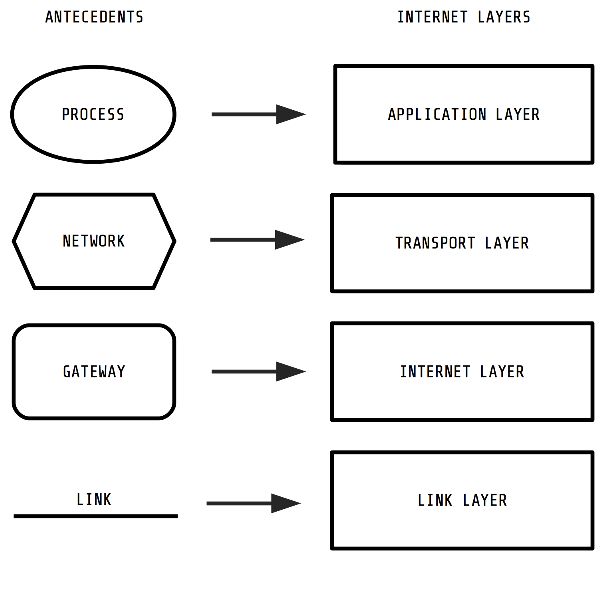

Today’s internet incorporates all these prior diagrams: it includes processes, intermediaries, and gateways. These earlier diagrams can be seen to directly inspire the layered diagram commonly used to describe the internet, depicted in Figure 4. Layering is a computer engineering strategy to manage complexity by separating activities into hierarchical layers. Each layer offers “services to higher layers, shielding those layers from the details of how the offered services are actually implemented.”[31] ARPANET’s communication and user subnets were nascent articulations of this layering approach, more as a way to aggregate functions than as a strict implementation of layers. The internet’s layers started as the ARPANET’s subnets. NCP and TCP bifurcated the user subnet so the host acted as an intermediary for specific users or applications to connect on the ARPANET. Gateways subsequently positioned ARPANET as part of a global system of interconnected packet-switching infrastructures, gesturing toward an internet layer.

The internet’s diagram has four layers according to version 4 of TCP/IP. From highest to lowest, they are: the application layer, the transport layer, the internet layer, and the link layer. The application layer contains processes or, in today’s terms, applications running on home computers engaged in computer networking. It includes programs for email, the File Transfer Protocol (FTP), and many other “virtual nets” imagined by Carr, Crocker, and Cerf. The next layer, transport, refers to the location of what became of the Transmission Control Program. The transport layer now includes programs using TCP (now the Transmission Control Protocol) and the User Datagram Protocol (UDP). Transport-layer daemons assemble packets and reassemble messages, and they also handle addressing packets using the descendants of sockets or ports. TCP establishes connections between internet nodes, whereas UDP is used more for onetime, unique messages. The internet layer is the domain of intermediaries such as routers, switches, hubs, and gateways. It includes both daemons that forward packets within an infrastructure and daemons that interconnect infrastructures. Finally, the link layer consists of a mix of ARPANET’s transmission media: its modems and leased telephone lines. Daemons on this layer handle the transmission of packets accordingly for each particular medium. Where IMPs dealt with modems and telephone lines, contemporary daemons work with Ethernet, copper, coaxial, and fiber lines, which all require custom-encoded protocols to route information.[32]
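Layering is commonly implemented through encapsulation: each layer wraps whatever it receives from the layer above in its own header and treats the contents as opaque. The Python sketch below uses simplified placeholder headers (`TCP|`, `IP|`, `ETH|`) that are assumptions; real headers are binary structures carrying ports, addresses, and medium-specific details.

```python
# Placeholder headers, listed from the transport layer down to the link layer.
LAYERS = [b"TCP|", b"IP|", b"ETH|"]  # transport, internet, link


def encapsulate(data: bytes) -> bytes:
    """Sending side: each lower layer prepends its header to the unit from above."""
    for header in LAYERS:
        data = header + data
    return data


def decapsulate(frame: bytes) -> bytes:
    """Receiving side: each layer strips its own header and passes the rest upward."""
    for header in reversed(LAYERS):
        if not frame.startswith(header):
            raise ValueError(f"expected header {header!r}")
        frame = frame[len(header):]
    return frame


frame = encapsulate(b"hello")  # application-layer data
print(frame)                   # b'ETH|IP|TCP|hello'
print(decapsulate(frame))      # b'hello'
```

Each layer reads only its own header, which is how layering shields higher layers from the details of the lower ones.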

Another way to interpret the internet’s diagram is the Internet Protocol (IP) addressing system, which is used to specify any location on the internet. Under IPv4, which IPv6 has yet to fully replace, every location on the internet has a 32-bit address, usually written as four decimal numbers between 0 and 255 separated by dots, such as 255.255.255.255. Classless Inter-Domain Routing (CIDR) notation adds a suffix giving the number of leading bits that identify the network, as in 192.0.2.0/24.[33] IP addresses are the latest iteration of Cerf and Kahn’s network number and TCP identifier discussed in the prior section. The first bits refer to the network number, and the latter bits refer to the local computer. Internet addressing relies on the Internet Assigned Numbers Authority (IANA), which maintains and assigns blocks of IP addresses. IANA allocates these blocks to regional registries, which in turn assign them to the separately managed parts of the internet known as autonomous systems. As of spring 2018, there were over 60,000 autonomous systems on the internet.[34] IANA distinguishes these different infrastructures through Autonomous System Numbers (ASNs). Daemons rely on ASNs and IP addresses to ensure that data reaches its destination. A packet might first be sent to the autonomous system associated with its address, then to the part assigned to its network number, and then finally to its local computer.
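The split between network bits and local bits can be explored with Python’s standard `ipaddress` module. The addresses below come from a range reserved for documentation, and the `split_address` helper is hypothetical; the sketch shows only the arithmetic of a prefix, not how IANA or any registry actually assigns blocks.

```python
import ipaddress


def split_address(cidr: str, address: str) -> tuple[str, int]:
    """Return the network portion and the local (host) portion of an address."""
    network = ipaddress.ip_network(cidr)
    addr = ipaddress.ip_address(address)
    if addr not in network:
        raise ValueError(f"{address} is not in {cidr}")
    host_part = int(addr) & int(network.hostmask)  # bits left over after the prefix
    return str(network.network_address), host_part


net = ipaddress.ip_network("192.0.2.0/24")
print(net.prefixlen, net.netmask, net.num_addresses)  # 24 255.255.255.0 256
print(split_address("192.0.2.0/24", "192.0.2.77"))    # ('192.0.2.0', 77)
print(int(ipaddress.ip_address("255.255.255.255")) == 2 ** 32 - 1)  # True
```

The prefix length plays the role of Cerf and Kahn’s fixed eight-bit network field, except that CIDR lets the boundary between network and local bits vary from block to block.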

Layers and the IP address both express a diagram constructing the internet. This abstraction also informs the development of daemons as components of the diagram. Each layer of the internet fills with daemons, a task now largely carried out by the internet infrastructure industry. The origin of one of the biggest firms, Cisco Systems, is easy to follow from this history. The aforementioned Fuzzball functioned as a gateway because it implemented multiple protocols; it was one outcome of research into multiprotocol routers. Researchers at Stanford University, home of the Stanford Research Institute and a node in the ARPANET, developed their own multiprotocol router to connect various departments to each other. This router became known as the “Stanford Blue Box.” By 1982, it could interconnect ARPANET with Xerox Alto computers using Xerox Network Systems, as well as other protocols like CHAOSnet.[35] While accounts differ on how exactly the Blue Box escaped the labs of Stanford, it became the first product sold by Cisco Systems, founded in 1984.[36] Today, Cisco Systems is the largest player in the $41-billion networking-infrastructure industry.[37]

The internet, by its very design, supports different kinds of networks. Interconnecting brought together different ideas of computer-mediated communication and their supporting infrastructures. To understand the newfound importance of the internet, it is worth addressing the diversity of networks that developed contemporaneously with the internet beyond time-sharing and real-time. These networks help illuminate the cultural dimension of computer networks prior to the internet, since they acted as infrastructures that synchronized humans and machines around common times, as well as around common visions of a computer network. The internet later remediated these network assemblages and their network cultures.[38]

A Brief History of Networks

As Licklider and Taylor distinguished OLIVERs from IMPs, they made an interesting comment about communication models. The human mind, according to them, is one communication model, but not the only one. In fact, the mind has some shortcomings. Licklider and Taylor listed a few, including that it has “access only to the information stored in one man’s head” and that it could “be observed and manipulated only by one person.”[39] Human communication shared models between people only imperfectly. A computer, they suggested, might be a better way to share models, in part because it was “a dynamic medium in which premises will flow into consequences.”[40] Their article then discusses how a computer might change the nature of work. For example, “at a project meeting held through a computer, you can thumb through the speaker’s primary data without interrupting him to substantiate or explain.”[41] They go on to speculate that computer communication might enable new forms of interaction beyond the boardroom within “on-line interactive communities” formed on a basis “not of common location, but of common interest.”[42] They imagined online communities focused on “investment guidance, tax counseling, selective dissemination of information in your field of specialization, announcement of cultural, sport, and entertainment events that fit your interests, etc.”[43] Certainly, this is a telling list of the concerns of two members of the academic elite, but it’s also an early attempt to imagine a computer network.

Licklider and Taylor’s discussion of online interactive communities and models offers a chance to revisit the idea of computer networks. As discussed in chapter 1, networks are assemblages of humans and machines sharing a common temporality. This temporality includes pasts, presents, and futures. Licklider and Taylor gesture to all three. Online communities would keep historical records on computers, afford interactive chats, and allow for computer modeling to predict behavior. These specific applications, however, miss the more evocative aspects of the discussion of models by Licklider and Taylor. Their notion of the shared understandings of communication resembles the discussion of social imaginaries in the information society by the influential political economist of new media Robin Mansell. Where Mansell uses social imaginaries to describe broader visions of the internet as an information commons or as an engine of economic growth, networks might also be seen to include imaginaries shared by their participants. These imaginaries inform networks, especially their senses of time and space.[44]

The next section discusses a few notable examples of this technocultural side of networking. When developed, many of these networks were both new forms of synchronization and novel developments in computer hardware. As the internet became the ubiquitous infrastructure for digital communication, these networks came to run on the common infrastructure. A brief overview of some of these networks clarifies the challenge daemons face today. The internet’s infrastructure technically converged these networks without dramatically rearticulating their technocultural imaginaries. Daemons then face the difficult tasks of accommodating these different networks while finding an optimal way to manage their infrastructures.

Digest Networks

Some of the first networks would be online discussions oriented around interest, just as suggested by Licklider and Taylor. Two important forums arose in the computer science departments of major research universities: USENET in 1979 and BITNET in 1981. USENET grew out of a collaboration between Duke University and the University of North Carolina and worked by mirroring data between computers. It enabled its users to interact in threaded discussions organized by common topics. By keeping these computers in sync, USENET simulated a shared public, even though computers technically connected only in short updates. Hosts existed in the United States, Canada, and Australia. Early USENET systems also connected with other networks through gateways administered in St. Louis, Missouri.[45] Gradually, USENET offered mail services as well as rich discussion of common interests in newsgroups. Another major network, BITNET (“Because It’s Time NETwork”), was developed by researchers at City University of New York and Yale University in 1981. Like USENET, BITNET offered a discussion forum for programmers in computer science departments. In 1984, the BITNET system had 1,306 hosts across the globe, including nodes in Mexico and Canada (where it was called NetNorth).[46] Eventually, when both networks merged with the internet, BITNET became the first email discussion groups and USENET became newsgroups. Both are still common computer networks today.[47] The World Wide Web owes much to this threaded discussion and digest format. Debates about free speech and online participation that animated USENET reverberate in concerns about platform governance today.[48]
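The mirroring that let USENET simulate a shared public can be sketched as a toy model. The `Host` class, the `sync` function, and the message-ID strings below are hypothetical, and the sketch ignores the dial-up transport and scheduling that real hosts relied on; it shows only the idea that each short session copies whatever articles one side lacks, so repeated exchanges leave every connected host holding the same set.

```python
from dataclasses import dataclass, field

@dataclass
class Host:
    """A hypothetical news host holding articles keyed by a unique message ID."""
    name: str
    articles: dict[str, str] = field(default_factory=dict)

    def post(self, message_id: str, text: str) -> None:
        self.articles[message_id] = text

def sync(a: Host, b: Host) -> int:
    """One short exchange: each host receives the articles it lacks.

    Returns how many articles were copied in total."""
    copied = 0
    for src, dst in ((a, b), (b, a)):
        for message_id, text in src.articles.items():
            if message_id not in dst.articles:  # skip anything already mirrored
                dst.articles[message_id] = text
                copied += 1
    return copied

duke, unc, third = Host("duke"), Host("unc"), Host("third")
duke.post("<1@duke>", "hello from duke")
unc.post("<1@unc>", "hello from unc")
print(sync(duke, unc))   # both sides now hold both articles
print(sync(duke, unc))   # nothing new to copy
print(sync(unc, third))  # a host that never talked to duke still gets its article
```

The last exchange carries the point: articles reach hosts that never connected to their origin, which is how periodic, brief connections could add up to something that looked like one continuous discussion.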

Bulletin Board Systems and Amateur Computer Networking

Bulletin Board Systems (BBS) hacked together personal computers and telephone lines to form what computer historian Kevin Driscoll calls the ancestor of social media. The “great snow of Chicago” on January 16, 1978, gave Ward Christensen and Randy Suess enough time to create the first computer Bulletin Board System. One at a time, users dialed into a modem hacked to boot into a custom program that functioned like a home screen. There, users could select articles to read or post a message for other users.[49] With the arrival of cheap personal modems in 1981,[50] BBSs became an accessible form of computer networking. These ad hoc networks were a way for citizens to start their own local discussion boards and exchanges. By the 1990s, some 60,000 BBSs operated in the United States, and thousands more likely existed internationally.[51]

Culturally, BBSs helped popularize computer communication as a form of virtual community. The Whole Earth ’Lectronic Link (WELL) was perhaps the most famous BBS, and it exemplified a particular strain of optimism that animated computer networks. WELL functioned as a simple online-chat BBS in the San Francisco Bay Area beginning in 1985. Monies from the defunct Whole Earth Catalog started WELL. More than just a BBS, WELL carried the countercultural ethos of Stewart Brand’s Whole Earth Catalog into the digital age. The network charged a modest fee in comparison to commercial services like CompuServe. Although the site had only a few thousand users, WELL eased the transition from counterculture to cyberculture, according to historian Fred Turner. Countercultural groups like the “new communalists” embraced computers as a means of self-actualization and independence. These ideas, inspired by experiences on WELL, found their way into the trend-setting magazine of early internet culture, Wired.[52]

Many of the key expressions of the social value of computer networks came from WELL. WELL and computer networks in general came to be seen by members of the board as a transformational social force. Indeed, the founders of the leading internet rights organization, the Electronic Frontier Foundation (EFF), met on WELL. Mitch Kapor, one of those founders, states: “When what you’re moving is information, instead of physical substances, then you can play by a different set of rules. We’re evolving those rules now! Hopefully you can have a much more decentralized system, and one in which there’s more competition in the marketplace.”[53] Parts of WELL exemplified a new mixture of free-market rhetoric and decentralized computing, an approach known as the “Californian ideology.”[54] WELL also inspired Howard Rheingold to coin the term “virtual community,” which he defines as “social aggregations that emerge from the Net when enough people carry on those public discussions long enough, with sufficient human feeling, to form webs of personal relationships in cyberspace.”[55] The term helped frame the internet to the public as a friendly place for people to connect.[56]

BBS culture also gave rise to the antecedents of Peer-to-Peer (P2P) file-sharing and computer pirate cultures.[57] The counterculture of Stewart Brand, the Merry Pranksters, and the Yippies inspired generations of phone and computer hackers who viewed exploiting the phone system as a form of political dissent.[58] These groups were important pioneers in the development of early computing and computer networks. Many of the early phone hackers, or “phreakers,” started BBSs to share their exploits and techniques. These groups often freely traded stolen data, and thus attracted the name “pirates”: a term that the music industry once applied to people who made tape duplications of music.[59] “What does an underground board look like?” asked Bruce Sterling in a profile of early pirate boards. He explained:

It isn’t necessarily the conversation—hackers often talk about common board topics, such as hardware, software, sex, science fiction, current events, politics, movies, personal gossip. Underground boards can best be distinguished by their files, or “philes,” pre-composed texts which teach the techniques and ethos of the underground.[60]

Hackers and pirates traded “warez” on these BBSs or bragged about their exploits in text files or digital copies of the hacker publication Phrack. Motivating these activities was a belief that digital systems could copy information indefinitely. Despite their sense of freedom, these BBSs were marked by secrecy and elitism, as only the best pirates could operate in the top networks. Prestige and status had more currency than the value of peers sharing information freely, although iterations of the piracy movement did become more accessible.[61] Today, these pirate networks endure as P2P file-sharing, a popularization of this computer underground.

International and Activist Networks

FIDONET was a worldwide network of BBSs. In 1983, an unemployed computer programmer named Tom Jennings began designing a cheap communication system using computers and telephone lines. Eventually, Jennings’s system evolved into the FIDO BBS, named after the mongrel of a machine hosting the server. Unlike home BBSs, FIDO hosts were designed to share data, and Jennings released its code as free software. Anyone could use the code to create a FIDO BBS, provided it was not for commercial use. The decision to release the code mirrored Jennings’s anarchist politics. By 1990, FIDONET connected ten thousand local nodes in thirty countries. The cheap network attracted activists and development workers in Latin America, Africa, and Russia. Though community networks like FIDONET eventually assimilated with the internet, the spirit of their organizations and cultures endured in creating community-based internet service providers (ISPs) or new online solidarity networks.[62]

These different networks (in both the technical and cultural sense) have distinct temporalities that continue on the internet today. The rhythms of BITNET mailing lists and USENET newsgroups continue online, as well as in the millions of forums for public discussion, such as Reddit. Hackers still communicate using Internet Relay Chat (IRC) in the many chat rooms across the internet. They also reside on darknets like “The Onion Router” (TOR), putting up with slow-loading web pages for the sake of anonymity.[63] They trade files on P2P networks. These large file-sharing networks are a popular form of the old pirate boards. The virtual communities of WELL endure today in social media. Users log on through the web or mobile apps to chat, share, and like others’ posts.

While this short history cannot account for all the computer networks that found their way online, it does outline the problem for daemons slotted into different parts of the internet. They no longer faced the binary challenge of accommodating real-time and time-sharing networks, because now daemons had to accommodate many networks. Conflict loomed. That fact became quickly apparent with the privatization and commercialization of the ARPANET’s successor, the National Science Foundation Network (NSFNET), starting in 1991.

Convergence

While the internet had developed many infrastructures, they eventually collapsed into one using TCP/IP, the Internet Protocol Suite.[64] The reasons that TCP/IP succeeded over other internetworking protocols are still debated. The factors are neither casual nor simple, but it is helpful to consider the political and economic situation. ARPANET benefited from the tremendous funding of military research during the Cold War.[65] John Day explained the most important factor in a curt but effective summary: “The Internet was a [Department of Defense] project and TCP was paid for by the DoD. This reflects nothing more than the usual realities of inter-agency rivalries in large bureaucracies and that the majority of reviewers were DARPA [or ARPA] contractors.”[66] The political context also helps situate the conditions of interconnection.

In 1991, the U.S. Congress’s High Performance Computing Act, often called the “Gore Bill” (after its author, then-Senator Al Gore Jr.), mandated that different institutions and networks be combined into one infrastructure. Where his father imagined a national highway system, Al Gore Jr. proposed an information superhighway. The ARPANET’s successor, the NSFNET, was a leading contender to be the backbone for this unified infrastructure, but its Acceptable Use Policy presented a problem. The policy banned any commercial applications, even though the National Science Foundation was already outsourcing its network management to commercial providers.[67] After the Gore Bill passed, the NSF began getting pressure from new customers to offer a unified digital communication infrastructure and to liberalize its Acceptable Use Policy to allow commercial traffic. The NSF gradually privatized its infrastructure. By 1997, five private infrastructure providers were responsible for running the internet’s infrastructure in the United States.[68] The cost of realizing the dream of the Gore Bill was the consolidation of network diversity into a single network largely under the ownership of only a few parties.

Conclusion

The internet formed out of the merger of these past networks and infrastructures, but the lack of a definition of an optimal network in its technical design exacerbated tensions between the different remediated networks. Together, these competing networks resemble the many users of WELL, producing what Turner calls a “heterarchy”: “multiple, and at times competing value systems, principles of organization, and mechanisms for performance appraisal.”[69] Heterarchy complicates optimization by creating competing value systems to optimize for. (Benjamin Peters, in a very different cultural and political context, found that the heterarchy of the Soviet state prevented the formation of its own national computer infrastructure.)[70] This heterarchy endures on the contemporary internet, though it is complicated by the proliferation of networks. Their competing values and priorities are latent in “net neutrality” debates, since networks disagree about how the internet should be managed. Two of these approaches are discussed in the next chapter.

The lack of a clear way to define the optimal—or even an acknowledgment of the problem—haunts internet daemons. In every clock cycle and switch, daemons have to find a metastability between all these networks. Piracy, security, over-the-top broadcasting, and P2P telephony all have become flashpoints where tensions between the various networks converge on the internet. The internet infrastructure struggles to support all these temporalities. Media conglomerates in both broadcasting and telecommunications have been particularly at odds internally over how to manage these issues. Should their approach to the internet fall within a temporal economy of broadcasting or telecommunications? Should networks police their traffic? The situation has only worsened as ISPs have faced a bandwidth crunch for on-demand movies, streaming video, multiplayer games, and music stores, not to mention the explosion in illegal file sharing. The crunch, in short, requires better management of a scarce resource.

The inception of the internet has led to many conflicts over its optimization, but none perhaps as fierce and as decisive as the one over the emergence of P2P file sharing, a new form of piracy. The successful introduction of file sharing offered a mode of transmission that disrupted the conventional broadcasting temporal economy. At first, the associated media industries tried to sue P2P out of existence, but when that failed, they moved increasingly to flow control to contain threats. Technology, instead of law, could solve the problem of file sharing, an example of a technological fix in which people attempt to solve social problems through technology.[71] These fixes and their daemons will feature in the debates explored in the following chapters.