Introduction

Isabelle F. Peschard and Bas C. van Fraassen

The philosophical essays on modeling and experimenting in this volume were originally presented at three workshops on the experimental side of modeling at San Francisco State University in 2009–2011. The debates that began there continue today, and our hope is that they will make a difference to what the philosophy of science will be. As a guide to this collection the introduction will have two parts: an overview of the individual contributions, followed by an account of the historical and methodological context of these studies.

Overview of the Contributions

In this overview we present the individual essays of the collection with a focus on the relations between them and on how they can be read within a general framework for current studies in this area. Thus, first of all, we do not view experiments simply as a tribunal for producing the data against which models are tested, but rather as themselves designed and shaped in interaction with the modeling process. Both the mediating role of models and the model dependence, as well as theory dependence, of measurement come to the fore. And, conversely, the sequence of changing experimental setups in a scientific inquiry will, in a number of case studies, be seen to make a constructive contribution to the process of modeling and simulating. What will be especially evident and significant is the new conception of the role of data not as a given, but as what needs to be identified as what is to be accounted for. This involves normative and conceptual innovation: the phenomena to be investigated and modeled are not precisely identified beforehand but specified in the course of such an interaction between experimental and modeling activity.

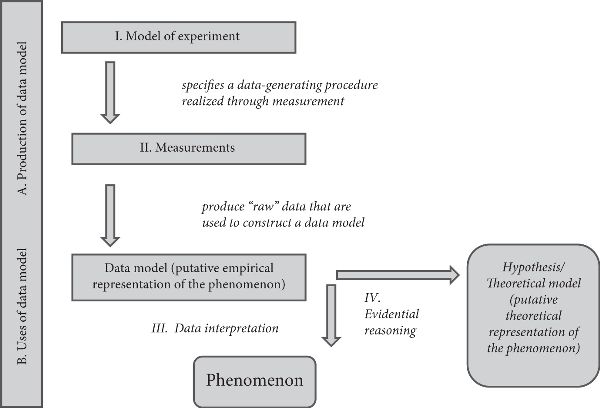

It is natural to begin this collection with Ronald Giere’s “Models of Experiments” because it presents the most general, overall schema for the description of scientific modeling and experimenting: a model of modeling, one might say. At the same time, precisely because of its clarity and scope, it leads into a number of controversies taken on by the other participants.

Ron Giere’s construal of the process of constructing and evaluating models centers on the comparison between models of the data and fully specified representational models. Fully specified representational models are depicted as resulting from other models. They are obtained from more general representational models by giving values to all the variables that appear in those models. This part of Giere’s model of the modeling process (briefly, his meta-model) represents the components of a theoretical activity, but it also comprises the insertion of empirical information, the information required to give values to the variables that characterize a specific experimental situation.

Giere’s account gives a rich representation of the different elements that are involved in the activities of producing, on the one hand, theoretical models and, on the other hand, data models. It also makes clear that these activities are neither purely theoretical nor purely empirical.

In Giere’s meta-model, data models are obtained from models of experiments. It should be noted, however, that they are not obtained from models of experiments in the same sense as fully specified representational models are obtained from representational models. Strictly speaking, one should say that data models are obtained from a transformation of what Giere calls “recorded data,” data resulting from measuring activity, which itself results from running an experiment. And these operations also appear in his meta-model. But a model of the experiment is a representation of the experimental setup that produces the data from which the data model is constructed. So data models are obtained from models of experiments in the sense that the model of the experiment presents a source of constraint on what kind of data can be obtained, and thereby a constraint on the data model. It is this constraint that, in Giere’s model of the modeling process, the arrow from the model of the experiment to the data model represents.

Another way to read the relation represented on Giere’s schema between data model and model of the experiment is to see it as indicating that understanding a data model requires understanding the process that produced it, and thereby understanding the model of the experiment.

In the same way as the activity involved in producing theoretical models for comparison with data models is not exclusively theoretical, because it requires the use of empirical information, the activity involved in producing data models is not exclusively empirical. According to Giere, a model of the experiment is a map of the experimental setup that has to include three categories of components: material (representation of the instruments), computational (computational network involved in producing models of the data from instruments’ input), and agential (operations performed by human agents). Running the experiment, from the use of instruments to the transformation of data resulting in the data model, requires a certain understanding of the functioning of the instruments and of the computational analysis of the data which will be, to a variable extent, theoretical.

Because of the generality and large scope of Giere’s meta-model, it leaves open many topics that are taken up later on in the collection. One might find it surprising that the notion of phenomenon does not appear here and wonder where it could be placed. One possibility would be to locate it within “the world.” But in Giere’s meta-model, the world appears as something that is given, neither produced nor constrained by any other component of the modeling process. As we will see in Joseph Rouse’s essay, to merely locate phenomena within the world “as given” betrays the normative dimension that is involved in recognizing something as a phenomenon, which is very different from recognizing something as simply part of the world. What one might find missing also in Giere’s model is the dynamical, interactive relation between the activities that produce theoretical and data models, which is emphasized in Anthony Chemero’s contribution, and again in Michael Weisberg’s. In Giere’s schema, these activities are only related through their product when they are compared. Joe Rouse’s contribution calls that into question as well in his discussion of the role of phenomena in conceptual understanding.

Thus, we see how Giere’s presentation opens the door to a wide range of issues and controversies. In Giere’s schema, the model of the experiment, just like the world, and like phenomena if they are mere components of the world, appears as given. We do not mean, of course, that Giere is oblivious to how the model of the experiment comes about; it is not created ex nihilo. But in this schema it is left aside as yet how it is produced or constrained by other components of the modeling process. It is given, and it is descriptive: a description of the experimental setup. But scientists may be wrong in their judgment that something is a phenomenon. And they may be wrong, or on the contrary show remarkable insight, in their conception of what needs and what does not need to be measured to further our understanding of a certain object of investigation. Arguably, just like for phenomena, and to allow for the possibility of error, the conception of the model of the experiment needs to integrate a normative dimension. In that view, when one offers a model of the experiment, one is not simply offering a description but also making a judgment about what needs to be, what should be, measured. As we will see, the addition of this normative dimension seems to be what most clearly separates Chemero’s from Giere’s approach to the model of the experiment.

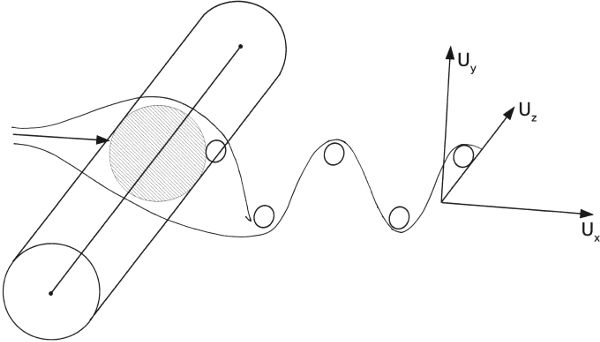

In Anthony Chemero’s “Dynamics, Data, and Noise in the Cognitive Sciences,” a model of the experiment is of a more general type than it is for Giere. In Chemero’s sense it is not a specific experimental arrangement but rather a kind of experimental arrangement, defined in terms of what quantities it is able to measure, without specifying the details of what instruments to use or how.

Chemero comes to issues in the philosophy of modeling and its experimental components from the perspective of dynamical modeling in cognitive science. This clear and lucid discussion of dynamical modeling combines with an insightful philosophical reflection on some of Chemero’s own experimental work. The essay makes two main contributions of particular relevance to our overall topic, both at odds with traditional views on the experimental activity associated with model testing. The first one is to provide a clear counterexample to what Chemero calls “the methodological truism”: “The goal of a good experiment is to maximize primary variance, eliminate secondary variance, and minimize error variance.”

The primary variance is the evolution of the variable of interest, or systematic variance of the dependent variable, under the effect of some other, independent variables that are under control. The secondary variance is the systematic variance of the dependent variable resulting from the effect of variables other than the independent variables. The error variance is what is typically referred to as “noise”: unsystematic variance of the dependent variable. The methodological truism asserts, in effect, that noise is not informative and is only polluting the “real” signal, the real evolution of the variable of interest. So it should be minimized and what remains will be washed out by using some measure of the central tendency of the collection of measurements of the dependent variable, such as the mean.
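As a rough illustration of the three kinds of variance just distinguished, the following Python sketch simulates a balanced experiment and splits the total sum of squares into primary, secondary, and error parts. Everything in it is an assumption made for illustration and is not taken from Chemero's essay: the linear effect sizes, the two-level nuisance variable `z`, the Gaussian noise, and the function names `run_experiment` and `decompose_variance`.

```python
import random
from statistics import fmean


def run_experiment(seed=0, reps=50):
    """Simulate a balanced, fully crossed design (all numbers are hypothetical)."""
    rng = random.Random(seed)
    rows = []
    for x in (0.0, 1.0, 2.0):      # controlled independent variable
        for z in (0, 1):           # uncontrolled nuisance variable, e.g. session
            for _ in range(reps):
                # dependent variable: systematic effects plus unsystematic noise
                y = 2.0 * x + 0.5 * z + rng.gauss(0.0, 1.0)
                rows.append((x, z, y))
    return rows


def decompose_variance(rows):
    """Split the total sum of squares of y into primary, secondary, and error parts."""
    grand = fmean(y for _, _, y in rows)

    def level_means(index):
        groups = {}
        for row in rows:
            groups.setdefault(row[index], []).append(row[2])
        return {level: fmean(ys) for level, ys in groups.items()}

    mean_by_x = level_means(0)
    mean_by_z = level_means(1)
    primary = sum((mean_by_x[x] - grand) ** 2 for x, _, _ in rows)
    secondary = sum((mean_by_z[z] - grand) ** 2 for _, z, _ in rows)
    error = sum((y - mean_by_x[x] - mean_by_z[z] + grand) ** 2 for x, z, y in rows)
    total = sum((y - grand) ** 2 for _, _, y in rows)
    return {"primary": primary, "secondary": secondary, "error": error, "total": total}


if __name__ == "__main__":
    for name, value in decompose_variance(run_experiment()).items():
        print(name, round(value, 2))
```

In a balanced design of this kind the three parts add up to the total, which is what allows the truism to treat the error part as a separable residue to be averaged away.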

As counterexample to the methodological truism, Chemero presents a case study where the noise is carrying information about the very phenomenon under investigation.

Chemero’s second aim is to illustrate a normative notion of the model of the experiment and, at the same time, to show that to construct a good experimental setup is not just a matter of technical skills but also a matter of determining what quantities to measure to obtain empirical evidence for a target phenomenon. Along the way it is also shown that dynamical models in cognitive science are not simply descriptions of phenomena but fully deserve to be considered as explanations as well.

The case study that Chemero analyzes uses a Haken-Kelso-Bunz model of coordination dynamics. This model has been famously used to model the dynamics of finger wagging, predicting, for instance, that beyond a certain rate only one of two possible behaviors is stable, namely in-phase movement. But it has also been applied to many other topics of study in cognitive science, from neural coordination dynamics to speech production to interpersonal coordination. Chemero discusses the use of this model to investigate the transition described by Heidegger between readiness-to-hand and unreadiness-to-hand: “The dynamical model was used to put Heidegger’s claims about phenomenology into touch with potentially gatherable data.”
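The finger-wagging prediction mentioned above can be sketched numerically. The equation used here is the commonly cited textbook form of the Haken-Kelso-Bunz model for the relative phase, dφ/dt = −a sin φ − 2b sin 2φ; it does not appear in this introduction and is supplied as an assumption, as are the parameter values, the Euler integration, and the function names. In that form the ratio b/a is usually taken to fall as movement rate rises, and anti-phase movement (φ = π) stops being stable once b/a drops below 1/4.

```python
import math


def hkb_rate(phi, a, b):
    """Rate of change of the relative phase phi in the assumed HKB equation."""
    return -a * math.sin(phi) - 2.0 * b * math.sin(2.0 * phi)


def settle(phi0, a, b, dt=0.01, steps=20000):
    """Integrate with simple Euler steps and return the final relative phase."""
    phi = phi0
    for _ in range(steps):
        phi += dt * hkb_rate(phi, a, b)
    return phi


# Start close to anti-phase (phi = pi) in both cases.
start = math.pi - 0.3

# Slow movement (hypothetical values with b/a > 1/4): anti-phase is stable,
# so the system stays near pi.
slow = settle(start, a=1.0, b=1.0)

# Fast movement (hypothetical values with b/a < 1/4): anti-phase is unstable,
# so the system falls into in-phase movement near 0.
fast = settle(start, a=1.0, b=0.1)

if __name__ == "__main__":
    print("slow:", slow)
    print("fast:", fast)
```

The two runs differ only in the ratio b/a, which is the sense in which the model predicts a loss of one stable behavior beyond a certain rate.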

Given the project to test Heidegger’s idea of a transition between two forms of interactions with tools—ready-to-hand and unready-to-hand—the challenge was to determine what sort of quantities could serve as evidence for this transition. To determine what the experimental setup needs to be is to offer a model of the experiment. To offer such a model is not just to give a description of experimental arrangements, it is to make a claim about what sort of quantities can serve as evidence for the phenomenon of interest and thus need to be measured. It is a normative claim; and as Rouse’s essay will make clear, it is defeasible. Scientists may come to revise their view about what sorts of quantities characterize a phenomenon—and that is especially so in cognitive science.

The experimenters arrive at a model of the experiment by first interpreting the notion of ready-to-hand in terms of an interaction-dominant system: to be in a ready-to-hand form of interaction with a tool is to form an interaction-dominant system with the tool. This means a kind of system where “the interactions are more powerful than the intrinsic dynamics of the components” and where the components of the system cannot be treated in isolation. An interaction-dominant system exhibits a special variety of fluctuation called 1/f noise or pink noise (“a kind of not-quite-random, correlated noise”), and the experimenters take it that evidence for interaction dominance will be evidence for the ready-to-hand form of interaction.
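To make the notion of 1/f noise concrete, here is a minimal Python sketch that builds a signal whose power at each frequency is proportional to 1/f and then recovers the exponent from the slope of its log-log power spectrum. It is not the analysis used in the study Chemero describes, which this introduction does not detail. The spectral synthesis method, the signal length, and the names `synthesize`, `power_spectrum`, and `spectral_slope` are all assumptions chosen for illustration.

```python
import cmath
import math
import random


def synthesize(n, exponent, seed=0):
    """Real signal of length n whose power at frequency k scales as 1 / k**exponent."""
    rng = random.Random(seed)
    half = n // 2
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(half)]
    signal = []
    for t in range(n):
        value = 0.0
        for k in range(1, half):
            amplitude = k ** (-exponent / 2.0)
            value += amplitude * math.cos(2.0 * math.pi * k * t / n + phases[k])
        signal.append(value)
    return signal


def power_spectrum(signal):
    """Power at each positive frequency below Nyquist, via a direct DFT."""
    n = len(signal)
    powers = []
    for k in range(1, n // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        powers.append((k, abs(coeff) ** 2))
    return powers


def spectral_slope(powers):
    """Least-squares slope of log(power) against log(frequency)."""
    xs = [math.log(k) for k, _ in powers]
    ys = [math.log(p) for _, p in powers]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return covariance / variance


if __name__ == "__main__":
    pink = spectral_slope(power_spectrum(synthesize(128, exponent=1.0)))
    white = spectral_slope(power_spectrum(synthesize(128, exponent=0.0)))
    print("pink slope:", pink)    # close to -1
    print("white slope:", white)  # close to 0
```

A slope near −1 marks pink noise, while a slope near 0 marks uncorrelated white noise. Measured data would be stochastic and the estimated slope only approximate, so a fit of this kind is at best a first diagnostic.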

That is how the methodological truism is contradicted: in that case, noise is not something that is simply altering the informative part of the signal, not something that should be minimized and be compensated for. Instead, it is a part of the signal that is carrying information, information about the same thing that the systematic variation of the primary variable carries information about. As we will see below, Knuuttila and Loettgers make a similar point in their essay in the context of experimental modeling of biological mechanisms.

Interpretation and representation are, it is said, two sides of one coin. St. Elmo’s fire may be represented theoretically as a continuous electric discharge; observations of St. Elmo’s fire provide data that may be interpreted as signs of a continuous electric discharge. To see St. Elmo’s fire as a phenomenon to be modeled as plasma—ionized air—rather than as fire is to see it through the eyes of theory; it is possible only if, in the words of Francis Bacon, “experience has become literate.” But the relation between theory and world is not unidirectional; the phenomena, Joseph Rouse argues in his essay, contribute to conceptual understanding.

Rouse’s contribution addresses the question of what mediates between model and world by focusing on the issue of conceptual understanding. The link between model and world is clarified as the argument unfolds to show that the phenomena play this mediating role. This may be puzzling at first blush: how can the phenomena play a role in conceptual understanding, in the development and articulation of concepts involved in explaining the world with the help of models? According to Rouse both what conceptual articulation consists in, and the role that phenomena play in this articulation, have been misunderstood.

Traditionally viewed, conceptual articulation is a theoretical activity. About the only role that phenomena play in this activity is as that which this activity aims to describe. Phenomena are seen as what theories are about and what data provide evidence for (Bogen and Woodward 1988). When models and world are put in relation with one another, the concepts are already there to be applied and phenomena are already there to be explained. As Rouse says, “[the relation between theory and the world] comes into philosophical purview only after the world has already been conceptualized.” And the aim of experimental activity, from this perspective, is to help determine whether concepts actually apply to the world. Issues related to experimental activity are seen as mainly technical, as a matter of creating the causal conditions prescribed by the concepts and models that we are trying to apply.

Rouse’s move away from this view on conceptual articulation as mainly theoretical, on phenomena as always already conceptualized, and on experimental activity as a technical challenge starts with giving to phenomena the role of mediator between models and world. Phenomena are not some more or less hidden parts of the world that will hopefully, with enough luck and technical skill, be discovered. Instead, they contribute to the conceptual development and articulation through which we make the world’s complexity intelligible.

How do phenomena play this mediating role? First of all, phenomena could not be mediators if they were merely objects of description (or prediction or explanation): “Phenomena show something important about the world, rather than our merely finding something there.” Phenomena’s mediating role starts with and is grounded in the recognition of their significance. The recognition of their significance is not a descriptive, empirical judgment. It is a normative judgment. It is as normative as saying that a person is good, and just as open to revision. The question of whether they are discovered or constructed is pointless. It is like asking whether the meter unit is discovered or constructed. What matters is the normative judgment that makes the one-meter length into a unit. Similarly, with phenomena what matters is the judgment of their significance, that they show something important about the world.

To say that something is a phenomenon is to make a bet: what we are betting is what is at stake in making this normative judgment. We are betting that it will enable us to see new things that we were not able to see before: new patterns, new regularities. That is the important thing about the world that the phenomenon is taken to show: the possibility of ordering the world in terms similar to it, and of using these terms to create intelligibility and order and to make new judgments of similarity and difference, in the same way as we do with the meter unit.

Rouse makes clear that normativity does not imply self-vindication. On the contrary, we may have to recognize that what we took for a phenomenon does not actually show anything important about the world. That is why there is something at stake in judging that something is a phenomenon. There would be nothing at stake if the judgment were not revisable.

So how do phenomena contribute to conceptual articulation? One tempting mistake at this point would be to think that they do so by providing the conditions in which concepts are applied. We cannot reduce a concept to what is happening under some specific conditions. Phenomena may serve as an anchor for concepts, an epicenter, but they point beyond themselves at the larger world, and that is where the domain of application of concepts lies. Once a pattern has been recognized as significant (“outer recognition”), the issue is “how to go on rightly”—that is, how to correctly identify other cases as fitting this same pattern, as belonging to the domain of application of the same concept (“inner recognition”).

Rouse refuses to reduce the domain of application of concepts to the conditions in which phenomena are obtained, but these conditions are important to his story. To understand how phenomena can play a role without becoming a trap, a black hole for concepts that would exhaust their content, we need to introduce the notion of an experimental system. Perhaps the most important aspect of experimental systems is that they belong to a historical and dynamic network of “systematically interconnected experimental capacities.” What matters, in the story of how phenomena contribute to conceptual articulation, “is not a static experimental setting, but its ongoing differential reproduction, as new, potentially destabilizing elements are introduced into a relatively well-understood system.” It is the development of this experimental network that shows how concepts can be applied further and how conceptual domains can be articulated.

Crucially then, in Rouse’s account, in the same way as there is no way to tell in advance how the experimental network will extend, there is no way to tell in advance how concepts will apply elsewhere, how they will extend further. But what is certain is that the concepts that we have make us answerable in their terms to what will happen in the course of the exploration of new experimental domains: “Concepts commit us to more than we know how to say or do.” In some cases, as experimental domains extend, the difficulties may be regarded as conceptually inconsequential, but in other cases they may require a new understanding of the concepts we had, to “conceptually re-organize a whole region of inquiry.”

Tarja Knuuttila and Andrea Loettgers present an exciting and empirically informed discussion of a new form of mediation between models and experiments. Their subject is the use of a new kind of model, synthetic models, which are systems built from genetic material and implemented in a natural cell environment.

The authors offer an in-depth analysis of the research conducted with two synthetic models, the Repressilator and a dual feedback oscillator. In both cases the use of these models led to new insights that could not have been obtained with just mathematical models and traditional experiments on model organisms. The new insights obtained are distinct contributions of intrinsic and extrinsic noise in cell processes in the former case and of an unanticipated robustness of oscillatory regime in the latter.

Elsewhere, in discussing the nature of synthetic models, Knuuttila and Loettgers contrast their functioning and use with that of another genetically engineered system, genetically modified Escherichia coli bacteria (Knuuttila and Loettgers 2014). As they explain, this system was used as a measuring system by virtue of its noise-sensing ability. By contrast, the synthetic models are synthetic systems capable of producing a behavior.

After a review of the debate on the similarities and dissimilarities between models and experiments, and using their case study in support, the authors argue that the epistemic function that synthetic models are able to play is due to characteristics they share both with models and with experiments. Thus, the discussion by Knuuttila and Loettgers of synthetic systems sheds a new light on the debate concerning the similarities and dissimilarities between models and experiments. They acknowledge some similarities but highlight certain differences, in particular the limited number of the theoretical model’s components and the specific materiality of the experimental system, on a par with the system under investigation.

What makes the synthetic systems so peculiar is that some of the characteristics they share with models and with experimental systems are those that make models and experiments epistemically dissimilar. What such systems share with models is that by contrast with natural systems they are constructed on the basis of a model—a mathematical model—and their structure only comprises a limited number of interactive components. What they share with experiments is that when placed in natural conditions they are able to produce unanticipated behavior: they start having “a life of their own.” And it is precisely by virtue of this life of their own that they could lead to new insights when their behavior is not as expected.

It may be surprising to think that the construction of a system in accordance with a theoretical model could be seen as something new. After all, experimental systems that are constructed to test a model are constructed according to the model in question. (That is what it is, in Nancy Cartwright’s suggestive phrase, to be a nomological machine.) But the object of investigation and source of insight in the experiments described by Knuuttila and Loettgers are not the behavior of the synthetic model per se but rather its behavior in natural conditions. Such a synthetic system is not a material model, constructed to be an object of study and a source of claims about some other system, of which it is supposed to be a model. The point of the synthetic system is to be placed in natural conditions, and the source of surprise and insight is the way it behaves in such conditions. What these systems then make possible is to see how natural conditions affect the predicted behavior of the model. That is possible because, by contrast with the mathematical model used as a basis to produce it, the synthetic model is made of the same sort of material as appears in those natural conditions. Synthetic models are also different from the better known “model organisms.” The difference is this: whereas the latter have the complexity and opacity of natural systems, the former have the simplicity of an engineered system. Like models and unlike organisms, they are made up of a limited number of components. And this feature, also, as the authors make clear, is instrumental to their innovative epistemic function.

The philosophical fortunes of the concept of causation in the twentieth century could be the stuff of a gripping novel. Russell’s dismissal of the notion of cause in his “Mysticism and Logic” early in the twentieth century was not prophetic of how the century would end. Of the many upheavals in the story, two are especially relevant to the essays here. One was the turn from evidential decision theory to causal decision theory, in which Nancy Cartwright’s “Causal Laws and Effective Strategies” (1979) was a seminal contribution. The other was the interventionist conception of causal models in the work of Glymour, Pearl, and Woodward that, arguably, had become the received view by the year 2000. In the essays by Cartwright and by Jenann Ismael, we will see the implications of this story for both fundamental philosophical categories and practical applications.

Nancy Cartwright addresses the question of what would be good evidence that a given policy will be successful in a given situation. Will the results of implementing the policy in a certain situation serve as a basis for anticipating the results of its implementation in a new situation? Her essay discusses and compares the abilities of experiments and models to provide reliable evidence. Note well that she is targeting a specific kind of experiment, the randomized controlled trial (RCT), and a specific kind of model: “purpose-built single-case causal models.”

For the results of the implementation of a policy to count as good evidence for the policy’s efficacy, the implementation needs to meet the experimental standards of a well-designed and well-implemented RCT. But even when that is the case, the evidence does not transfer well, according to Cartwright, to a new situation.

A well-designed and well-implemented RCT can, in the best cases, clinch causal conclusions about the effect of the treatment on the population that received the treatment, the study population. That is, if the experiment comprises two populations that can be considered identical in all respects except for receiving versus not receiving the treatment, and if a proper measurement shows an effect in the study population, then the effect can be reliably attributed to the causal effect of the treatment. The question is whether these conclusions, or conclusions about the implementation of a policy in a given situation on a given population, can travel to a new target population/situation.

According to Cartwright, what the results of an RCT can show is that in the specific conditions of the implementation the implementation makes a difference. But they say nothing about the difference it might make in a new situation. For, supposing the implementation was successful, there must be several factors that are contributing, directly or indirectly, in addition to the policy itself, to the success of that implementation. Cartwright calls these factors support factors. And some of these factors may not be present in the new situation or other factors may be present that will hamper the production of the outcome.

One might think that the randomization that governs the selection of the populations in the RCT provides a reliable basis for the generalization of results of the experiment. But all it does, Cartwright says, is ensure that there is no feature that could by itself causally account for the difference in results between the populations. That does not preclude that some features shared by the two populations function as support factors in the study population and make the success of the treatment possible. All that success requires is that some members of the population exhibit these features in such a way as to produce a higher average effect in the study population. Given that we do not know who these members are, we are unable to identify the support factors that made the treatment successful in these individuals.
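The point about support factors can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not Cartwright's own model: the single binary support factor, the outcome numbers, the 50/50 random assignment, and the function name `run_rct` are all hypothetical. The treatment raises the outcome only for individuals who have the support factor, so the same well-run trial yields a clear average effect in one population and none in another.

```python
import random
from statistics import fmean


def run_rct(support_prevalence, n=1000, effect=10.0, baseline=50.0, seed=0):
    """Difference in mean outcome between the treated and control arms.

    The treatment adds `effect` only for individuals who have the
    (hypothetical) support factor; everyone else stays at `baseline`.
    """
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        has_support = rng.random() < support_prevalence
        gets_treatment = rng.random() < 0.5  # random assignment to an arm
        outcome = baseline
        if gets_treatment and has_support:
            outcome += effect
        (treated if gets_treatment else control).append(outcome)
    return fmean(treated) - fmean(control)


if __name__ == "__main__":
    # Study population: the support factor is common.
    print("study population:", run_rct(support_prevalence=0.6))
    # Target population: the support factor is absent.
    print("target population:", run_rct(support_prevalence=0.0))
```

The trial reports only the average difference between the arms. Nothing in that number reveals which individuals carried the support factor, or that the effect depended on it at all.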

Cartwright contrasts RCT experiments with what she calls purpose-built single-case causal models. A purpose-built single-case causal model will, at minimum, show what features/factors are necessary for the success of the implementation: the support factors. The most informative, though, will be a model that shows the contribution of the different factors necessary for the success of the implementation of the policy in a given situation/population, which may not be present in the new situation.

It might seem that the purpose-built single-case causal model is, in fact, very general because it draws on the general knowledge we have about different factors. The example that Cartwright specifically discusses is the implementation of a policy aiming to improve learning outcomes by decreasing the size of classes. The implementation was a failure in California whereas it had been successful in Tennessee. The reduction of class size produces a need for a greater number of classrooms and teachers; in California, that meant hiring less qualified teachers and using rooms for classes that had previously been used for learning activities. A purpose-built single-case causal model, says Cartwright, could have been used to study, say, the relationship between quality of teaching and quality of learning outcome. The model would draw on general knowledge about the relationship between quality of teaching and quality of learning outcome, but it would be a single case in that it would only represent the support factors that may or may not be present in the target situation. That maintaining teaching quality while sufficiently increasing the number of teachers would be problematic in California is the sort of fact, she says, that we hope the modeler will dig out to produce the model we must rely on for predicting the outcomes of our proposed policy.

But how, one might wonder, do we evaluate how bad it really is to lower the number of learning activities? How do we determine that the quality of the teaching will decrease to such an extent that it will counteract the benefit of class reduction? How do we measure teaching quality in the first place?

According to Cartwright, we do not “have to know the correct functional form of the relation between teacher quality, classroom quality, class size, and educational outcomes to suggest correctly . . . that outcomes depend on a function that . . . increases as teacher and room quality increase and decreases as class size increases.” Still, it seems important, and impossible in the abstract, to evaluate the extent to which the positive effect expected from class reduction will be hampered by the negative effect expected from a decrease in teacher quality, room quality, or the number of learning activities.

Cartwright recognizes that an experiment investigating the effect of the policy on a representative sample of the policy target would be able to tell us what we want to know. But it is difficult to produce, in general, a sample that is representative of a population. And, furthermore, as the California example makes clear, the scale at which the policy is implemented may have a significant effect on the result of the implementation.

Without testing the implementation of the policy itself, can we not think of other, less ambitious experiments that would still be informative? Would it be helpful, for instance, to have some quantitative indication of the difference that learning activities or lack of experience of teachers, alone, makes on learning outcomes? If we can hope to have scientists good enough to produce models that take into account just the right factors, could we not hope to have scientists good enough to conceive of experiments to investigate these factors so as to get clearer on their contribution?

Cartwright takes it that “responsible policy making requires us to estimate as best we can what is in this model, within the bounds of our capabilities and constraints.” But aren’t experiments generally precisely the way in which we try to estimate the contribution of the factors that we expect to have an effect on the evolution of the dependent variable that we are interested in?

Cartwright’s discussion gives good reasons to think that the results of an experiment that is not guided by a modeling process might be of little use beyond showing what is happening in the specific conditions in which it is realized. It is not clear, however, that models without experiment can give us the quantitative information that is required for them to serve as a guide for action and decision.

She notes that “the experiment can provide significant suggestions about what policies to consider, but it is the model that tells us whether the policy will produce the outcome for us.” Experiments may not be able to say, just by themselves, what the support factors are or whether they are present in the target situation or population. But it seems that, once we have an idea of what the support factors are, experiments may help a good deal in getting clearer on how these factors contribute to the production of the effect. And they may even be of help in finding some factors that had not been considered but that do, after all, have an effect.

Jenann Ismael insists that philosophers’ focus on the products of science rather than its practice has distorted specifically the discussion of laws and causality. She begins with a reflection on Bertrand Russell’s skeptical 1914 paper on the notion of cause, which predisposed a whole generation of empiricists against that notion, but Ismael sees the rebirth of the concept of causal modeling as starting with Nancy Cartwright’s 1979 argument that causal knowledge is indispensable in the design of effective strategies.

Russell had argued that the notion of cause plays no role in modern natural science, that it should be abandoned in philosophy and replaced by the concept of a global dynamical law. His guiding example was Newton’s physics, which provided dynamical laws expressed in the form of differential equations that could be used to compute the state of the world at one time as a function of its state at another. Russell’s message that the fundamental nomic generalizations of physics are global laws of temporal evolution is characterized by Ismael as a prevailing mistaken conviction in the philosophy of science during much of the twentieth century.

But, as she recounts, the concept of cause appears to be perfectly respectable in the philosophy of science today. While one might point to the overlap between the new focus on modality in metaphysics, such as in the work of David Lewis, and the attention to this by such philosophers of science as Jeremy Butterfield and Christopher Hitchcock, Ismael sees the turning point in Nancy Cartwright’s placing causal factors at the heart of practical decision making. Cartwright’s argument pertained most immediately to the controversy between causal and evidential decision theory at that time. But it also marks the beginning of the development of a formal framework for representing causal relations in science, completed in the “interventionist” account of causal modeling due to Clark Glymour, Judea Pearl (whom Ismael takes as her main example), and James Woodward.

Does causal information outrun the information generally contained in global dynamical laws? To argue that it does, Ismael focuses on how a complex system is represented as a modular collection of stable and autonomous components, the “mechanisms.” The behavior of each of these is represented as a function, and interventions are local modifications of these functions. The dynamical law for the whole can be recovered by assembling these in a configuration that imposes constraints on their relative variation so as to display how interventions on the input to one mechanism propagate throughout the system. But the same evolution at the global level could be realized through alternative ways of assembling mechanisms, hence it is not in general possible to recover the causal information from the global dynamics.
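The point that the same global behavior can be realized by different assemblies of mechanisms can be illustrated with a toy sketch. Everything in it is a hypothetical illustration introduced here, not an example taken from Ismael or Pearl: the variables x, y, z, the functions `run_chain` and `run_fork`, and the `do_y` parameter that stands in for a local intervention on y.

```python
def run_chain(x, do_y=None):
    """Assembly A (hypothetical): x drives y, and y in turn drives z."""
    y = x + 1 if do_y is None else do_y  # an intervention overrides y's mechanism
    z = 2 * y                            # z listens to y
    return x, y, z


def run_fork(x, do_y=None):
    """Assembly B (hypothetical): x drives y and z separately."""
    y = x + 1 if do_y is None else do_y  # same mechanism for y as in assembly A
    z = 2 * (x + 1)                      # z listens to x directly, not to y
    return x, y, z


# Without intervention the two assemblies trace out the same evolution.
same_global_behavior = all(run_chain(x) == run_fork(x) for x in range(5))

# Under a local intervention on y they come apart.
chain_after_intervention = run_chain(0, do_y=10)
fork_after_intervention = run_fork(0, do_y=10)

print(same_global_behavior)
print(chain_after_intervention, fork_after_intervention)
```

In this sketch the undisturbed input-output behavior does not settle which assembly is in place; only the response to intervention does, which is the sense in which the causal information outruns the global dynamics.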

Causality and mechanism are modal notions, as much as the concept of global laws. The causal realism Ismael argues for involves an insistence on the priority of mechanism over law: global laws retain their importance but are the emergent product of causal mechanisms at work in nature.

Eventually, after all the scientific strife and controversy, we expect to see strong evidence brought to us by science, to shape practical decisions as well as worldviews. But just what is evidence, how is it secured against error, how is it weighed, and how deeply is it indebted for its status to what is accepted as theory? In the process that involves data-generating procedures, theoretical reasoning, model construction, and experimentation, finally leading to claims of evidence, what are the normative constraints? The role of norms and values in experimentation and modeling, in assessment and validation, has recently taken its place among the liveliest and most controversial topics in our field.

Deborah Mayo enters the fray concerning evidential reasoning in the sciences with a study of the methodology salient in the recent “discovery” of the Higgs boson. Her theme and topic are well expressed in one of her subheadings: “Detaching the Inferences from the Evidence.” For as we emphasized earlier, the data are not a given; what counts as evidence and how it is to be understood are what is at issue.

When the results of the Higgs boson detection were made public, the findings were presented in the terminology of orthodox (“frequentist”) statistics, and there were immediate critical reactions by Bayesian statisticians. Mayo investigates this dispute over scientific methodology, continuing her long-standing defense of the former (she prefers the term “error statistical”) method by a careful inquiry into how the Higgs boson results were (or should be) formulated. Mayo sees “the main function of statistical method as controlling the relative frequency of erroneous inferences in the long run.” There are different types of error, which are all possible ways of being mistaken in the interpretation of the data and the understanding of the phenomenon on the basis of the data, such as mistakes about what factor is responsible for the effect that is observed. According to Mayo, to understand how to probe the different components of the experiment for errors—which includes the use of instruments, their manipulation and reasoning, and how to control for errors or make up for them—is part of what it is to understand the phenomenon under investigation.

Central to Mayo’s approach is the notion of severity: a test of a hypothesis H is severe if not only does it produce results that agree with H if H is correct, but it also very likely produces results that do not agree with H if H is not correct. Severe testing is the basis of what Mayo calls “argument from error,” the argument that justifies the experimenter’s trust in his or her interpretation of the data. To argue from error is to argue that a misinterpretation of the data would have been revealed by the experimental procedure.

In the experiments pertaining to the Higgs boson, a statistical model is presented of the detector, within which researchers define a “global signal strength” parameter μ, such that μ = 0 corresponds to the detection of the background only (hypothesis H0), and μ = 1 corresponds to the Standard Model Higgs boson signal in addition to the background (hypothesis H). The statistical test records differences in the positive direction, in standard deviation or sigma units. The improbability of an excess as large as 5 sigma alludes to the sampling distribution associated with such signal-like results or “bumps,” fortified with much cross-checking. In particular, the probability of observing a result as extreme as 5 sigma, under the assumption it was generated by background alone—that is, assuming that H0 is correct—is approximately 1 in 3,500,000.
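The figure of roughly 1 in 3,500,000 can be recovered with a short calculation. The sketch below assumes, for illustration only, that the test statistic is standard normal under H0 and that only excesses in the positive direction count (a one-sided tail); the function name `one_sided_tail` is ours, and the actual analyses rest on a far more elaborate detector model.

```python
import math


def one_sided_tail(n_sigma: float) -> float:
    """Probability that a standard normal variable exceeds n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


p_value = one_sided_tail(5.0)

# Express the tail probability as "1 in N".
one_in = 1.0 / p_value
print(f"p = {p_value:.3e}, about 1 in {one_in:,.0f}")
```

Note what the number is a probability of: an excess at least this large, computed on the supposition that background alone is generating the data. It is not a probability assigned to H0.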

Can this be summarized as “the probability that the results were just a statistical fluke is 1 in 3,500,000”? It might be objected that this fallaciously applies the probability to H0 itself—a posterior probability of H0. Mayo argues that this is not so.

The conceptual distinctions to be observed are indeed subtle. H0 does not say the observed results are due to background alone, although if H0 were true (about what is generating the data), it follows that various results would occur with specified probabilities. The probability is assigned to the observation of such large or larger bumps (at both sites) on the supposition that they are due to background alone. These computations are based on simulating what it would be like under H0 (given a detector model). Now the inference actually detached from the evidence is something like “There is strong evidence for H.” This inference does indeed rely on an implicit principle of evidence: in fact, on a variant of the severe or stringent testing requirement for evidence. There are cases, regrettably, that do commit the fallacy of “transposing the conditional” from a low significance level to a low posterior probability of the null. But in the proper methodology, what is going on is precisely as in the case of the Higgs boson detection. The difference is as subtle as it is important, and it is crucial to the understanding of experimental practice.

Eric Winsberg’s “Values and Evidence in Model-Based Climate Forecasting” is similarly concerned with the relation between evidence and inference, focusing on the role of values in science through a cogent discussion of the problem of uncertainty quantification (UQ) in climate science, that is, the challenging task of attaching a degree of uncertainty to the predictions of climate models. Here the role of normative decisions becomes abundantly clear.

Winsberg starts from a specific argument for the ineliminable role of social or ethical values in the epistemic endeavors and achievements of science, the inductive risk argument. It goes like this: given that no scientific claim is ever certain, to accept or reject it is to take a risk and to make the value judgment that it is, socially or ethically, worth taking. No stretch of imagination is needed to show how this type of judgment is involved in controversies over whether, or what, actions should be taken on the basis of climate change predictions.

Richard Jeffrey’s answer to this argument was that whether action should be taken on the basis of scientific claims is not a scientific issue. Science can be value-free so long as scientists limit themselves to estimating the evidential probability of their claims and attach estimates of uncertainties to all scientific claims to knowledge. Taking the estimation of uncertainties of climate model predictions as an example, Winsberg shows how challenging this probabilistic program can be, not just technically but also, and even more importantly, conceptually. In climate science, the method to estimate uncertainties uses an ensemble of climate models obtained on the basis of different assumptions, approximations, or parametrizations. Scientists need to make some methodological choices—of certain modeling techniques, for example—and those choices are not, Winsberg argues, always independent of non-strictly-epistemic value judgments. Not only do the ensemble methods rely on presuppositions about relations between models that are clearly incorrect, especially the presupposition that they are all independent or equally reliable, but it is difficult to see how it could be possible to correct them. The probabilistic program cannot then, Winsberg concludes, produce a value-free science because value judgments are involved in many ways in the very work that is required to arrive at an estimation of uncertainty. The ways they are involved make it impossible to trace these value judgments or even to draw a clear line between normative and epistemic judgments. What gets in the way of drawing this line, in the case of climate models, is that not only are they complex objects, with different parts that are complex and complexly coupled, but they also have a complex history.

Climate models are a result of a series of methodological choices influencing “what options will be available for solving problems that arise at a later time” and, depending on which problems will arise, what will be the model’s epistemic successes or failures. What Joseph Rouse argues about concepts applies here to methodological choices: we do not know what they commit us to. This actual and historical complexity makes it impossible to determine beforehand the effects of the assumptions and choices involved in the construction of the models. For this very reason, the force of his claims, Winsberg says, will not be supported by a single, specific example of value judgment that would have influenced the construction or assessment of climate models. His argument is not about some value judgments happening here or there; it is about how pervasive such judgments are, and about their “entrenchment”—their being so intimately part of the modeling process that they become “mostly hidden from view . . . They are buried in the historical past under the complexity, epistemic distributiveness, and generative entrenchment of climate models.”

While Winsberg’s essay focuses on the interplay of values and criteria in the evaluation of models in climate science, Michael Weisberg proposes a general theory of model validation with a case study in ecology.

What does it mean that a model is good or that one model is better than another one? Traditional theories of confirmation are concerned with the confirmation of claims that are supposed to be true or false. But that is exactly why they are not appropriate, Michael Weisberg argues, for the evaluation of idealized models—which are not, and are not intended to be, truthful representations of their target. In their stead, Weisberg proposes a theory of model validation.

Weisberg illustrates this problem of model evaluation with two models in contemporary ecology. They are models of a beech forest in central Europe that is composed of regions with trees of various species, sizes, and ages. The resulting patchy pattern of the forest is what the models are supposed to account for. Both models are cellular automata: an array of cells that represents a patch of forest of a certain kind and a set of transition rules that, step by step, take each of the cells from one state to another. The transition rules determine at each step what each cell represents based on what the cell and the neighboring cells represented at the previous step. One of the models is more sophisticated than the other: the representation and transition rules take into account a larger number of factors that characterize the forest (e.g., the height of the trees). It is considered a better model than the other one, but it is still an idealization; and the other one, in spite of its being simpler, is nevertheless regarded as at least partially validated and also explanatory.
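For readers unfamiliar with cellular automata, the general mechanism can be sketched in a few lines. The states, the neighborhood, and the transition rule below are toy assumptions made for illustration; they are not the rules of either forest model that Weisberg discusses.

```python
# Hypothetical cell states for a toy forest patch.
GAP, YOUNG, MATURE = 0, 1, 2


def neighbours(grid, r, c):
    """States of the four adjacent cells, wrapping around at the edges."""
    rows, cols = len(grid), len(grid[0])
    offsets = ((-1, 0), (1, 0), (0, -1), (0, 1))
    return [grid[(r + dr) % rows][(c + dc) % cols] for dr, dc in offsets]


def next_state(cell, nbrs):
    """Toy transition rule: the new state depends on the cell and its neighbours."""
    if cell == GAP:
        # A gap is colonised only if a mature patch is adjacent.
        return YOUNG if MATURE in nbrs else GAP
    if cell == YOUNG:
        return MATURE
    # A mature patch surrounded by mature patches collapses into a gap.
    return GAP if nbrs.count(MATURE) == 4 else MATURE


def step(grid):
    """Apply the rule to every cell at once, using only the previous grid."""
    return [
        [next_state(grid[r][c], neighbours(grid, r, c)) for c in range(len(grid[0]))]
        for r in range(len(grid))
    ]


forest = [
    [GAP, GAP, GAP],
    [GAP, MATURE, GAP],
    [GAP, GAP, GAP],
]
after_one_step = step(forest)
for row in after_one_step:
    print(row)
```

Even a rule this crude produces a spatial pattern that changes from step to step, which is the kind of behavior the ecological models exploit to account for the patchiness of the forest.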

Weisberg’s project is to develop an account of model validation that explains the basis on which a model is evaluated and what makes one model better than another one. His account builds on Ronald Giere’s view that it is similarities between models and real systems that make it possible for scientists to use models to represent real systems. Following Giere, Weisberg proposes to understand the validation of models as confirmation of theoretical hypotheses about the similarity between models and their intended targets. Weisberg’s account also follows Giere in making the modeler’s purpose a component of the evaluation of models: different purposes may require different models of the same real system and different forms of similarities between the model and the target.

In contrast to Giere, who did not propose an objective measure of similarity, Weisberg’s account does. The account posits a set of features of the model and the target, and Weisberg defines the similarity of a model to its target as “a function of the features they share, penalized by the features they do not share”; more precisely, “it is expressed as a ratio of shared features to non-shared features.” Weisberg’s account is then able to offer a more precise understanding of “scientific purpose.” For example, if the purpose is to build a causal model then the model will need to share the features that characterize the mechanism producing the phenomenon of interest. If the purpose includes simplicity, the modeler should minimize in the model the causal features that are not in the target. By contrast, if the purpose is to build a “how-possible” model, what matters most is that the model shares the static and dynamic features of the target. The way in which the purpose influences the evaluation appears in the formula through a weighting function that assigns a weight to the features that are shared and to those that are not shared. The weight of these features expresses how much it matters to the modeler that these features are shared or not shared and determines the way in which their being shared or not shared influences the evaluation.
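A weighted feature-matching measure of this general kind can be sketched as follows. The sketch is one possible reading under stated assumptions, not Weisberg's own formula: it scores similarity as the weight of shared features divided by the total weight of all features in play, a normalization chosen here so that the score lies between 0 and 1. The feature names, the `similarity` function, and the default weight of 1.0 are all hypothetical.

```python
def similarity(model_features, target_features, weights=None):
    """Weight of shared features over total weight of shared and non-shared ones."""
    weights = weights or {}

    def total_weight(features):
        # Features without an explicit weight count as 1.0 (an assumption).
        return sum(weights.get(f, 1.0) for f in features)

    shared = total_weight(model_features & target_features)
    model_only = total_weight(model_features - target_features)
    target_only = total_weight(target_features - model_features)
    denominator = shared + model_only + target_only
    return shared / denominator if denominator else 0.0


model = {"patchy_pattern", "succession", "tree_height"}
target = {"patchy_pattern", "succession", "soil_type"}

# Every feature matters equally.
unweighted = similarity(model, target)

# A purpose for which soil type does not matter gives it zero weight.
purpose_weighted = similarity(model, target, weights={"soil_type": 0.0})

print(unweighted, purpose_weighted)
```

Changing the weights changes the verdict without changing either the model or the target, which is how a measure of this kind can make the modeler's purpose part of the evaluation.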

This account enables Weisberg to explain why it may not be possible to satisfy different purposes with a single model and why some trade-offs might be necessary. It also enables him to account for what makes, given a purpose, one model better than another one.

And it enables him to account for another really important and neglected aspect of modeling and model evaluation: its iterative aspect. As we saw, the formula includes a weighting function that ascribes weights to the different features to express their relative importance. The purpose of the modeler indirectly plays a role in determining the weighting function in that it determines what the model needs to do. But what directly determines, given the purpose, what the weighting function has to be is some knowledge about what the model has to be like in order to do what the purpose dictates. In some cases, this knowledge will be theoretical: to do this or that the model should have such and such features and should not or does not need to have such and such other features. In some cases, however, Weisberg points out, there will be no theoretical basis to determine which features are more important. Instead, the weights may have to be iteratively adjusted on the basis of a comparison between what the model does and the empirical data that need to be accounted for, until the model does what it is intended to do. That will involve a back and forth between the development of the model and the comparison with the data.

Weisberg speaks here of an interaction between the development of the model and the collection of data. Such an interaction suggests that the development of the model has an effect on the collection of the data. It is an effect that is also discussed in other contributions (Chemero, Knuuttila and Loettgers, and Rouse). It is not clear, at first, how that would happen under Weisberg’s account because it seems to take the intended target, with its features, and the purpose for granted in determining the appropriate validation formula. But it does not need to do so. The model may suggest new ways to investigate the target, for example, by looking for some aspects of the phenomenon not yet noticed but predicted by the model. Finally, Weisberg discusses the basis on which a validated model can be deemed a reliable instrument to provide new results.

In addition to the validation of the model, what is needed, Weisberg explains, is a robustness analysis that shows that the results of the model are stable through certain perturbations. The modelers generally not only want the model to provide new results about the target it was tested against but also want these results to generalize to similar targets. Weisberg does not seem to distinguish between these expectations here. In contrast, Cartwright’s essay makes clear that to project the results of a model for one situation to a new situation may require much more than a robustness analysis.

Symposium on Measurement

Deborah Mayo rightly refers to measurement operations as data-generating procedures. But a number or text or graphics generating procedure is not necessarily generating data: what counts as data, as relevant data, or as evidence, depends on what is to be accounted for in the relevant experimental inquiry or to be represented in the relevant model. If a specific procedure is a measurement operation then its outcomes are values of a specific quantity, but whether there is a quantity that a specific procedure measures, or which quantity that is, and whether it is what is represented as that quantity in a given model, is generally itself what is at stake.

In this symposium, centering on Paul Teller’s “Measurement Accuracy Realism,” the very concept of measurement and its traditional understanding are subjected to scrutiny and debate.

Van Fraassen’s introduction displays the historical background in philosophy of science for the changing debates about measurement, beginning with Helmholtz in the nineteenth century. The theory that held center stage in the twentieth was the representational theory of measurement developed by Patrick Suppes and his coworkers. Its main difficulties pertained to the identification of the quantities measured. The rival analytic theory of measurement proposed by Domotor and Batitsky evades this problem by postulating the reality of physical quantities of concern to the empirical sciences. Both theories are characterized, according to van Fraassen, by the sophistication of their mathematical development and paucity of empirical basis. It is exactly the problem of identifying the quantities measured that is the target of Paul Teller’s main critique, which goes considerably beyond the traditional difficulties that had been posed for those two theories of measurement.

First of all, Teller challenges “traditional measurement-accuracy realism,” according to which there are in nature quantities of which concrete systems have definite values. This is a direct challenge to the analytic theory of measurement, which simply postulates that. But the identification of quantities through their pertinent measurement procedures, on which the representationalist theory relied, is subject to a devastating critique of the disparity between, on the one hand, the conception of quantities with precise values, and on the other, what measurement can deliver. The difficulties are not simply a matter of limitations in precision, or evaluation of accuracy. They also derive from the inescapability of theory involvement in measurement. Ostensibly scientific descriptions refer to concrete entities and quantities in nature, but what are the referents if those descriptions can only be understood within their theoretical context? A naïve assumption of truth of the theory that supplies or constitutes the context might make this question moot, but that is exactly the attitude Teller takes out of play. Measurement is theory-laden, one might say, but laden with false theories! Teller argues that the main problems can be seen as an artifact of vagueness, and applies Eran Tal’s robustness account of measurement accuracy to propose ways of dealing with vagueness and idealization, so as to show that the theoretical problems faced by philosophical accounts of measurement are not debilitating to scientific practice.

In his commentary, van Fraassen insists that the identification of physical quantities is not a problem to be evaded. The question raised by Teller, how to identify the referent of a quantity term, is just the question posed in “formal mode” of what it means for a putative quantity to be real. But the way in which this sort of question appears in scientific practice does not answer to the sense it is given in metaphysics. It is rather a way of raising a question of adequacy for a scientific theory which concerns the extent to which values of quantities in its models are in principle determinable by procedures that the theory itself counts as measurements. On his proposal, referents of quantity terms are items in, or aspects of, theoretical models, and the question of adequacy of those models vis à vis data models replaces the metaphysical question of whether quantity terms have real referents in nature.

In his rejoinder, Paul Teller submits that van Fraassen’s sortie to take metaphysics by the horns does not go far enough: the job needs to be completed, and that requires a pragmatist critique. As to that, Teller sees van Fraassen’s interpretation of his, Teller’s, critique of traditional measurement accuracy realism as colored by a constructive empiricist bias. Thus his rejoinder serves to do several things: to rebut the implied criticism in van Fraassen’s comments and to give a larger-scale overview of the differences between the pragmatist and empiricist approaches to understanding science. New in this exchange is Teller’s introduction of a notion of adoption of statements and theories, which has some kinship to van Fraassen’s notion of acceptance of theories but is designed to characterize epistemic or doxastic attitudes that do not involve full belief at any point.

The Historical and Methodological Context

While experimentation and modeling were studied in philosophy of science throughout the twentieth century, their delicate entanglement and mutuality have recently come increasingly into focus. The essays in this collection concentrate on the experimental side of modeling, as well as, to be sure, the modeling side of experimentation.

In order to provide adequate background to these essays, we shall first outline some of the historical development of this philosophical approach, and then present in very general terms a framework in which we understand this inquiry into scientific practice. This will be illustrated with case studies in which modeling and experimentation are saliently intertwined, to provide a touchstone for the discussions that follow.

A Brief History

Philosophical views of scientific representation through theories and models have changed radically over the past century.

Early Twentieth Century: The Structure of Scientific Theories

In the early twentieth century there was a rich and complex interplay between physicists, mathematicians, and philosophers stimulated by the revolutionary impact of quantum theory and the theory of relativity. Recent scholarship has illuminated this early development of the philosophy of science in interaction with avant-garde physics (Richardson 1997; Friedman 1999; Ryckman 2005) but also with revolutionary progress in logic and the foundations of mathematics.

After two decades of seminal work in the foundations of mathematics, including the epochal Principia Mathematica (1910–13) with Alfred North Whitehead, Bertrand Russell brought the technique of logical constructs to the analysis of physics in Our Knowledge of the External World (1914) and The Analysis of Matter (1927). Instants and spatial points, motion, and indeed the time and space of physics as well as their relations to concrete experience were subjected to re-creation by this technique. This project was continued by Rudolf Carnap in his famous Der logische Aufbau der Welt (1928) and was made continually more formal, more and more a part of the subject matter of mathematical logic and meta-mathematics. By midcentury, Carnap’s view, centered on theories conceived of as sets of sentences in a formally structured language supplemented with relations to observation and measurement, was the framework within which philosophers discussed the sciences. Whether aptly or inaptly, this view was seen as the core of the logical positivist position initially developed in the Vienna Circle. But by this time it was also seen as contestable. In a phrase clearly signaling discontent, in the opening paragraphs of his “What Theories Are Not” (Putnam 1962), Hilary Putnam called it “the Received View.”

It is perhaps not infrequent that a movement reaches its zenith after it has already been overtaken by new developments. We could see this as a case in point with Richard Montague’s “Deterministic Theories” (Montague 1957), which we can use to illustrate both the strengths and limitations of this approach. Montague stays with the received view of theories as formulated in first-order predicate languages, but his work is rich enough to include a fair amount of mathematics. The vocabulary’s basic expressions are divided, in the Carnapian way, into abstract constants (theoretical terms) and elementary constants (observational terms), with the latter presumed to have some specified connection to scientific observation procedures.

With this in hand, the language can provide us with sufficiently complete descriptions of possible trajectories (“histories”) of a system; Montague can define: “A theory T is deterministic if any two histories that realize T, and are identical at a given time, are identical at all times. Second, a physical system (or its history) is deterministic exactly if its history realizes some deterministic theory.” Although the languages considered are extensional, the discussion is clearly focused on the possible trajectories (in effect, alternative possible “worlds”) that satisfy the theory. Montague announces novel results, such as a clear disconnection between periodicity and determinism, contrary to their intimate relationship as depicted in earlier literature.

But it is instructive to note how the result is proved. First of all, by this definition, a theory that is satisfied only by a single history is deterministic—vacuously, one might say—even if that history is clearly not periodic. Second, given any infinite cardinality for the language, there will be many more periodic systems than can be described by theories (axiomatizable sets of sentences) in that language, and so many of them will not be deterministic by the definition.
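The first of these observations can be checked directly on a finite toy case. The sketch below makes simplifying assumptions that are not Montague's: time is discrete and finite, a history is a tuple of states indexed by time, and a theory is identified with the list of histories that realize it. The function name `is_deterministic` is ours.

```python
from itertools import combinations


def is_deterministic(histories):
    """True if any two histories that agree at some time agree at all times."""
    for h1, h2 in combinations(histories, 2):
        agree_at_some_time = any(a == b for a, b in zip(h1, h2))
        if agree_at_some_time and h1 != h2:
            return False
    return True


# A "theory" realized by a single, non-periodic history: no pair of
# histories exists, so the condition holds vacuously.
single_history = [(0, 1, 2, 3)]

# Two histories that coincide at first and then diverge.
branching = [(0, 1, 2), (0, 1, 3)]

# Two periodic histories that never coincide at any time.
out_of_phase = [(0, 1, 0, 1), (1, 0, 1, 0)]

print(is_deterministic(single_history))
print(is_deterministic(branching))
print(is_deterministic(out_of_phase))
```

The single-history case comes out deterministic simply because there is nothing for it to disagree with, whatever the history looks like.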

Disconcertingly, what we have here is not a result about science, in and by itself, so to speak, but a result that is due to defining determinism in terms of what can be described in a particular language.

Mid-Twentieth Century: First Focus on Models Rather Than Theories

Discontent with the traditional outlook took several forms that would have lasting impact on the coming decades, notably the turn to scientific realism by the Minnesota Center for the Philosophy of Science (Wilfrid Sellars and Grover Maxwell) and the turn to the history of science that began with Thomas Kuhn’s The Structure of Scientific Revolutions (1962), which was first published as a volume in the International Encyclopedia of Unified Science. Whereas the logical positivist tradition had viewed scientific theoretical language as needing far-reaching interpretation to be understood, both these seminal developments involved viewing scientific language as part of natural language, understood prior to analysis.

But the explicit reaction that for understanding scientific representation the focus had to be on models rather than on a theory’s linguistic formulation, and that models had to be studied independently as mathematical structures, came from a third camp: from Patrick Suppes, with the slogan “mathematics, not meta-mathematics!” (Suppes 1962, 1967).

Suppes provided guiding examples through his work on the foundations of psychology (specifically, learning theory) and on physics (specifically, classical and relativistic particle mechanics). In each case he followed a procedure typically found in contemporary mathematics, exemplified in the replacement of Euclid’s axioms by the definition of Euclidean spaces as a class of structures. Thus, Suppes replaced Newton’s laws, which had been explicated as axioms in a language, by the defining conditions on the set of structures that count as Newtonian systems of particle mechanics. To study Newton’s theory is, in Suppes’s view, to study this set of mathematical structures.

But Suppes was equally intent on refocusing the relationship between pure mathematics and empirical science, starting with his address to the 1960 International Congress on Logic, Methodology, and Philosophy of Science, “Models of Data” (Suppes 1962). In his discussion “Models versus Empirical Interpretations of Theories” (Suppes 1967), the new focus was on the relationship between theoretical models and models of experiments and of data gathered in experiments. As he presented the situation, “We cannot literally take a number in our hands and apply it to a physical object. What we can do is show that the structure of a set of phenomena under certain empirical operations is the same as the structure of some set of numbers under arithmetical operations and relations” (Suppes 2002, 4). Then he introduced, if still in an initial sketch form, the importance of data models and the hierarchy of modeling activities that both separate and link the theoretical models to the practice of empirical inquiry:

The concrete experience that scientists label an experiment cannot itself be connected to a theory in any complete sense. That experience must be put through a conceptual grinder . . . [Once the experience is passed through the grinder,] what emerges are the experimental data in canonical form. These canonical data constitute a model of the results of the experiment, and direct coordinating definitions are provided for this model rather than for a model of the theory . . . The assessment of the relation between the model of the experimental results and some designated model of the theory is a characteristic fundamental problem of modern statistical methodology. (Suppes 2002, 7)

While still in a “formal” mode—at least as compared with the writings of a Maxwell or a Sellars, let alone Kuhn—the subject has clearly moved away from preoccupation with formalism and logic to be much closer to the actual scientific practice of the time.

The Semantic Approach

What was not truly possible, or advisable, was to banish philosophical issues about language entirely from philosophy of science. Suppes offered a correction to the extremes of Carnap and Montague, but many issues, such as the character of physical law, of modalities, possibilities, counterfactuals, and the terms in which data may be presented, would remain. Thus, at the end of the sixties, a via media was begun by Frederick Suppe and Bas van Fraassen under the name “the Semantic Approach” (Suppe 1967, 1974, 2000; van Fraassen 1970, 1972).

The name was perhaps not quite felicitous; it might easily suggest either a return to meta-mathematics or alternatively a complete banishing of syntax from between the philosophers’ heaven and earth. In actuality it presented a focus on models, understood independently of any linguistic formulation of the parent theory but associated with limited languages in which the relevant equations can be expressed to formulate relations among the parameters that characterize a target system.

In this approach the study of models remained closer to the mathematical practice found in the sciences than we saw in Suppes’s set-theoretic formulations. Any scientist is thoroughly familiar with equations as a means of representation, and since Galois it has been common mathematical practice to study equations by studying their sets of solutions. When Tarski introduced his new concepts in the study of logic, he had actually begun with a commonplace in the sciences: to understand an equation is to know its set of solutions.

As an example, let us take the equation x² + y² = 2, which has four solutions in the integers, with x and y each taking the value +1 or −1. Reifying the solutions, we can take them to be the sequences <+1, +1>, <−1, +1>, <+1, −1>, and <−1, −1>. Tarski would generalize this and give it logical terminology: these sequences satisfy the sentence “x² + y² = 2.” So when Tarski assigned sets of sequences of elements to sentences as their semantic values, he was following that mathematical practice of characterizing equations through their sets of solutions. It is in this fashion that one arrives at what in logical terminology is a model. It is a model of a certain set of equations if the sequences in the domain of integers, with the terms’ values as specified, satisfy those equations. The set of all models of the equations, so understood, is precisely the set of solutions of those equations.[1]
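The idea that a sentence is characterized by the set of sequences satisfying it can be illustrated with a minimal sketch. The function name `satisfies` and the finite search window are assumptions made for illustration only; they are not part of Tarski's or the authors' own apparatus.

```python
from itertools import product


def satisfies(assignment):
    """Return True if the sequence (x, y) satisfies the sentence x^2 + y^2 = 2."""
    x, y = assignment
    return x**2 + y**2 == 2


# Assumption: a small finite window of integers stands in for the whole domain.
# Any integer solution must have |x| <= 1 and |y| <= 1, so -3..3 loses nothing.
domain = range(-3, 4)

# The "semantic value" of the sentence: the set of sequences that satisfy it.
solutions = sorted(pair for pair in product(domain, repeat=2) if satisfies(pair))

print(solutions)
```

On this toy reading, the list `solutions` plays the part of the set of solutions, and each sequence in it is a satisfying assignment of values to x and y in the integers.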

The elements of a sequence that satisfy an equation may, of course, not be numbers; they may be vectors or tensors or scalar functions on a vector space, and so forth. Thus, the equation picks out a region in a space to which those elements belong—and that sort of space then becomes the object of study. In meta-mathematics this subject is found more abstractly: the models are relational structures, domains of elements with relations and operations defined on them. Except for its generality, this does not look unfamiliar to the scientist. A Hilbert space with a specific set of Hermitean operators, as a quantum-mechanical model, is an example of such a relational structure.

The effect of this approach to the relation between theories and models was to see the theoretical models of a theory as clustered in ways natural to a theory’s applications. In the standard example of classical mechanics, the state of a particle is represented by three spatial and three momentum coordinates; the state of an N-particle system is thus represented by 3N spatial and 3N momentum coordinates. The space for which these 6N-tuples are the points is the phase space common to all models of N-particle systems. A given special sort of system will be characterized by conditions on the admitted trajectories in this space. For example, a harmonic oscillator is a system defined by conditions on the total energy as a function of those coordinates. Generalizing on this, a theory is presented through the general character of a “logical space” or “state space,” which unifies its theoretical models into families of models, as well as the data models to which the theoretical models are to be related, in specific ways.
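As a rough sketch of the state-space picture, the following assumes a one-dimensional harmonic oscillator with a single particle, so that its phase space has two coordinates rather than six. The function names, parameter values, and the closed-form trajectory are illustrative assumptions, not taken from the text.

```python
import math


def phase_space_dimension(n_particles):
    """Number of coordinates for N particles: 3N spatial plus 3N momentum."""
    return 3 * n_particles + 3 * n_particles


def oscillator_energy(q, p, mass, stiffness):
    """Total energy of a one-dimensional harmonic oscillator at the point (q, p)."""
    return p**2 / (2 * mass) + 0.5 * stiffness * q**2


def oscillator_state(t, amplitude, mass, stiffness):
    """Point (q, p) reached at time t on the standard closed-form trajectory."""
    omega = math.sqrt(stiffness / mass)
    q = amplitude * math.cos(omega * t)
    p = -mass * amplitude * omega * math.sin(omega * t)
    return q, p


# Hypothetical parameter values chosen only to keep the arithmetic simple.
mass, stiffness, amplitude = 2.0, 8.0, 0.5

# The admitted trajectory stays on a single energy surface in phase space.
energies = [
    oscillator_energy(*oscillator_state(t, amplitude, mass, stiffness), mass, stiffness)
    for t in (0.0, 0.3, 1.0, 2.5)
]

print(phase_space_dimension(4))
print(energies)
```

Here the condition on the total energy picks out which curves in the shared phase space count as trajectories of this special sort of system, which is the sense in which the state space groups models into families.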

Reaction: A Clash of Attitudes and a Different Concept of Modeling

After the death of the Received View (to use Putnam’s term), it was perhaps the semantic approach, introduced at the end of the 1960s, that became for a while the new orthodoxy, perhaps even until its roughly fiftieth anniversary (Halvorson 2012, 183). At the least it was brought into many areas of philosophical discussion about science, with applications extended, for example, to the philosophy of biology (e.g., Lloyd 1994). Thomas Kuhn exclaimed, in his outgoing address as president of the Philosophy of Science Association, “With respect to the semantic view of theories, my position resembles that of M. Jourdain, Moliere’s bourgeois gentilhomme, who discovered in middle years that he’d been speaking prose all his life” (1992, 3).

But a strong reaction had set in about midway in the 1980s, starting with Nancy Cartwright’s distancing herself from anything approaching formalism in her How the Laws of Physics Lie: “I am concerned with a more general sense of the word ‘model.’ I think that a model—a specially prepared, usually fictional description of the system under study—is employed whenever a mathematical theory is applied to reality, and I use the word ‘model’ deliberately to suggest the failure of exact correspondence” (Cartwright 1983, 158–59). Using the term “simulacra” to indicate her view of the character and function of models, she insists both on the continuity with the semantic view and the very different orientation to understanding scientific practice:

To have a theory of the ruby laser [for example], or of bonding in a benzene molecule, one must have models for those phenomena which tie them to descriptions in the mathematical theory. In short, on the simulacrum account the model is the theory of the phenomenon. This sounds very much like the semantic view of theories, developed by Suppes and Sneed and van Fraassen. But the emphasis is quite different. (Cartwright 1983, 159)

What that difference in emphasis leads to became clearer in her later writings, when Cartwright insisted that a theory does not just arrive “with a belly-full” of models. This provocative phrasing appeared in a joint paper in the mid-1990s, where the difference between the received view and the semantic approach was critically presented:

[The received view] gives us a kind of homunculus image of model creation: Theories have a belly-full of tiny already-formed models buried within them. It takes only the midwife of deduction to bring them forth. On the semantic view, theories are just collections of models; this view offers then a modern Japanese-style automated version of the covering-law account that does away even with the midwife. (Cartwright, Shomar, and Suárez 1995, 139)

According to Cartwright and her collaborators, the models developed in application of a theory draw on much that is beside or exterior to that theory, and hence not among whatever the theory could have carried in its belly.

What is presented here is not a different account of what sorts of things models are, but rather a different view of the role of theories and their relations to models of specific phenomena in their domain of application. As Suárez (1999) put it, their slogan was that theories are not sets of models, they are tools for the construction of models. One type of model, at least, has the traditional task of providing accurate accounts of target phenomena; these they call representative models. They maintain, however, that we should not think of theories as in any sense containing the representative models that they spawn. Their main illustration is the London brothers’ model of superconductivity. This model is grounded in classical electromagnetism, but that theory only provided tools for constructing the model and was not by itself able to provide the model. That is, it would not have been possible to just deduce the defining equations of the model in question after adding data concerning superconductivity to the theory.[2]

Examples of this are actually ubiquitous: a model of any concretely given phenomenon will represent specific features not covered in any general theory, features that are typically represented by means derived from other theories or from data.[3]

It is therefore important to see that the turn taken here, in the philosophical attention to scientific modeling in practice, is not a matter of logical dissonance but of approach or attitude, which directs how a philosopher’s attention selects what is important to the understanding of that practice. From the earlier point of view, a model of a theory is a structure that realizes (satisfies) the equations of that theory, in addition to other constraints. Cartwright and colleagues do not present an account of models that contradicts this. The models constructed independently of a theory, to which Cartwright and colleagues direct our attention, do satisfy those equations—if they did not, then the constructed model’s success would tend to refute the theory. The important point is instead that the process of model construction in practice was not touched on or illuminated in the earlier approaches. The important change on the philosophical scene that we find here, begun around 1990, is the attention to the fine structure of detail in scientific modeling practice that was not visible in the earlier more theoretical focus.[4]

Tangled Threads and Unheralded Changes

A brief history of this sort may give the impression of a straightforward, linear development of the philosophy of science. That is misleading. Just a single strand can be followed here, guided by the need to locate the contributions in this volume in a fairly delineated context. Many other strands are entangled with it. We can regard David Lewis as continuing Carnap’s program in the 1970s and 1980s (Lewis 1970, 1983; for critique see van Fraassen 1997). Equally, we can see Hans Halvorson as continuing as well as correcting the semantic approach in the twenty-first century (Halvorson 2012, 2013; for discussion see van Fraassen 2014a). More intimately entangled with the attention to modeling and experimenting in practice are the writings newly focused on scientific representation (Suárez 1999, 2003; van Fraassen 2008, 2011). But we will leave aside those developments (as well as much else) to restrict this chapter to a proper introduction to the articles that follow. Although the philosophy of science community is by no means uniform in either focus or approach, the new attitude displayed by Cartwright did become prevalent in a segment of our discipline, and the development starting there will provide the context for much work being done today.

Models as Mediators

The redirection of attention to practice thus initiated by Cartwright and her collaborators in the early 1990s was systematically developed by Morgan and Morrison in their contributions to their influential collection Models as Mediators (1999). Emphasizing the autonomy of models and their independence from theory, they describe the role or function of models as mediating between theory and phenomena.

What, precisely, is the meaning of this metaphor? Earlier literature typically assumed that in any scientific inquiry there is a background theory, of which the models constructed are, or are clearly taken to be, realizations. At the same time, the word “of” looks both ways, so to speak: those models are offered as representations of target phenomena, as well as being models of a theory in whose domain those phenomena fall. Thus, the mediation metaphor applies there: the model sits between theory and phenomenon, and the bidirectional “of” marks its middle place.

The metaphor takes on a stronger meaning with a new focus on how models may make their appearance, to represent experimental situations or target phenomena, before there is any clear application of, let alone derivation from, a theory. A mediator effects, and does not just instantiate, the relationship between theory and phenomenon. The model plays a role (1) in the representation of the target, but also (2) in measurements and experiments designed to find out more about the target, and then farther down the line (3) in the prediction and manipulation of the target’s behavior.